Using OpenCV for image processing and machine learning inference

Defining a Set of Gestures

The first step toward good gesture recognition was deciding on a set of gestures. We chose five for this project: left, right, OK, "spidey", and peace sign. They were fun and well suited to inference: none required motion tracking over a series of frames, yet they were different enough to be distinguished from a single frame.

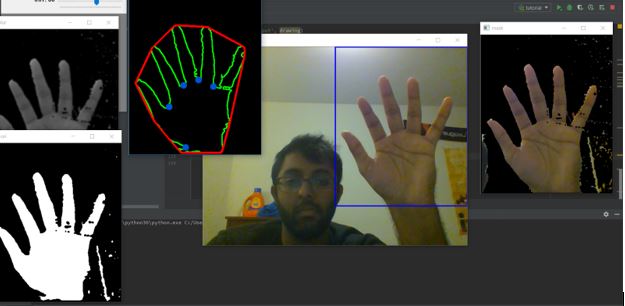

Detecting the Hand and Fingers with OpenCV

The next step after deciding on a set of gestures was collecting a training set from which an algorithm could learn them. However, using raw camera frames directly as input is memory intensive and computationally expensive. The image processing steps must subtract the background from each frame, resize it as necessary, convert it to black and white, and perform edge detection to locate the hand and infer a gesture. Thankfully, OpenCV includes built-in functions for all of these steps.

The first important function was OpenCV's Gaussian-mixture background subtractor, which essentially detects motion across successive frames to separate the foreground from the background. Once the background pixels were identified, a mask was applied to remove them. OpenCV then found the convex hull of the foreground points to determine how many "extreme" points were visible in the frame. For hand gestures, these extrema correspond to the fingertips, and this information let us separate the hand from the rest of the image.

After this image processing, we used a webcam to collect 10,000 training images for the model, 2,000 per gesture. Each was downsampled to a 128x128 black-and-white image for use as input to the machine learning models.

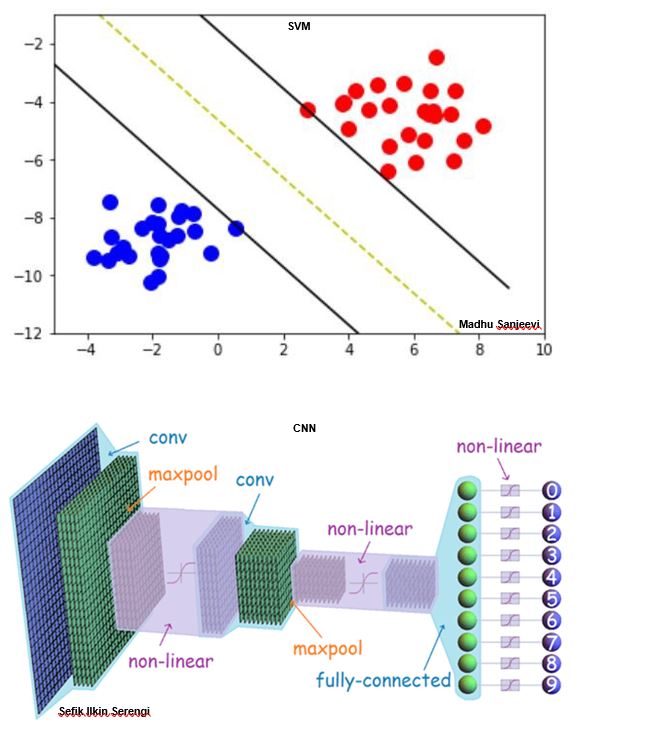

Machine Learning for Image Detection

After collecting the training data, the next step was to use machine learning algorithms to distinguish between the gestures. Once the collected image frames were imported and shuffled, we first tried a deep learning approach based on convolutional neural networks. We trained architectures with 2-3 hidden layers, ReLU and tanh activations, and 128, 256, or 512 nodes in each layer, using batch sizes of 16, 32, and 64 for 10-12 epochs, but most failed to converge. This was likely due to the extreme sparsity of the data. Each network was coded in TensorFlow using the Keras module, but none achieved a training accuracy above 40%. That was better than random guessing (20%) but still not sufficient to recognize gestures reliably.

So, we resorted to a Support Vector Machine (SVM), believing this approach could handle data sparsity with greater success. We used the scikit-learn library to train SVMs with three kernels: linear, radial basis function (RBF), and polynomial. The RBF and polynomial kernels did no better than the CNN, but the linear SVM was quite successful, with training and testing accuracy both over 95%. Empirical testing with the laptop webcam showed that the linear SVM could distinguish gestures with some success, though its predictions were quite noisy, presumably because of varying lighting conditions and hand locations.

The goal was to load the trained model (which took up about 700 MB) onto the Raspberry Pi onboard the Magic Mirror and run inference there. However, scikit-learn was not supported on the Pi, so we reverted to gesture recognition based on the number of fingers detected, which did not use the machine learning component.
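
To make the SVM step concrete, here is a minimal sketch of training a linear-kernel classifier on flattened images with scikit-learn. It is not the project's training script: the helper names, the 80/20 split, and the small synthetic dataset (which stands in for the real 128x128 gesture images) are assumptions made for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

GESTURES = ["left", "right", "ok", "spidey", "peace"]


def make_synthetic_gestures(n_per_class=40, size=16, seed=0):
    """Stand-in data (assumption): each class lights up a different band of rows."""
    rng = np.random.default_rng(seed)
    band = size // len(GESTURES)
    images, labels = [], []
    for label in range(len(GESTURES)):
        for _ in range(n_per_class):
            img = rng.random((size, size)) * 0.2  # faint background noise
            img[label * band:(label + 1) * band, :] += 0.8
            images.append(img)
            labels.append(label)
    return np.array(images), np.array(labels)


def train_gesture_svm(images, labels, kernel="linear", seed=0):
    """Flatten images, split into train/test sets, and fit an SVM.

    Returns (model, training accuracy, testing accuracy).
    """
    X = images.reshape(len(images), -1)  # one row of pixels per image
    X_train, X_test, y_train, y_test = train_test_split(
        X, labels, test_size=0.2, stratify=labels, random_state=seed
    )
    model = SVC(kernel=kernel)
    model.fit(X_train, y_train)
    return model, model.score(X_train, y_train), model.score(X_test, y_test)
```

Passing `kernel="rbf"` or `kernel="poly"` to the same function would give the other two kernels mentioned above for comparison.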

Finger Detection

Ultimately, the scikit-learn module did not load on the Raspberry Pi, due to what seemed to be a compatibility error with Python 2 and Raspbian Stretch. As a result, we used OpenCV to detect fingers: a convex-hull point separated from an edge by an angle of under 90 degrees was recognized as a finger. The UI could then be updated by signalling the intended page with the corresponding number of fingers. In practice, while multiple fingers were sometimes recognized, holding up one finger was the only reliable gesture from the user's side, possibly due to a combination of resolution, downsampling, and lighting. We used this finger recognition to toggle the clock visibility.
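
One common way to apply a 90-degree rule like this is to measure the angle at each convexity defect of the hand contour, that is, at the deepest point between two neighbouring hull points. The sketch below shows only that angle test, in plain Python. It assumes the (start, end, far) point triples have already been extracted, for example from `cv2.convexityDefects`, and the function names are hypothetical. It may differ from the exact rule the project used.

```python
import math


def angle_at(far, start, end):
    """Angle in degrees at `far`, between the rays toward `start` and `end`."""
    a = math.dist(start, end)
    b = math.dist(far, start)
    c = math.dist(far, end)
    if b == 0 or c == 0:
        return 180.0  # degenerate triple: treat as "not a finger gap"
    # Law of cosines, clamped to guard against floating-point drift.
    cos_angle = (b * b + c * c - a * a) / (2 * b * c)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def count_narrow_gaps(defects, max_angle=90.0):
    """Count (start, end, far) triples whose angle at `far` is under max_angle."""
    return sum(
        1 for start, end, far in defects
        if angle_at(far, start, end) < max_angle
    )
```

Under this convention, each narrow gap sits between two raised fingers, so n gaps would suggest n + 1 fingers. A single raised finger produces no such gap and would need a separate check.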