Hadoop on Raspberry Pi

Yupeng Lin (yl2458)

Introduction

Hadoop is one of the most popular open-source frameworks for running distributed systems. However, buying servers for a cluster is usually expensive. The Raspberry Pi provides a low-cost Linux platform, so it is feasible to set up a Raspberry Pi cluster for under 100 dollars and run Hadoop on it instead of paying for an expensive cloud service. The project is also an opportunity to build up networking knowledge.

Objective

There are three main objectives in this project. The first is to set up the Raspberry Pi cluster; the second is to install and configure Hadoop specifically for the Pi; the third is to run a Hadoop program on the cluster and compare its performance with a regular Java program.

Design

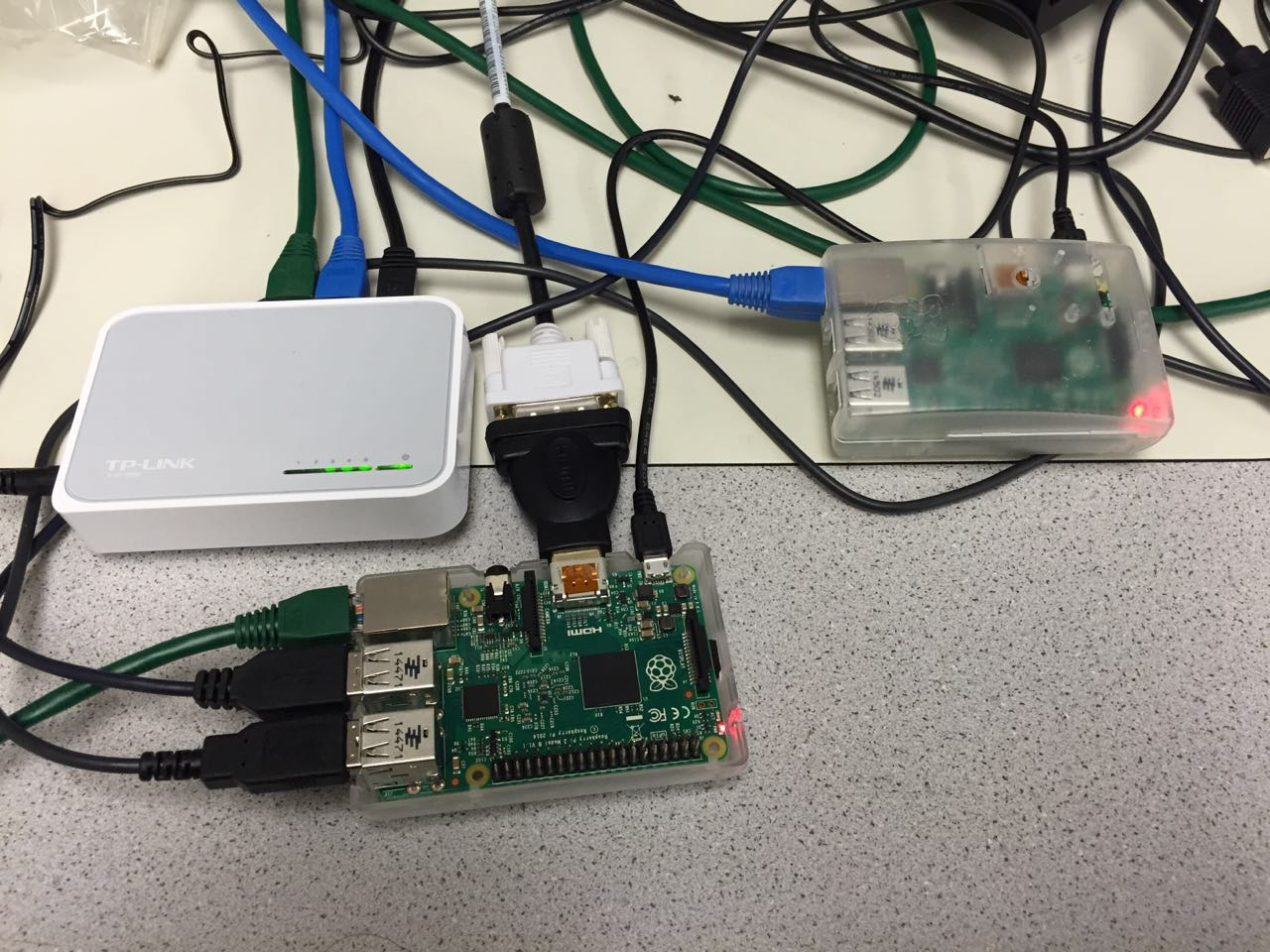

The Hadoop version I used for this project is Hadoop 1.2.0, which can be downloaded from the Apache website. The compile process is much the same as for other software. It is also necessary to set the environment variables in the .bashrc file. After installing Hadoop, I added a dedicated user, hduser, for running Hadoop, and set up SSH keys for the network connection. I used ApplePi-Baker to copy the image and burn it for a second Raspberry Pi.

The two Raspberry Pis are connected through an Ethernet switch. For the network configuration, the Cornell campus network provides dynamic IP addresses, which works for Hadoop. However, whenever I switch to another network port, the network configuration has to be redone. Therefore, I configured a static IP for each Raspberry Pi. The configuration is located in /etc/network/interfaces and /etc/resolv.conf. It is also important to add the host names to /etc/hosts. At this stage, the Raspberry Pis run in distributed mode.

The first program to run in Hadoop is wordcount, which counts the frequency of each word. To compare its performance with a single-threaded Java program, I also wrote a Java program that gathers the same statistics with a hash map.
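To make the wordcount job more concrete, here is a minimal plain-Java sketch of the map and reduce steps it performs. It does not use the Hadoop API, and it is not the code that ran on the cluster; the class name `MapReduceSketch`, the methods `mapSplit` and `reduce`, and the sample strings are hypothetical names chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;

// Illustrative only: mimics the word-count data flow without any Hadoop classes.
public class MapReduceSketch {

    // "Map" step: count the words of one input split, independently of other splits.
    static Map<String, Integer> mapSplit(String split) {
        Map<String, Integer> counts = new HashMap<>();
        StringTokenizer st = new StringTokenizer(split);
        while (st.hasMoreTokens()) {
            counts.merge(st.nextToken(), 1, Integer::sum);
        }
        return counts;
    }

    // "Reduce" step: add up the partial counts that the map steps produced per word.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> total = new HashMap<>();
        for (Map<String, Integer> partial : partials) {
            for (Map.Entry<String, Integer> e : partial.entrySet()) {
                total.merge(e.getKey(), e.getValue(), Integer::sum);
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // Two hypothetical splits standing in for two blocks of the input file.
        String[] splits = { "the pi runs hadoop", "hadoop counts the words" };
        List<Map<String, Integer>> partials = new ArrayList<>();
        for (String split : splits) {
            partials.add(mapSplit(split));
        }
        Map<String, Integer> total = reduce(partials);
        System.out.println("the=" + total.get("the")
                + " hadoop=" + total.get("hadoop")
                + " pi=" + total.get("pi"));
    }
}
```

In a real cluster each split would be processed on whichever node holds the block, and the partial counts would travel over the network to the reducer; the sketch only shows the arithmetic.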

Testing

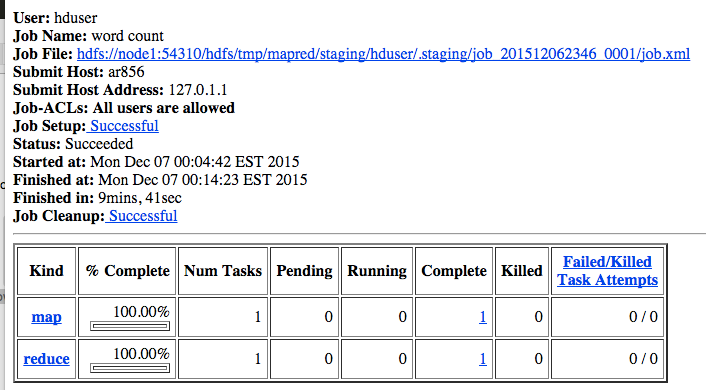

To test Hadoop, I first used a small file of 600 KB. With the default Hadoop configuration, the result was quite slow: the task took 10 minutes to complete.

Why did it take so long to process the text? Hadoop takes the input file and stores it in blocks, and the block size determines the number of mappers. The default block size is 64 MB. When the input file is smaller than the block size, it causes overhead (repetition), and this overhead leads to unnecessary computation. The remedy is therefore to provide larger files and configure smaller blocks. I downloaded a text file of 35 MB and shrank the block size to 5 MB.

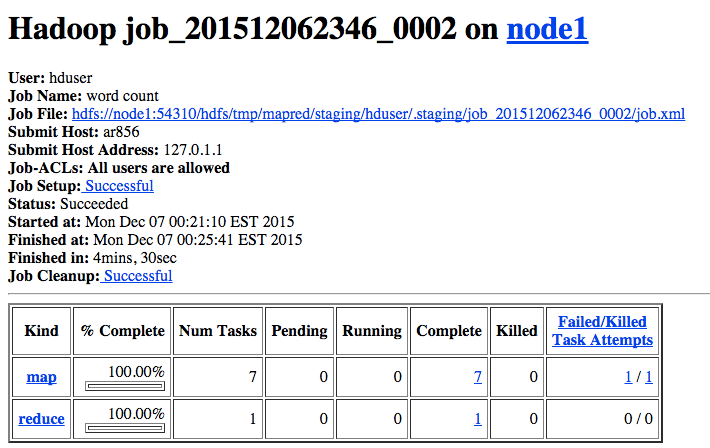

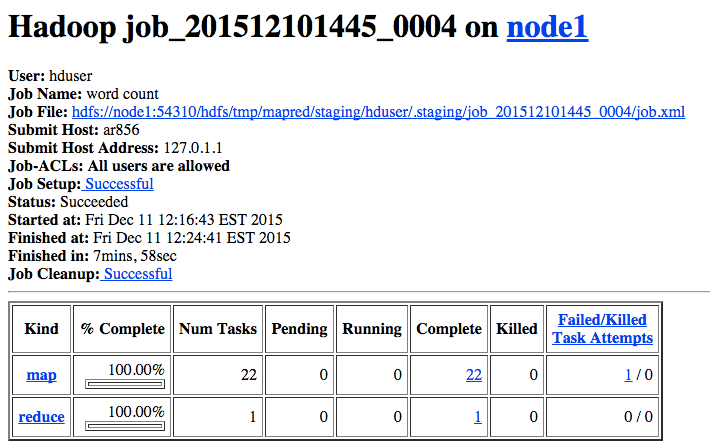

As we can see from the result, even though the file is larger (35 MB), the run time decreased to 4:30. It is worth noting that the number of map tasks increased to 7, which means there are 7 blocks, each containing a distinct segment of the text. What if I provide an even larger file? I downloaded a very large file, around 110 MB, to the Raspberry Pi. The file is split into 22 blocks, i.e. 22 map tasks. This main phase took 7 minutes and 58 seconds.
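The block counts quoted above follow from dividing the file size by the block size and rounding up. The sketch below reproduces that arithmetic under the assumption that the file sizes are exactly the rounded figures from this report; the class `SplitCount` and the method `blocks` are hypothetical, and Hadoop's real split logic has additional rules that are ignored here.

```java
// Illustrative arithmetic only: how many blocks a file of a given size occupies.
public class SplitCount {
    static final long KB = 1024L;
    static final long MB = 1024L * KB;

    // Ceiling division: a partially filled last block still counts as one block.
    static long blocks(long fileBytes, long blockBytes) {
        return (fileBytes + blockBytes - 1) / blockBytes;
    }

    public static void main(String[] args) {
        // 600 KB file with the default 64 MB block size
        System.out.println(blocks(600 * KB, 64 * MB)); // prints 1
        // 35 MB file with 5 MB blocks
        System.out.println(blocks(35 * MB, 5 * MB));   // prints 7
        // 110 MB file with 5 MB blocks
        System.out.println(blocks(110 * MB, 5 * MB));  // prints 22
    }
}
```

With the default block size the 600 KB file fits in a single block, so only one map task can work on it regardless of how many nodes are available.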

To compare with a single-threaded Java program, I also wrote a program that tokenizes every word and puts it in a hash table. The run time was recorded with the perf command. For the small file (600 KB), the run time was 0.56 seconds; for the medium file (35 MB), 26.31 seconds; for the large file (110 MB), 97.23 seconds.

Results

For these small files, the running time is greater in Hadoop than in the simple Java program. A big file usually means 10 GB or more, and it is at that scale that Hadoop begins to play its role. Since each block consumes a single process, the seven blocks of the 35 MB file use seven processes, and switching between those processes is very time-consuming. In addition, there is preparation: before the mappers and reducers begin to work, Hadoop takes time to deploy the jar files and input data. All of this time is insignificant when dealing with very large files, but it is clearly a relatively long time when processing a small file.
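One way to see the fixed overhead shrinking in relative terms is to divide each Hadoop run time by the matching single-threaded run time from the Testing section. The sketch below does that with the reported figures; it assumes the 10 minute, 4:30, and 7:58 timings are comparable end-to-end numbers, which the report does not state precisely. The class `Slowdown` and its method are hypothetical.

```java
import java.util.Locale;

// Illustrative arithmetic only: ratio of Hadoop run time to plain Java run time.
public class Slowdown {

    static double slowdown(double hadoopSeconds, double javaSeconds) {
        return hadoopSeconds / javaSeconds;
    }

    public static void main(String[] args) {
        String[] labels = { "600 KB", "35 MB", "110 MB" };
        // Hadoop timings from the report: 10 min, 4 min 30 s, 7 min 58 s.
        double[] hadoop = { 10 * 60, 4 * 60 + 30, 7 * 60 + 58 };
        // Single-threaded Java timings from the report, in seconds.
        double[] java = { 0.56, 26.31, 97.23 };

        for (int i = 0; i < labels.length; i++) {
            System.out.println(String.format(Locale.ROOT,
                    "%-7s Hadoop/Java run time ratio: %.1f",
                    labels[i], slowdown(hadoop[i], java[i])));
        }
    }
}
```

The ratio falls from roughly a thousand for the 600 KB file to about 10 for 35 MB and about 5 for 110 MB, which is consistent with a startup cost that matters less as the input grows.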

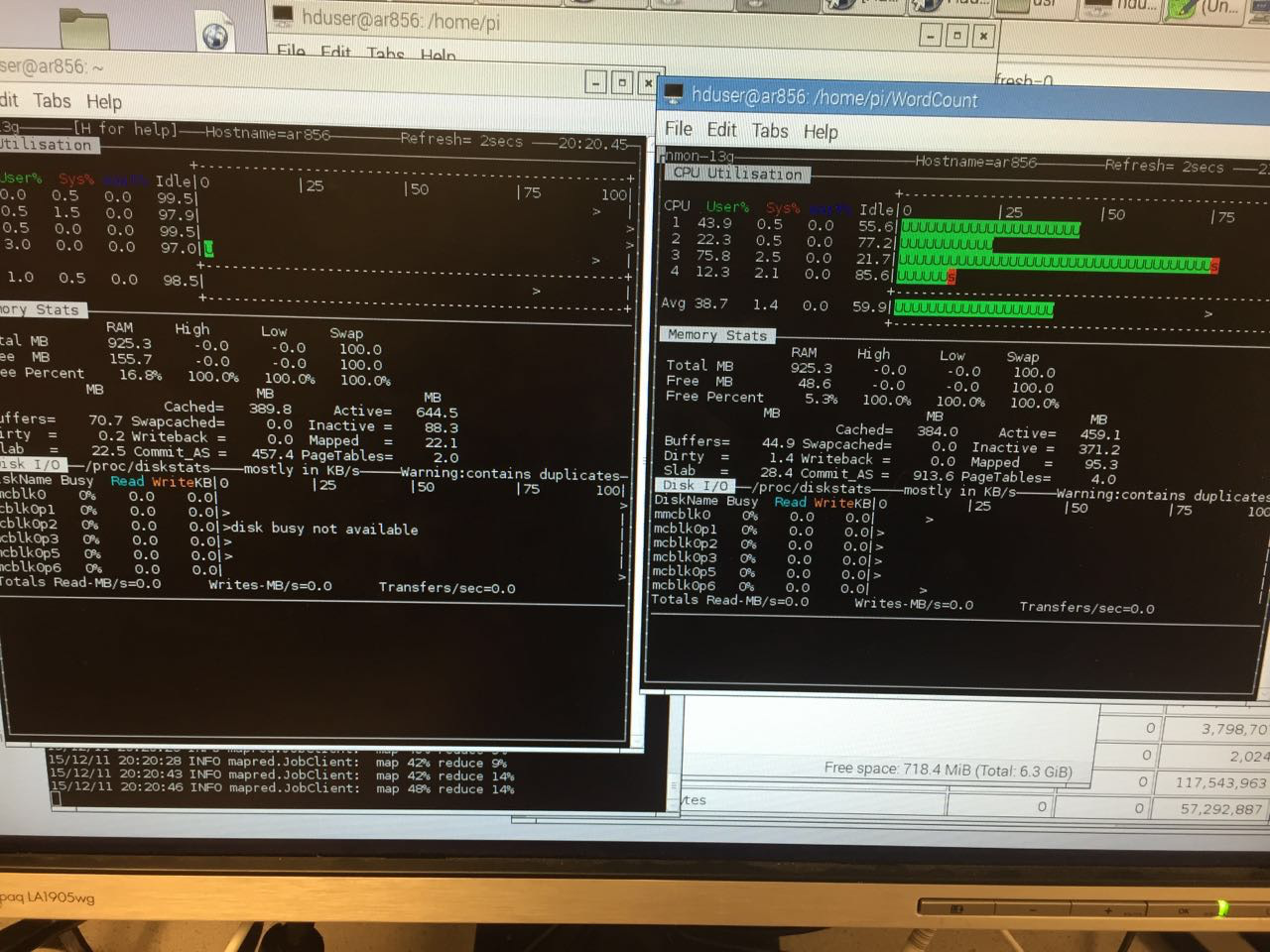

The CPU load is also uneven: the left side shows the CPU load of the master node, and the right side shows that of the slave node. The slave node may take up most of the computing work.

Conclusion

At the current stage, Hadoop does not meet the expectation of processing data faster than a regular single-threaded Java word-processing program. My Hadoop configuration does not fully utilize the power of a distributed system. However, I did see the potential for Hadoop to deal with larger data sets. During this project, I also learned a lot about networking.

Future Work

The first improvement for future development will be to add more slave nodes to the cluster, because the single slave node takes up most of the computational work. Another improvement will be to increase the storage capacity. The current SD card is 8 GB, and the virtual memory is also constrained. A larger drive would therefore enable the Pi to store larger files.

Code Appendix

    import java.io.*;
    import java.util.Hashtable;
    import java.util.Set;
    import java.util.StringTokenizer;

    /**
     * Created by linyupeng on 12/7/15.
     */
    public class WordCount {
        public static void main(String[] args) throws IOException {
            // Maps each whitespace-separated token to the number of times it occurs.
            Hashtable<String, Integer> freq = new Hashtable<>();
            String fileName = args[0];
            BufferedReader br = new BufferedReader(new FileReader(fileName));
            try {
                String line = null;
                while ((line = br.readLine()) != null) {
                    StringTokenizer st = new StringTokenizer(line);
                    while (st.hasMoreTokens()) {
                        String token = st.nextToken();
                        if (freq.containsKey(token)) {
                            int num = freq.get(token);
                            num++;
                            freq.put(token, num);
                        } else {
                            freq.put(token, 1);
                        }
                    }
                }
            } catch (IOException e) {
                System.out.println(e);
            } finally {
                br.close();
            }

            // Write one "[ word ] count" line per distinct token to stat.txt.
            BufferedWriter output = null;
            try {
                File file = new File("stat.txt");
                output = new BufferedWriter(new FileWriter(file));
                Set<String> keys = freq.keySet();
                for (String key : keys) {
                    String singleWord = "[ " + key + " ] " + freq.get(key) + "\n";
                    output.write(singleWord);
                }
            } catch (IOException e) {
                System.out.println(e);
            } finally {
                // output stays null if the FileWriter could not be opened.
                if (output != null) {
                    output.close();
                }
            }
        }
    }