Companion Robot

ECE 5725 Final Project(Monday session)

Kaixin Yang (ky427)

Klora Wang (hw768)

Xiangzhi

Tong(xt242)

Demonstration Video

Introduction

In today's fast-paced, technology-driven world, people of all ages and backgrounds are increasingly reliant on their smartphones for interaction and entertainment. While this trend brings many conveniences, it also contributes to social isolation and reduces face-to-face interactions. To address these challenges, we have designed Companion Robot, an innovative companion robot that provides meaningful interactions and offers a respite from screen time. Companion Robot is specifically designed to cater to various groups, offering them valuable opportunities to engage with a responsive and interactive companion. Whether it's for elderly individuals seeking companionship, children needing a playful friend, or anyone looking to reduce their smartphone dependency, our robot serves as a versatile and enjoyable solution. Our companion robot has three core modes that enhance the user experience. First, Chat Mode: the companion robot can participate in dialog, providing users with a sense of companionship and the fun of interactive dialog. Second, Play Mode: the robot has the ability to listen to the commands and execute user interactions, and can perform various actions according to the commands. Third, Music Mode: The robot companion robot is preloaded with 8 selected songs to provide a pleasant music experience, and the user can control the stop, fast-forward, rewind and exit music mode.

Project Objective:

- CAD Design and Assembly: Design and print various robot components using CAD software and assemble them to create the robot's physical structure.

- Ensure low latency in the process of voice recording, speech-to-text conversion, Large Language Model (LLM) responses, emotion analysis, and text-to-speech synthesis.

- Enable interaction with the robot using MPU6050 and PiTFT.

- Implement video and music play with button control.

- Add robot speech accompanied by cute background music.

- Design an attractive and user-friendly interface on the PiTFT.

- Raspberry Pi and Arduino Communication: Establish reliable communication between the Raspberry Pi and Arduino for seamless integration and coordination of different robot components.

- Servo Control via Arduino: Use the Arduino to control multiple servos, enabling the robot to perform a wide range of movements and actions based on user commands.

Design

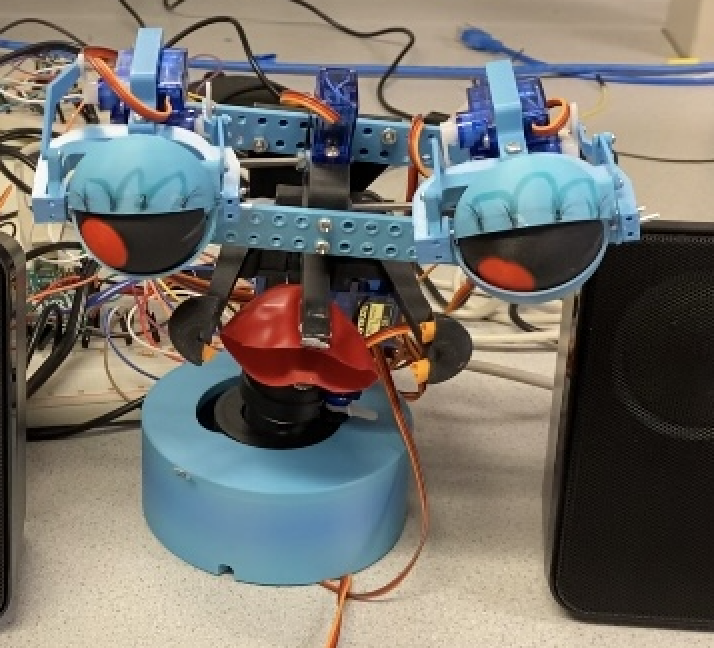

1. CAD Design and 3D Print

The robot's structure was based on an open-source project, particularly the dual-eye component. However, we modified and designed new mouth and base connections in AutoCAD. All solid components were 3D printed using a Bambu X1C FDM (Fused Deposition Modeling) machine. We used two types of filaments: PLA-CF, which contains carbon fiber for increased robustness, was used for heavy-bearing components, while standard PLA, being cost-effective and lower in density, was used for other parts.

2. Component Assembly

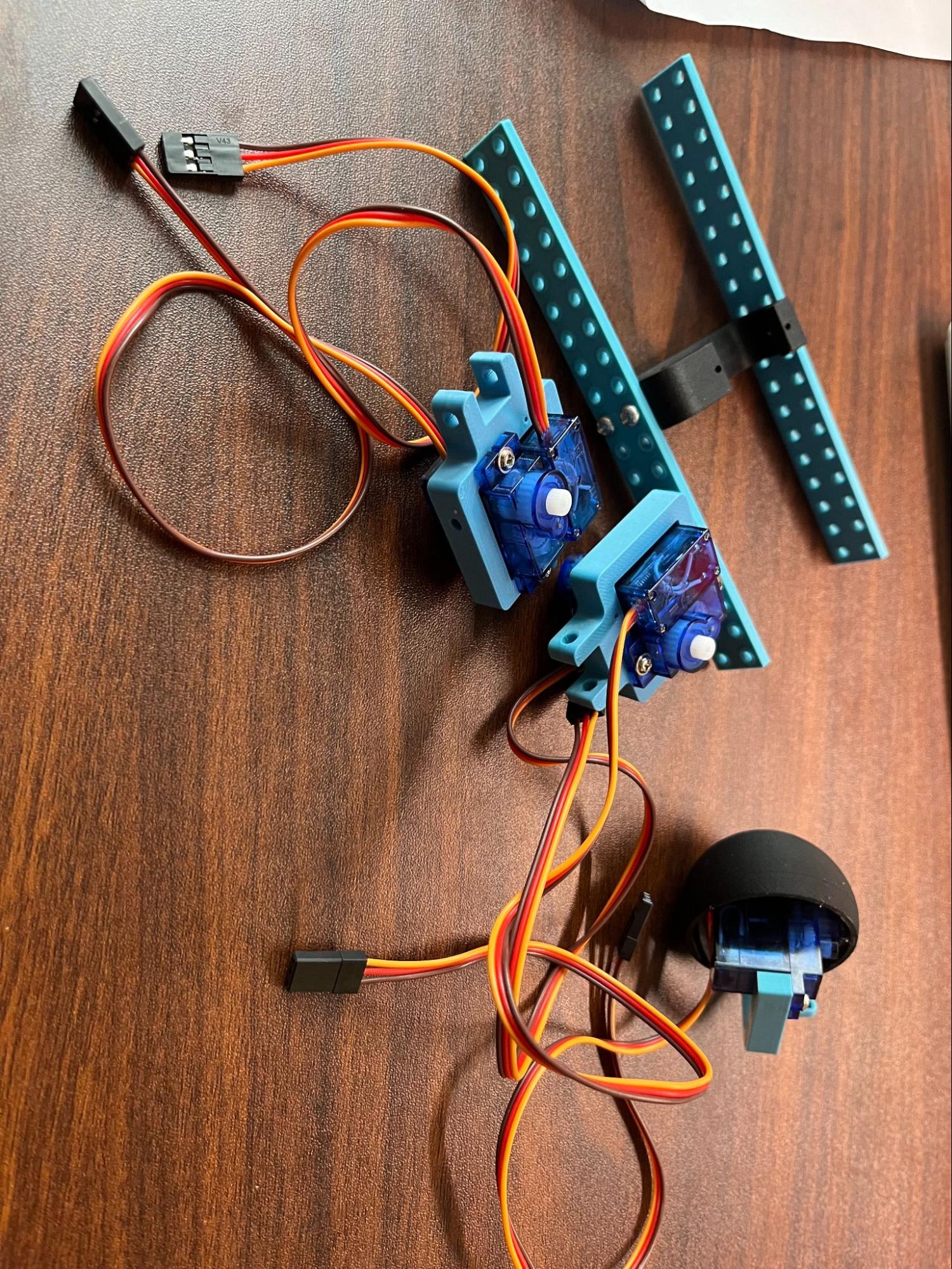

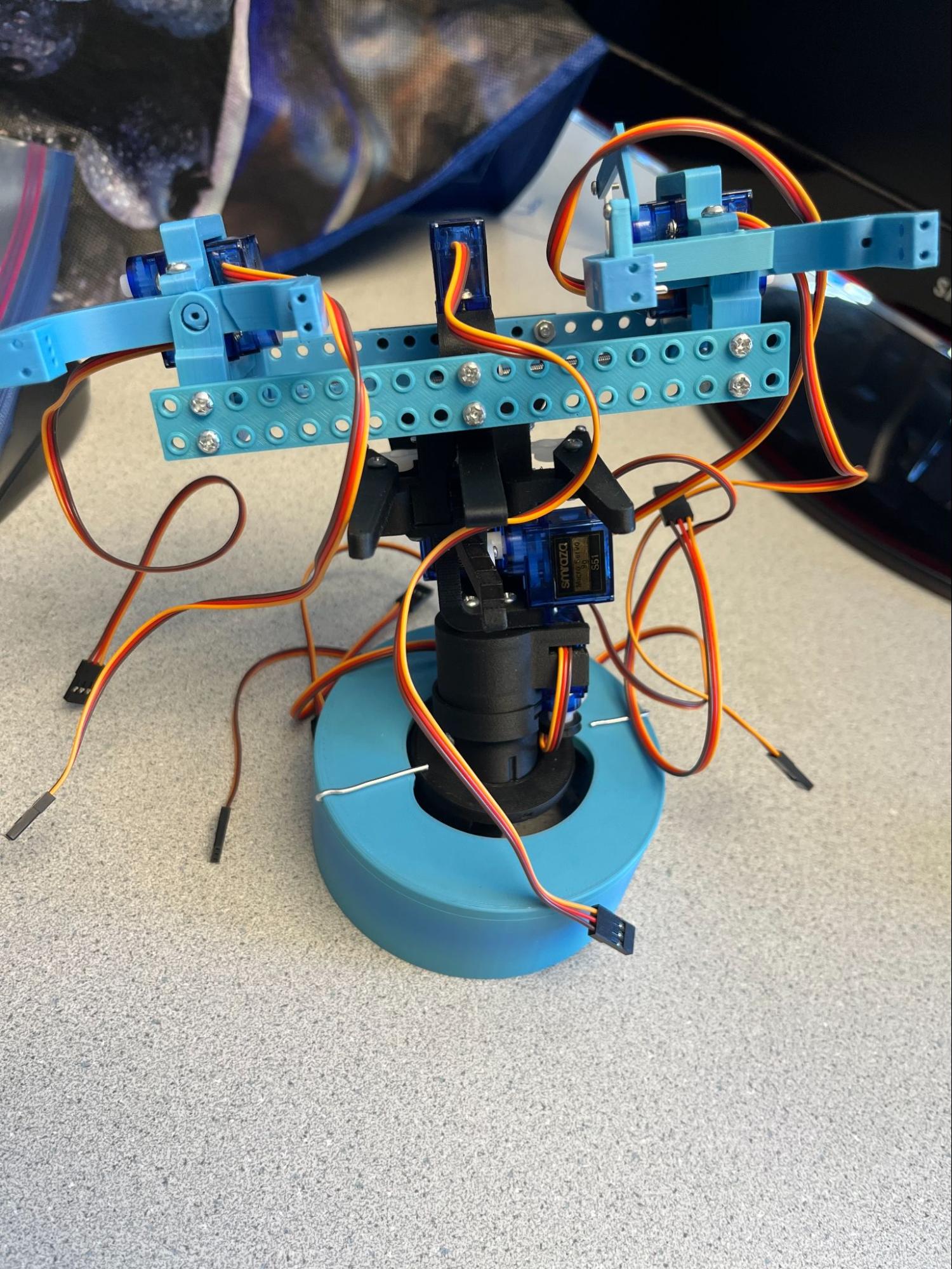

We used various M1.6, M2, and M3 screws to assemble all the components. Some parts, particularly the eye-ball movement control units, required disassembling and reassembling the servos to fit them into the frame. The head features five SG90 servos: four for the individual movements of the dual eyes and one for controlling the entire eyeball's movement. Additionally, two servos control the horizontal and vertical movement of the mouth. The eyelids are moved using gauge 12 aluminum wire sliders.

3. Robot Control Design

To control the Arduino, we integrated a complex servo control system to animate various components of a robotic or mechanical model, such as eyes, mouth, and body parts. These movements can simulate human or animal actions for educational or entertainment purposes. In the Arduino code, we begin by setting up multiple Servo objects for different functions—mouth movements (mouth_servo_r, mouth_servo_c), eye actions (eye_servo_rotation), and body animations (body_servo_rotation, body_rotation_2). Each servo is connected to specific pins on the Arduino board, with power and ground connections also made to the Arduino. We pre-set initial positions and movement parameters for each servo to ensure smooth operation from the start. To achieve the desired movements, we use timing mechanisms to manage when and how the servos move, creating life-like animations. The servos are updated at intervals that change depending on the model's actions, such as talking or reacting to different scenarios. By adjusting the interval values, we control the speed of movements to make them appear more natural. Using unsigned long variables, the code tracks the timing of movements to create fluid motion sequences. Servos are updated at intervals defined by variables such as interval_mouth_r for the mouth servos or interval_eye_in_left for eye movements. These intervals are dynamically adjusted based on the system's state to reflect different animation speeds required for scenarios like talking, listening, or expressive gestures. Our system's behavior is controlled by a state variable with 14 encoded states in the Arduino, such as calm speaking, happy speaking, sad speaking, listening, looking up, and looking down. The code adjusts the servo positions in response to external inputs received through the Arduino's serial interface, which indicates the robot's corresponding states and behaviors. This allows the model to respond to real-time interactions; when it receives a new state from the Raspberry Pi 4, it transitions between states like calm, happy, or sad, each with distinct motion patterns. The loop function continuously checks for serial data, updating the state and adjusting servo movements accordingly.

| State | Function | State | Function |

|---|---|---|---|

| 0 Calm State | Speaking with calm emotion | 8 Eye Left | Looking to Left |

| 1 Sad State | Speaking with sad emotion | 9 Eye Right | Looking to Right |

| 2 Happy State | Speaking with happy emotion | 10 Body Left | Body turns to the left |

| 3 Listen State | Listen to user voice | 11 Body Right | Body turns to the right |

| 4 Nothing State | No action | 12 Mouth small | Mouth open to small size |

| 5 Dancing State | Dancing while playing the music | 13 Mouth Middle | Mouth open to middle size |

| 6 Eye Down | Looking down | 14 Mouth Large | Mouth open to large size |

| 7 Eye Up | Looking up |

4. Rpi Software Design

1.Voice Streaming and Real-Time Speech-to-Text:

We connect a microphone to the Raspberry Pi to capture voice input. Using PyAudio's streaming function, we achieve real-time voice recording without waiting for the entire question to be completed. This streaming capability also allows us to implement streaming speech-to-text, further reducing latency. Initially, we encountered issues with voice input overflow during streaming. By fine-tuning the streaming buffer size in PyAudio's source code, we determined that a buffer size of 512 at a sample rate of 48000 is optimal. Latency is crucial for a real-time response companion robot. Once the speech is transcribed, we store each word in a list.

2. Robot Answer and Emotion Analysis (Large Language Model Inference):

We use Llama3, a state-of-the-art open-source large language model developed by Meta, specifically the Llama3 70B version, which was released last month. After receiving the transcribed text, we add prompts to simulate a robot's response to the user's input. For emotion analysis, we append, “The above is my input. What emotion do you think I am now? Respond with one word from [happy, sad, calm].” For generating the robot's response, we use, “Imagine you are a companion robot, and I am your host. Respond like a companion robot. Your response should be under 20 words, concise, and short.” We maintain a history list of the user's inputs and the LLM's answers to support continuous conversation context. This ensures the robot can recognize related questions or answers in ongoing interactions, which is vital for a companion robot. Additionally, while the robot processes the user's question, the speaker plays pleasant reminder music, creating a warmer interaction experience. Upon receiving the LLM's answer and emotion analysis, we send the corresponding emotion code to the Raspberry Pi and store the answer as a string for text-to-speech conversion.

3. Text-to-Speech:

Our companion robot supports various text-to-speech modes. For answering user questions, we use pure text-to-speech for clarity. In other contexts, such as greeting users upon entering each page, we use text-to-speech with background music to create a pleasant atmosphere.

4. Music Playback:

In music mode, we pre-download eight favorite song videos onto an SD card. When the user enters the song name on the PiTFT, we use MPlayer to play the video on the PiTFT.

5. Real-Time Voice Amplitude Detection:

We run an additional thread to detect voice amplitude in real-time while the robot answers questions. Based on a designed amplitude threshold, we send corresponding commands to the Arduino to control mouth movements.

6. Switching on PiTFT & MPU6050:

In play mode, we run two threads. One thread detects left and right switches on the PiTFT, sending corresponding commands to the Arduino to control the robot's tilt. The second thread detects PiTFT rotation using an MPU6050 sensor. This thread reads data from the MPU6050 and calculates rotation in the X and Y directions.

7. Greeting Voice for Each Page:

We designed greeting voices for each page, such as “Welcome to Group 7's companion robot. I am Cute 7, ready to serve.”

8. Communication with Arduino:

We use a custom communication function to send various state commands to the Arduino, controlling the robot's state. This will be detailed in the robot control section.

5. User Interface design

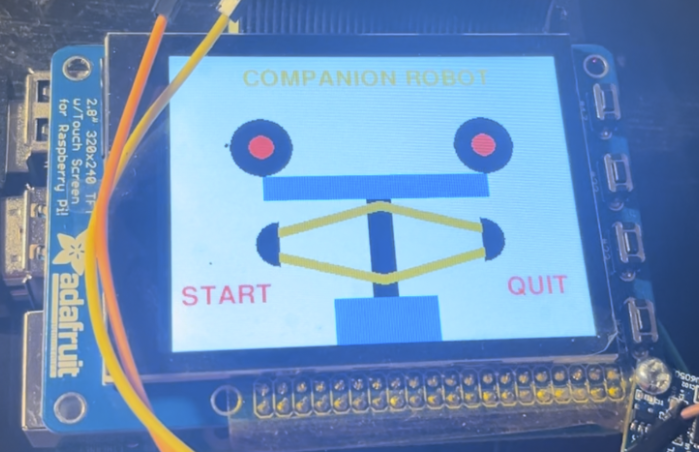

1. Entering Page:

This is the first page displayed when you start our “companion robot.” It features the title “Companion Robot” at the top and a cute image of our robot in the center. At the bottom, there are two buttons: Start and Quit. Clicking Quit turns off the robot. Clicking Start navigates to the Mode Choice Page.

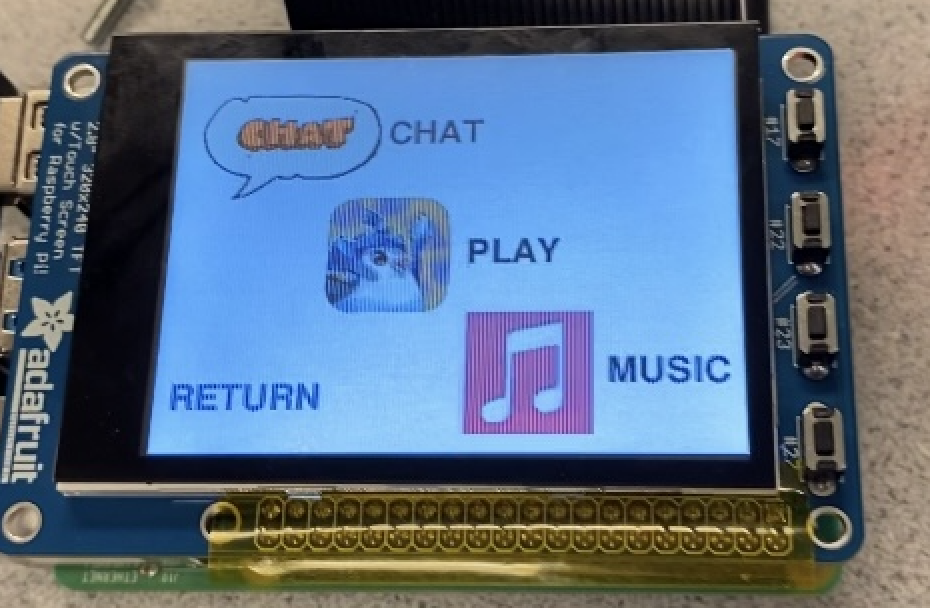

2. Mode Choice Page:

On this page, there are three buttons: Chat, Play, and Music. Clicking each button navigates to the corresponding page. There is also a Return button at the bottom left, which takes you back to the Entering Page.

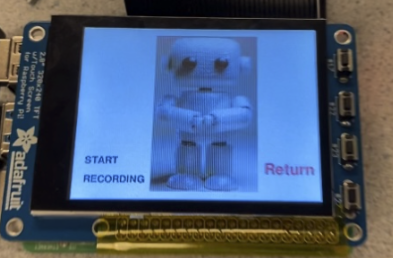

3. Chat Mode Page:

This page displays a human-like robot in the center, indicating interaction through speech. At the bottom, there are two buttons: Start Recording and Return. Clicking Return takes you back to the Mode Choice Page. Clicking Start Recording allows you to speak, changing the button to Stop Recording. During recording, the robot's right side shows two shrinking circles, indicating it is listening. After recording, the button changes to Dealing Prompts, signifying that the robot is processing and answering your question. The robot will respond in 3-5 seconds, and the button will revert to Start Recording for the next question. Click Return to go back to the Mode Choice Page.

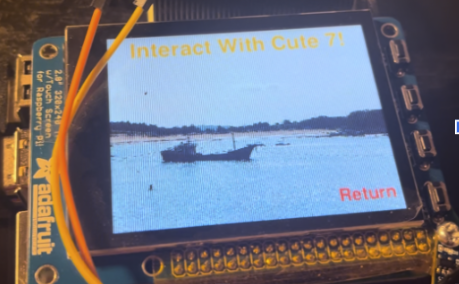

4. Play Mode Page:

In this mode, the top of the screen displays “Interact with Cute 7!” You can swipe left and right on the PiTFT screen to control the robot's tilt, and rotate the PiTFT to control the robot's eye movements. When you finish interacting, click Return to go back to the Mode Choice Page.

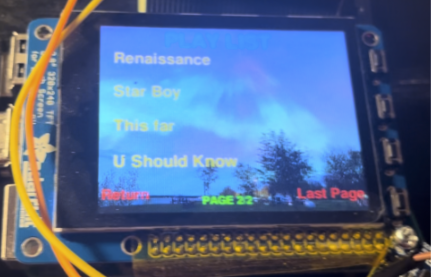

5. Music Mode Page:

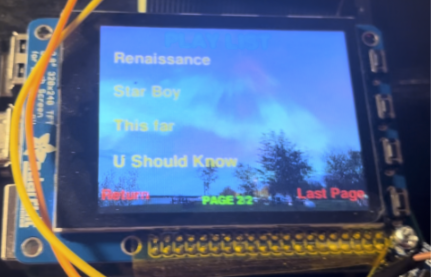

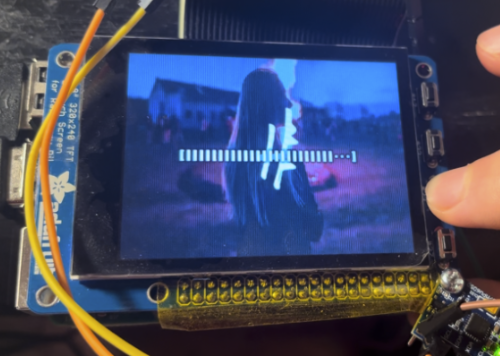

This page displays “Play List” at the top and features four song (video) options. At the bottom, there are Return, Page 1/2, and Next Page buttons. Clicking Next Page takes you to the second page of the music mode, which also has four songs (videos). The bottom of the second page has Return, Page 2/2, and Last Page buttons, allowing you to navigate back to the first page. In music mode, clicking a song plays it, and the video is displayed on the PiTFT. You can control playback using buttons on the right side of the PiTFT, including Pause/Start, Seek 10s, Rewind 10s, and Quit.

6. Rpi and Arduino Communication

To transmit commands from the Raspberry Pi to the Arduino, we use UART as the communication protocol. We connect the Tx (transmit) pin of the Raspberry Pi to the Rx (receive) pin of the Arduino and establish a common ground. This setup ensures proper pin connections and hardware integration between the devices. On the software side, we first enable UART on the Raspberry Pi. Next, we write code to ensure that both the Raspberry Pi and the Arduino communicate at the same baud rate. In our project, we set the serial communication to 9600 baud.

Testing

We initially conducted unit tests to ensure the functionality of individual components in our project. Before integrating with the Arduino to connect the entire robot's functionality, we wrote Python scripts to test each software function individually. We began by testing each servo's rotation angle to determine its threshold points. Next, we defined the actions of each servo as functions and tested these functions individually to ensure they could be called correctly. Since multiple action combinations are divided into various states, we adjusted each state to verify that the action combinations executed properly when called. Additionally, we frequently encountered pin connection drops or wiring issues when calling each function, which required regular adjustments and corrections. To ensure that the Arduino and Raspberry Pi could communicate effectively, we used a separate module to send messages and verify communication between the two devices. We also conducted unit tests for the IMU chip to ensure it was sending the correct information. After connecting the Raspberry Pi and Arduino, we tested our program as a whole to validate the integration and overall functionality of the system.

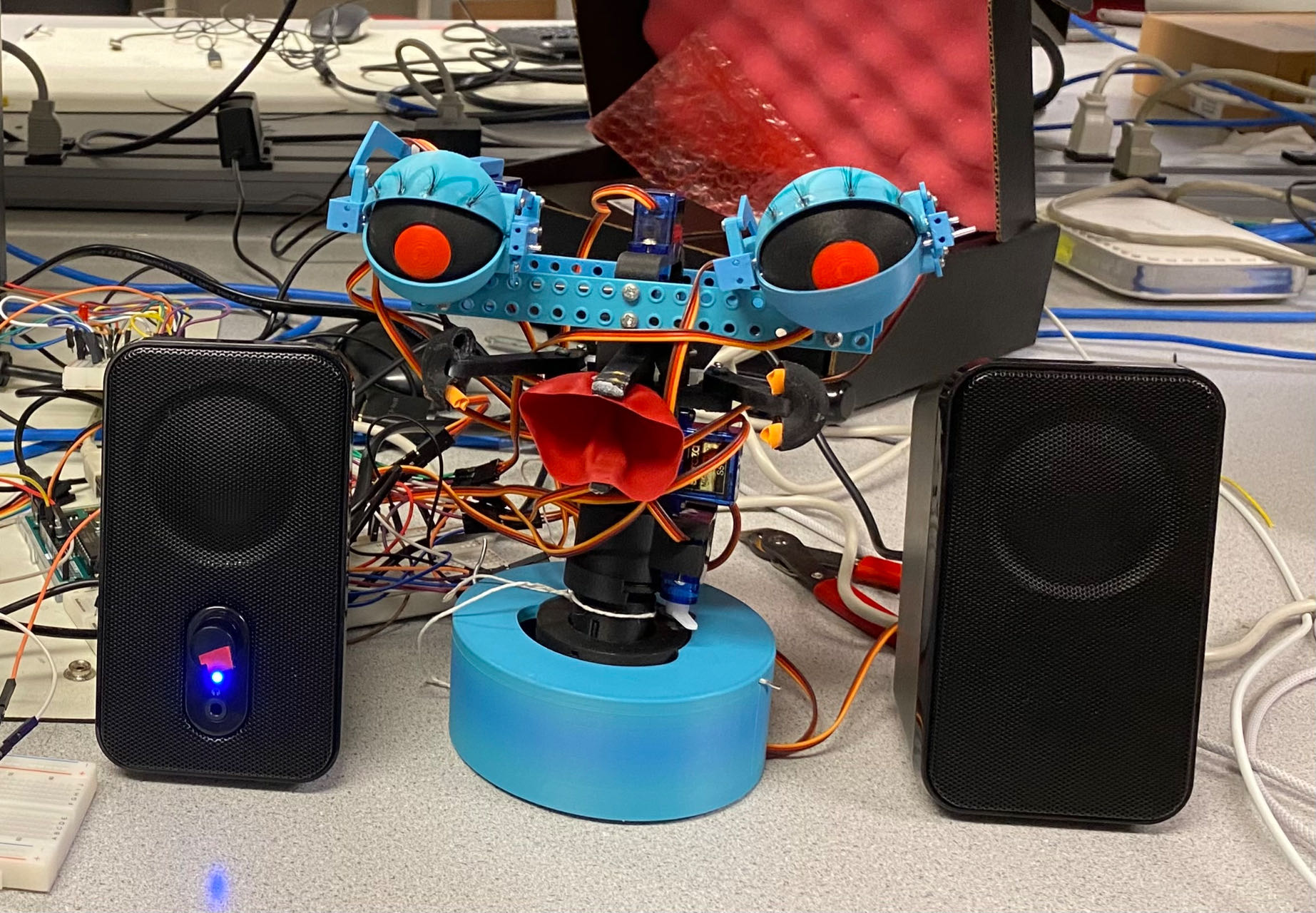

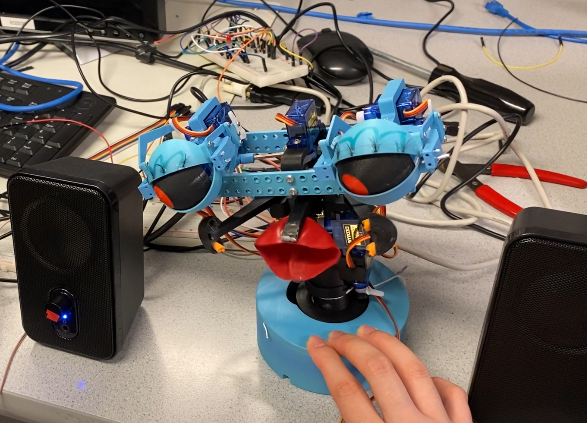

Gallery

Some Assembled Servo Components

Main Body Structure

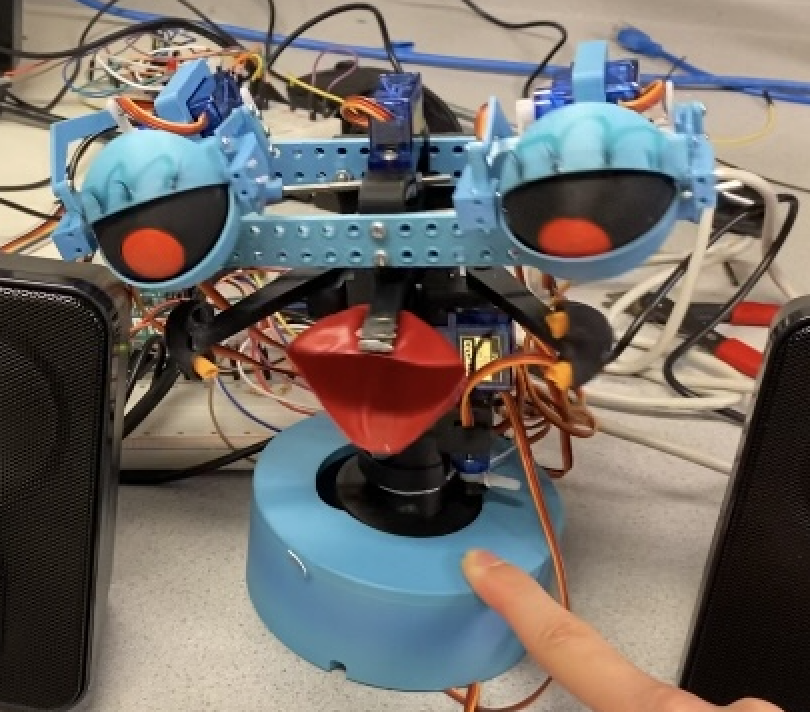

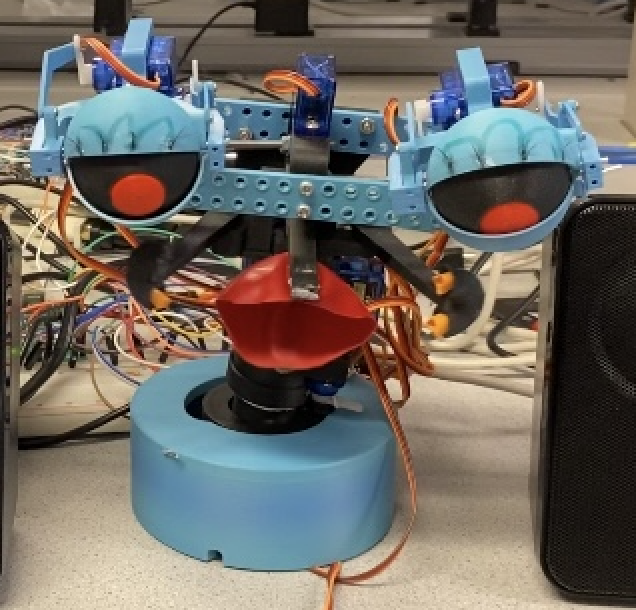

Robot Dancing Mode

Robot Listen Mode

Robot happy speaking Mode

Robot calm speaking Mode

User Interface: Entering Page

User Interface: Mode Choice Page

User Interface: Chat Mode Page

User Interface: Play Mode Page

User Interface: Music Mode Page1

User Interface: Music Mode Page2

User Interface: Music Mode -- Playing

Work Distributions

Kaixin Yang:

Robot functions software design; User Interface design; Arduino and Raspberry Pi Communication

Klora Wang:

Hardware configuration; Robot control with Arduino; Arduino and Raspberry Pi Communication

Xiangzhi Tong:

CAD model design; 3D Printing; Robot Mechanic Assembly

Parts List

- Raspberry Pi 4

- Arduino

- Speaker - 10$

- Microphone

- MPU6050

- filament 50$

- Servo 30$

- Various screws and connecting rods 10$

Total: $100

References

Llama3 DocumentGroq API Document

Arduino Reference

MPU6050 Document

Pigpio Library

R-Pi GPIO Document

Code Appendix

// main.py

import os

import pygame,pigame

from pygame.locals import *

import sys

import math

from time import sleep

from speech2text import SpeechController

from OurGPT_context import chat

from text2speech import text_to_speech, text_to_speech_with_bg, play_with_bg, play_bg_thread

from UART_test import arduino_communication

import subprocess

import re

import threading

from music_mode.video_control import control_video_thread

from play_mode.pitft_switch import handle_continuous_touch_thread

from play_mode.mpu import imu_thread

from detect_voice_amp import detect_voice_amplitude

touchscreen_device_path = '/dev/input/event5' # may change

os.putenv('SDL_VIDEODRV','fbcon')

os.putenv('SDL_FBDEV', '/dev/fb0')

os.putenv('SDL_MOUSEDRV','dummy')

os.putenv('SDL_MOUSEDEV','/dev/null')

os.putenv('DISPLAY','')

pygame.init()

pygame.mouse.set_visible(False)

pitft = pigame.PiTft()

# screen size

screen = pygame.display.set_mode((320, 240))

pygame.display.update()

font_super = pygame.font.Font(None, 40)

font_big = pygame.font.Font(None, 30)

font_middle = pygame.font.Font(None, 20)

font_middle_plus = pygame.font.Font(None, 25)

#color define

BLACK = (0, 0, 0)

WHITE = (255, 255, 255)

YELLOW = (255, 255, 255)

BLUE = (0, 121, 150) # blue

RED = (255, 0, 0) # red

GREEN = (0, 255, 0) # green

ORANGE = (255 , 165 , 0)

GRAY = (127, 127, 127)

def emotion_str_to_num(str_emotion):

if "happy" in str_emotion or "Happy" in str_emotion :

return 2

elif "sad" in str_emotion or "Sad" in str_emotion:

return 1

elif "calm" in str_emotion or "Calm" in str_emotion:

return 0

def draw_robot():

# draw eye

pygame.draw.circle(screen, BLACK, [70, 80], 25)

pygame.draw.circle(screen, BLACK, [250, 80], 25)

pygame.draw.circle(screen, RED, [70, 80], 10) # center_x, center_y, radius

pygame.draw.circle(screen, RED, [250, 80], 10) # center_x, center_y, radius

# draw nose

pygame.draw.rect(screen, BLUE, [70, 105, 180, 20])

# draw body

pygame.draw.rect(screen, BLACK, [150, 125, 20, 80])

radius = 18

#left one

center = (240, 160)

start_angle = 1.5*math.pi

stop_angle = 2.5*math.pi

points = []

steps = 50

for i in range(steps + 1):

angle = start_angle + (stop_angle - start_angle) * i / steps

x = center[0] + int(radius * math.cos(angle))

y = center[1] + int(radius * math.sin(angle))

points.append((x, y))

points.append(center)

pygame.draw.polygon(screen, BLACK, points)

#right one

center = (80, 160)

start_angle = 0.5*math.pi

stop_angle = 1.5*math.pi

points = []

steps = 50

for i in range(steps + 1):

angle = start_angle + (stop_angle - start_angle) * i / steps

x = center[0] + int(radius * math.cos(angle))

y = center[1] + int(radius * math.sin(angle))

points.append((x, y))

points.append(center)

pygame.draw.polygon(screen, BLACK, points)

points = [(80, 150), (160, 130), (240, 150), (160, 200), (80, 170)]

lines = [[(80, 150), (160, 130)], [(160, 130), (240, 150)], [(240, 170), (160,190)], [(160,190), (80,170)]]

line_width = 8 #

for line in lines:

pygame.draw.line(screen, ORANGE, line[0], line[1], line_width)

# draw wheel

pygame.draw.rect(screen, BLUE, [120, 205, 80, 40])

def draw_microphone(screen, is_recording, pulse_radius=10):

background = pygame.image.load('/home/pi/company_robot/photo/robot.jpg')

size = background.get_size()

background = pygame.transform.scale(background, (140, 220))

screen.blit(background, (100, 10))

if is_recording:

pygame.draw.circle(screen, GRAY, (255, 40), pulse_radius)

pygame.draw.circle(screen, GRAY, (275, 20), pulse_radius)

def draw_page1(screen):

# fill screen

screen.fill(WHITE)

draw_robot()

# text showing:

text_surface = font_big.render(f'COMPANION ROBOT', True, ORANGE)

rect = text_surface.get_rect(center=(160,20))

screen.blit(text_surface, rect)

text_surface = font_big.render(f'START', True, RED)

rect = text_surface.get_rect(center=(40,200))

screen.blit(text_surface, rect)

text_surface = font_big.render(f'QUIT', True, RED)

rect = text_surface.get_rect(center=(280,200))

screen.blit(text_surface, rect)

pygame.display.flip()

return "page1"

def draw_page2(screen): #choice page

screen.fill(WHITE)

background = pygame.image.load('/home/pi/company_robot/photo/chat.png')

background = pygame.transform.scale(background, (110, 70))

screen.blit(background, (10, 20))

text_surface = font_big.render(f'CHAT', True, BLACK)

rect = text_surface.get_rect(center=(150,50))

screen.blit(text_surface, rect)

background = pygame.image.load('/home/pi/company_robot/photo/play.png')

background = pygame.transform.scale(background, (70, 70))

screen.blit(background, (90, 90))

text_surface = font_big.render(f'PLAY', True, BLACK)

rect = text_surface.get_rect(center=(195,125))

screen.blit(text_surface, rect)

background = pygame.image.load('/home/pi/company_robot/photo/music_symbol.png')

background = pygame.transform.scale(background, (70, 70))

screen.blit(background, (170, 160))

text_surface = font_big.render(f'MUSIC', True, BLACK)

rect = text_surface.get_rect(center=(280,195))

screen.blit(text_surface, rect)

text_surface = font_big.render(f'RETURN', True, BLUE)

rect = text_surface.get_rect(center=(50,210))

screen.blit(text_surface, rect)

pygame.display.flip()

def draw_page3(screen, is_recording, dealing = False): #chat

screen.fill(WHITE)

draw_microphone(screen, is_recording)

text_surface = font_big.render(f'Return', True, RED)

rect = text_surface.get_rect(center=(280,200))

screen.blit(text_surface, rect)

if dealing:

text_surface = font_middle.render(f'Dealing', True, BLACK)

rect = text_surface.get_rect(center=(35,190))

screen.blit(text_surface, rect)

text_surface = font_middle.render(f'PROMPTS.....', True, BLACK)

rect = text_surface.get_rect(center=(53,215))

screen.blit(text_surface, rect)

pygame.display.flip()

return "page2"

if not is_recording:

text_surface = font_middle.render(f'START', True, BLACK)

else:

text_surface = font_middle.render(f'STOP', True, BLACK)

rect = text_surface.get_rect(center=(35,190))

screen.blit(text_surface, rect)

text_surface = font_middle.render(f'RECORDING', True, BLACK)

rect = text_surface.get_rect(center=(53,215))

screen.blit(text_surface, rect)

pygame.display.flip()

return "page3"

def draw_page4(screen): # play mode

screen.fill(WHITE)

background = pygame.image.load('/home/pi/company_robot/photo/IMG_6005.JPG')

background = pygame.transform.scale(background, (320, 240))

screen.blit(background, (0, 0))

text_surface = font_super.render(f'Interact With Cute 7!', True, ORANGE)

rect = text_surface.get_rect(center=(160,20))

screen.blit(text_surface, rect)

pygame.display.flip()

text_surface = font_big.render(f'Return', True, RED)

rect = text_surface.get_rect(center=(280,220))

screen.blit(text_surface, rect)

pygame.display.flip()

return "page4"

def draw_page5(screen): # music mode - music list1

screen.fill(WHITE)

background = pygame.image.load('/home/pi/company_robot/photo/IMG_5996.JPG')

background = pygame.transform.scale(background, (320, 240))

screen.blit(background, (0, 0))

text_surface = font_super.render(f'PLAY LIST', True, BLUE)

rect = text_surface.get_rect()

rect.topleft = (80, 5)

screen.blit(text_surface, rect)

text_surface = font_middle_plus.render(f'Return', True, RED)

rect = text_surface.get_rect(center=(30,220))

screen.blit(text_surface, rect)

text_surface = font_middle_plus.render(f'Next Page', True, RED)

rect = text_surface.get_rect(center=(275,220))

screen.blit(text_surface, rect)

text_surface = font_big.render(f'7 Years', True, ORANGE)

rect = text_surface.get_rect()

rect.topleft = (16, 35)

screen.blit(text_surface, rect)

text_surface = font_big.render(f'BABY DOLL', True, ORANGE)

rect = text_surface.get_rect()

rect.topleft = (16, 80)

screen.blit(text_surface, rect)

text_surface = font_big.render(f'Ferrari', True, ORANGE)

rect = text_surface.get_rect()

rect.topleft = (16, 125)

screen.blit(text_surface, rect)

text_surface = font_big.render(f'I Really WT Stay At Your House', True, ORANGE)

rect = text_surface.get_rect()

rect.topleft = (16, 170)

screen.blit(text_surface, rect)

text_surface = font_middle.render(f'PAGE 1/2', True, GREEN)

rect = text_surface.get_rect()

rect.topleft = (120, 220)

screen.blit(text_surface, rect)

pygame.display.flip()

return "page5"

def draw_page6(screen): # music mode - music list2

screen.fill(WHITE)

background = pygame.image.load('/home/pi/company_robot/photo/IMG_5997.JPG')

background = pygame.transform.scale(background, (320, 240))

screen.blit(background, (0, 0))

text_surface = font_middle_plus.render(f'Return', True, RED)

rect = text_surface.get_rect(center=(30,220))

screen.blit(text_surface, rect)

text_surface = font_middle_plus.render(f'Last Page', True, RED)

rect = text_surface.get_rect(center=(275,220))

screen.blit(text_surface, rect)

text_surface = font_super.render(f'PLAY LIST', True, BLUE)

rect = text_surface.get_rect()

rect.topleft = (80, 5)

screen.blit(text_surface, rect)

text_surface = font_big.render(f'Renaissance', True, ORANGE)

rect = text_surface.get_rect()

rect.topleft = (16, 35)

screen.blit(text_surface, rect)

text_surface = font_big.render(f'Star Boy', True, ORANGE)

rect = text_surface.get_rect()

rect.topleft = (16, 80)

screen.blit(text_surface, rect)

text_surface = font_big.render(f'This far', True, ORANGE)

rect = text_surface.get_rect()

rect.topleft = (16, 125)

screen.blit(text_surface, rect)

text_surface = font_big.render(f'U Should Know ', True, ORANGE)

rect = text_surface.get_rect()

rect.topleft = (16, 170)

screen.blit(text_surface, rect)

text_surface = font_middle.render(f'PAGE 2/2', True, GREEN)

rect = text_surface.get_rect()

rect.topleft = (120, 220)

screen.blit(text_surface, rect)

pygame.display.flip()

return "page6"

def main(pitft):

speech_controller = SpeechController()

history_message = []

is_recording_times=0

current_page = "page1"

is_recording = False

pulse_radius = 10

growing = True

draw_page1(screen)

consecutive_2x = []

consecutive_2y = []

play_with_bg("/home/pi/company_robot/text_wav/start.wav", '/home/pi/company_robot/bg_music/start.wav')

try:

while True:

pitft.update()

for event in pygame.event.get():

if(event.type is MOUSEBUTTONUP):

x,y = pygame.mouse.get_pos()

print(x,y)

if current_page == "page1":

if 240>= y >= 100 and (0 <= x <= 100 ):

current_page = "page2"

draw_page2(screen)

play_with_bg("/home/pi/company_robot/text_wav/page2.wav", '/home/pi/company_robot/bg_music/start.wav')

continue

elif 240>= y >= 170 and (240 <= x <= 320 ):

play_with_bg("/home/pi/company_robot/text_wav/quit_voice.wav", '/home/pi/company_robot/bg_music/start.wav')

pygame.quit()

quit()

if current_page == "page2":

if 90>= y >= 0:

current_page = "page3" #chat

draw_page3(screen, is_recording)

play_with_bg("/home/pi/company_robot/text_wav/page3.wav", '/home/pi/company_robot/bg_music/start.wav')

continue

elif 160>= y >= 90:

current_page = "page4" #play

draw_page4(screen)

play_with_bg("/home/pi/company_robot/text_wav/page4.wav", '/home/pi/company_robot/bg_music/start.wav')

imu_stop_event = threading.Event()

pitft_stop_event = threading.Event()

imu_rotate_thread = threading.Thread(target=imu_thread, args=(imu_stop_event, ))

pitft_switch_thread = threading.Thread(target=handle_continuous_touch_thread,

args=(touchscreen_device_path, pitft_stop_event))

imu_stop_event.clear()

imu_rotate_thread.start()

pitft_stop_event.clear()

pitft_switch_thread.start()

# breakpoint()

elif 240>= y >= 160 and (120 <= x <= 320 ):

current_page = "page5" #music

draw_page5(screen) # music mode

play_with_bg("/home/pi/company_robot/text_wav/page5.wav", '/home/pi/company_robot/bg_music/start.wav')

elif 240>= y >= 160 and (0 <= x <= 120 ):

current_page = "page1"

elif current_page == "page3":

if 240>= y >= 100 and (0 <= x <= 100 ):

is_recording = not is_recording

if is_recording:

speech_controller.start()

arduino_communication(3)

print("send listen to arduino")

else:

stop_flag = threading.Event()

sound_thread = threading.Thread(target=play_bg_thread, args=('/home/pi/company_robot/bg_music/reminder.mp3',stop_flag))

stop_flag.clear()

sound_thread.start()

draw_page3(screen, is_recording, dealing=True)

speech_controller.stop()

# breakpoint()

emotion, answer = chat(speech_controller.transcribed_texts, history_message)

print(f"emotion={emotion}")

emotion_num = emotion_str_to_num(emotion)

arduino_communication(emotion_num)

print(f"send {emotion} to arduino")

print(f"answer={answer}")

answer = re.sub(r"[?!]", "", answer)

stop_flag.set()

sound_thread.join()

# breakpoint()

detect_voice_flag = threading.Event()

detect_voice_thread = threading.Thread(target=detect_voice_amplitude, args=(detect_voice_flag, ))

detect_voice_flag.clear()

detect_voice_thread.start()

text_to_speech(answer)

detect_voice_flag.set()

detect_voice_thread.join()

print("finish speaking")

arduino_communication(4)

print("send nothing to arduino")

elif 240>= y >= 170 and (240 <= x <= 320 ):

current_page = "page2"

elif current_page == "page4": #play

#detect switch on pitft

# breakpoint()

if 240>= y >= 180 and (260 <= x <= 320 ):

current_page = "page2"

draw_page2(screen)

imu_stop_event.set()

imu_rotate_thread.join()

pitft_stop_event.set()

pitft_switch_thread.join()

break

else:

pass

elif current_page == "page5": #MUSIC MODE page1

if 50>= y >= 0:

arduino_communication(5)#robot dancing

print(f"send dancing to arduino")

stop_flag = threading.Event()

music_thread = threading.Thread(target=control_video_thread, args=(stop_flag, ))

stop_flag.clear()

music_thread.start()

subprocess.run(['bash', '/home/pi/company_robot/music_mode/start_video.sh',

'/home/pi/company_robot/video/7Years.mp4'], capture_output=True, text=True) # 7years

stop_flag.set()

music_thread.join()

arduino_communication(4)

print("send nothing to arduino")

elif 100>= y >= 50:

arduino_communication(5)#robot dancing

print(f"send dancing to arduino")

stop_flag = threading.Event()

music_thread = threading.Thread(target=control_video_thread, args=(stop_flag, ))

stop_flag.clear()

music_thread.start()

subprocess.run(['bash', '/home/pi/company_robot/music_mode/start_video.sh',

'/home/pi/company_robot/video/BABYDOLL.mp4'], capture_output=True, text=True) # BABYDOLL

stop_flag.set()

music_thread.join()

arduino_communication(4)

print("send nothing to arduino")

elif 150>= y >= 100:

arduino_communication(5)#robot dancing

print(f"send dancing to arduino")

stop_flag = threading.Event()

music_thread = threading.Thread(target=control_video_thread, args=(stop_flag, ))

stop_flag.clear()

music_thread.start()

subprocess.run(['bash', '/home/pi/company_robot/music_mode/start_video.sh',

'/home/pi/company_robot/video/Ferrari.mp4'], capture_output=True, text=True) # Ferrari

stop_flag.set()

music_thread.join()

arduino_communication(4)

print("send nothing to arduino")

elif 200>= y >= 150:

arduino_communication(5)#robot dancing

print(f"send dancing to arduino")

stop_flag = threading.Event()

music_thread = threading.Thread(target=control_video_thread, args=(stop_flag, ))

stop_flag.clear()

music_thread.start()

subprocess.run(['bash', '/home/pi/company_robot/music_mode/start_video.sh',

'/home/pi/company_robot/video/IReallyWantToStayAtYourHouse.mp4'], capture_output=True, text=True) # House

stop_flag.set()

music_thread.join()

arduino_communication(4)

print("send nothing to arduino")

elif 240>= y >= 210 and (240 <= x <= 320 ):

current_page = "page6" #go to list2

elif 240>= y >= 210 and (0 <= x <= 60 ):

current_page = "page2" #go to page2

elif current_page == "page6": #MUSIC MODE page2

if 50>= y >= 0:

arduino_communication(5)#robot dancing

print(f"send dancing to arduino")

stop_flag = threading.Event()

music_thread = threading.Thread(target=control_video_thread, args=(stop_flag, ))

stop_flag.clear()

music_thread.start()

subprocess.run(['bash', '/home/pi/company_robot/music_mode/start_video.sh',

'/home/pi/company_robot/video/Renaissance.mp4'], capture_output=True, text=True) # Rei

stop_flag.set()

music_thread.join()

arduino_communication(4)

print("send nothing to arduino")

elif 100>= y >= 50:

arduino_communication(5)#robot dancing

print(f"send dancing to arduino")

stop_flag = threading.Event()

music_thread = threading.Thread(target=control_video_thread, args=(stop_flag, ))

stop_flag.clear()

music_thread.start()

subprocess.run(['bash', '/home/pi/company_robot/music_mode/start_video.sh',

'/home/pi/company_robot/video/STARBOY.mp4'], capture_output=True, text=True) # STARBOY

stop_flag.set()

music_thread.join()

arduino_communication(4)

print("send nothing to arduino")

elif 150>= y >= 100:

arduino_communication(5)#robot dancing

print(f"send dancing to arduino")

stop_flag = threading.Event()

music_thread = threading.Thread(target=control_video_thread, args=(stop_flag, ))

stop_flag.clear()

music_thread.start()

subprocess.run(['bash', '/home/pi/company_robot/music_mode/start_video.sh',

'/home/pi/company_robot/video/Thisfar.mp4'], capture_output=True, text=True) # Thisfar

stop_flag.set()

music_thread.join()

arduino_communication(4)

print("send nothing to arduino")

elif 200>= y >= 150:

arduino_communication(5)#robot dancing

print(f"send dancing to arduino")

subprocess.run(['bash', '/home/pi/company_robot/music_mode/start_video.sh',

'/home/pi/company_robot/video/UShouldKnow.mp4'], capture_output=True, text=True) # USHOULDKNOW

arduino_communication(4)

print("send nothing to arduino")

elif 240>= y >= 210 and (240 <= x <= 320 ):

current_page = "page5" #go to list1

elif 240>= y >= 210 and (0 <= x <= 60 ):

current_page = "page2" #go to page2

if current_page == "page1":

draw_page1(screen)

elif current_page == "page2":

draw_page2(screen)

elif current_page == "page3":

draw_page3(screen, is_recording)

elif current_page == "page4":

draw_page4(screen)

elif current_page == "page5":

draw_page5(screen)

elif current_page == "page6":

draw_page6(screen)

if is_recording:

is_recording_times +=1

if growing:

pulse_radius += 1

if pulse_radius > 20:

growing = False

else:

pulse_radius -= 1

if pulse_radius < 10:

growing = True

if is_recording_times % 10 ==0 :

draw_microphone(screen, is_recording, pulse_radius)

pygame.display.flip()

except KeyboardInterrupt:

pass

finally:

del(pitft)

if __name__ == "__main__":

main(pitft)

//OurGPT_context.py

from groq import Groq

import os

emotion_instruction = ", The above is my input, what emotion do you think I am now? \

I only need you response me one word in the range of [happy, sad, calm]"

text_instruction = ", Imagine you are a companion robot and I'm your host, you should response\

like a companion robot, your response shouldn't be long, because I need to user\

speaker to output your voice, try your best to response < 20 words, precisely and short"

# history_message looks loke [{....},{....}]

def chat(text, history_message):

client = Groq(api_key=os.environ.get("GROQ_API_KEY"))

text = text[0]

messages1 = history_message.copy()

messages1.append( {

"role": "user",

"content": text + emotion_instruction

})

completion = client.chat.completions.create(

model="llama3-70b-8192",

messages = messages1,

temperature=1,

max_tokens=1024,

top_p=1,

stream=True,

stop=None,

)

emotion_answer=""

for chunk in completion:

content = chunk.choices[0].delta.content or ""

# print(content, end="")

emotion_answer += content

history_message.append( {

"role": "user",

"content": text + text_instruction

})

completion = client.chat.completions.create(

model="llama3-70b-8192",

messages = history_message,

temperature=1,

max_tokens=1024,

top_p=1,

stream=True,

stop=None,

)

text_answer = ""

for chunk in completion:

content = chunk.choices[0].delta.content or ""

# print(content, end="")

text_answer += content

history_message.append({"role": "assistant","content":text_answer})

return emotion_answer, text_answer

if __name__ == "__main__":

usr_promt = "I just lost my bag"

# usr_promt += instruction

usr_promt= [usr_promt]

breakpoint()

history_message = []

emotion, answer = chat(usr_promt, history_message)

print(f"emotion = {emotion}")

print(f"answer = {answer}")

breakpoint()

usr_promt = "What did I told you that I lost?"

# usr_promt += instruction

usr_promt= [usr_promt]

breakpoint()

emotion, answer = chat(usr_promt, history_message)

print(f"emotion = {emotion}")

print(f"answer = {answer}")

//speech2text.py

import assemblyai as aai

import threading

import queue

import pyaudio

import time

print(aai.__file__)

print(pyaudio.__file__)

def speech2text_setting(transcribed_texts):

aai.settings.api_key = ""

def on_open(session_opened: aai.RealtimeSessionOpened):

print("Session ID:", session_opened.session_id)

def on_data(transcript: aai.RealtimeTranscript):

if not transcript.text:

return

if isinstance(transcript, aai.RealtimeFinalTranscript):

transcribed_texts.append(transcript.text)

print(transcript.text, end="\r\n")

else:

print(transcript.text, end="\r")

def on_error(error: aai.RealtimeError):

print("An error occured:", error)

def on_close():

print("Closing Session")

print(transcribed_texts)

transcriber = aai.RealtimeTranscriber(

sample_rate=48_000,

on_data=on_data,

on_error=on_error,

on_open=on_open,

on_close=on_close,

)

return transcriber

def stream_speech2text(shut_flag, transcribed_texts):

transcriber = speech2text_setting(transcribed_texts)

transcriber.connect()

microphone_stream = aai.extras.MicrophoneStream(sample_rate=48000)

transcriber.stream(microphone_stream, shut_flag)

transcriber.close()

class SpeechController:

def __init__(self):

self.transcribed_texts = []

self.shut_flag = threading.Event()

self.thread = None

def start(self):

if self.thread is None or not self.thread.is_alive():

self.transcribed_texts = []

self.thread = threading.Thread(target=stream_speech2text, args=(self.shut_flag, self.transcribed_texts))

self.shut_flag.clear()

self.thread.start()

def stop(self):

self.shut_flag.set()

if self.thread is not None:

self.thread.join()

if __name__ == "__main__":

a = SpeechController()

time1 = time.time()

# breakpoint()

a.start()

while True:

if time.time() - time1 > 8:

a.stop()

quit()

//text2speech.py

from gtts import gTTS

import pygame

import os

import argparse

import subprocess

import threading

import time

def text_to_speech(text, lang='en'):

# text to speech

tts = gTTS(text=text, lang=lang, slow=False)

tts.save("GPTanswer.mp3") # save audio

# init pygame

pygame.init()

pygame.mixer.init()

pygame.mixer.music.load("GPTanswer.mp3")

pygame.mixer.music.set_volume(1.0)

pygame.mixer.music.play()

# wait for end

while pygame.mixer.music.get_busy() == True:

continue

def text_to_speech_with_bg(text, bg_music,lang='en'):

# text to speech

tts = gTTS(text=text, lang=lang, slow=False)

tts.save("GPTanswer.mp3") # save audio

subprocess.run(['ffmpeg', '-y','-i', 'GPTanswer.mp3', 'start.wav'], capture_output=True, text=True)

# init pygame

pygame.init()

pygame.mixer.init()

speak = pygame.mixer.Sound("/home/pi/company_robot/GPTanswer.wav")

background_music = pygame.mixer.Sound(bg_music)

background_music.set_volume(0.5)

speak.set_volume(1.0)

background_channel = background_music.play(-1)

speak_channel = speak.play()

# wait for end

while speak_channel.get_busy() == True:

continue

background_music.stop()

def play_with_bg(wav_path, bg_path):

pygame.init()

pygame.mixer.init()

speak = pygame.mixer.Sound(wav_path)

background_music = pygame.mixer.Sound(bg_path)

background_music.set_volume(0.5)

speak.set_volume(1.0)

background_channel = background_music.play(-1)

speak_channel = speak.play()

while speak_channel.get_busy() == True:

continue

background_music.stop()

def play_bg_thread(bg_path, stop_flag):

pygame.init()

pygame.mixer.init()

pygame.mixer.music.load(bg_path)

pygame.mixer.music.set_volume(1.0)

while not stop_flag.is_set():

pygame.mixer.music.play()

time.sleep(1)

if __name__ == "__main__":

text = "Hello, how are you today?"

text_to_speech(text)

text_to_speech_with_bg("Welcome to Group 7's companion Robot!\

My name is cute 7, Happy to serve you,\

Click Start to begin!", "/home/pi/company_robot/bg_music/start.wav")

//UART_test.py

import serial

import time

import argparse

def arduino_communication(num):

ser = serial.Serial('/dev/serial0', 9600) # Open serial port at 9600 baud

new_num = f"{num}\n"

ser.write(new_num.encode()) # Send a byte string

print("send arduino yes")

# time.sleep(1) # Wait for 1 second

if __name__ =="__main__":

arduino_communication(4)

//detect_voice_amp.py

import pyaudio

import numpy as np

import time

from UART_test import arduino_communication

import threading

FORMAT = pyaudio.paInt16

CHANNELS = 1

RATE = 48000

CHUNK = 48000

def detect_voice_amplitude(stop_event):

p = pyaudio.PyAudio()

stream = p.open(format=FORMAT,

channels=CHANNELS,

rate=RATE,

input=True,

frames_per_buffer=CHUNK)

print("Detecting amplitude...")

time_all = 0

k=1

time1=time.time()

while not stop_event.is_set():

data = stream.read(CHUNK)

audio_data = np.frombuffer(data, dtype=np.int16)

amplitude = np.abs(audio_data).mean()

time_all += time.time() - time1

if time_all / 0.1 > k:

k+=1

print("Amplitude:", amplitude)

if (amplitude < 10):

arduino_communication(12)

print("send 12(mouth small to arduino)")

elif (10< amplitude < 20):

arduino_communication(13)

print("send 13(mouth middle to arduino)")

elif ( amplitude > 20 ):

arduino_communication(14)

print("send 14(mouth large to arduino)")

if __name__ =="__main__":

detect_voice_flag = threading.Event()

detect_voice_thread = threading.Thread(target=detect_voice_amplitude, args=(detect_voice_flag, ))

detect_voice_flag.clear()

detect_voice_thread.start()

time1= time.time()

while True:

if time.time()-time1 > 10:

break

detect_voice_flag.set()

detect_voice_thread.join()

//music_mode/video_control.py

import RPi.GPIO as GPIO

import sys

import time

import subprocess

def control_video_thread(stop_flag):

GPIO.setmode(GPIO.BCM)

GPIO.setup(27, GPIO.IN)

GPIO.setup(23, GPIO.IN)

GPIO.setup(22, GPIO.IN)

GPIO.setup(17, GPIO.IN)

# different commmand send to fifo

pause_cmd1 = 'echo "pause" > /home/pi/company_robot/music_mode/fifo'

seek1_cmd2 = 'echo "seek 10" > /home/pi/company_robot/music_mode/fifo'

seek2_cmd3 = 'echo "seek -10" > /home/pi/company_robot/music_mode/fifo'

quit_cmd4 = 'echo "quit" > /home/pi/company_robot/music_mode/fifo'

#scan the input from GPIO

while not stop_flag.is_set():

time.sleep(0.2)

if GPIO.input(27) == GPIO.LOW:

subprocess.run(pause_cmd1, shell=True)

print("pause")

elif GPIO.input(23) == GPIO.LOW:

subprocess.run(seek1_cmd2, shell=True)

print("seek 10")

elif GPIO.input(22) == GPIO.LOW:

subprocess.run(seek2_cmd3, shell=True)

print("seek -10")

elif GPIO.input(17) == GPIO.LOW:

subprocess.run(quit_cmd4, shell=True)

print("quit")

break

//music_mode/start_video.sh

# play the video on the background

sudo mplayer -vo fbdev2:/dev/fb0 -input file=/home/pi/company_robot/music_mode/fifo -zoom -x 320 -y 240 /home/pi/company_robot/video/Lukas_Graham-7Years.mp4

sudo python /home/pi/company_robot/music_mode/video_control.py &

//play_mode/mpu.py

# for control eye

import smbus

import time

import numpy as np

from UART_test import arduino_communication

import threading

# device address和 I2C channel

DEVICE_ADDRESS = 0x68

bus = smbus.SMBus(1)

# initilize MPU

bus.write_byte_data(DEVICE_ADDRESS, 0x6B, 0)

def read_word(reg):

# read data

h = bus.read_byte_data(DEVICE_ADDRESS, reg)

l = bus.read_byte_data(DEVICE_ADDRESS, reg+1)

value = (h << 8) + l

return value

def read_word_2c(reg):

val = read_word(reg)

if (val >= 0x8000):

return -((65535 - val) + 1)

else:

return val

def get_y_rotation(x,y,z):

radians = np.arctan2(x, np.sqrt(y*y + z*z))

return -np.degrees(radians)

def get_x_rotation(x,y,z):

radians = np.arctan2(y, np.sqrt(x*x + z*z))

return np.degrees(radians)

def imu_thread(stop_event):

while not stop_event.is_set():

# calculate acceleration

accel_xout = read_word_2c(0x3b)

accel_yout = read_word_2c(0x3d)

accel_zout = read_word_2c(0x3f)

accel_xout_scaled = accel_xout / 16384.0

accel_yout_scaled = accel_yout / 16384.0

accel_zout_scaled = accel_zout / 16384.0

x_rotation = get_x_rotation(accel_xout_scaled, accel_yout_scaled, accel_zout_scaled)

y_rotation = get_y_rotation(accel_xout_scaled, accel_yout_scaled, accel_zout_scaled)

print("X rotation: ", x_rotation)

print("Y rotation: ", y_rotation)

if(x_rotation < -30):

arduino_communication(8)

print("sent 8(eye left) to arduino")

elif(x_rotation > 30):

arduino_communication(9)

print("sent 9(eye right) to arduino")

if(y_rotation < -30):

arduino_communication(7)

print("sent 7(eye up) to arduino")

elif(y_rotation > 30):

arduino_communication(6)

print("sent 6(eye down) to arduino")

time.sleep(1)

if __name__ == "__main__":

stop_event = threading.Event()

thread = threading.Thread(target=imu_thread, args=(stop_event,))

stop_event.clear()

thread.start()

time.sleep(10)

stop_event.set()

thread.join()

print("Thread end")

//play_mode/pitft_switch

import threading

from evdev import InputDevice, categorize, ecodes

import time

from UART_test import arduino_communication

touchscreen_device_path = '/dev/input/event5' # may change

def handle_continuous_touch_thread(device_path, stop_event):

dev = InputDevice(device_path)

start_y = None

end_y = None

is_touching = False

for event in dev.read_loop():

if stop_event.is_set():

break

if event.type == ecodes.EV_ABS:

absevent = categorize(event)

if event.code == ecodes.ABS_MT_POSITION_Y:

current_y = absevent.event.value

if not is_touching:

start_y = current_y

is_touching = True

else:

end_y = current_y

elif event.type == ecodes.EV_SYN:

if is_touching and start_y is not None and end_y is not None:

if end_y > start_y + 10:

print("Shift right")

arduino_communication(11)

print(f"send 11 (body right) to arduino")

elif end_y < start_y - 10:

print("Shift left")

arduino_communication(10)

print(f"send 11 (body left) to arduino")

start_y = None

end_y = None

is_touching = False

if __name__ == "__main__":

touchscreen_device_path = '/dev/input/event5' # may change

stop_event = threading.Event()

touch_thread = threading.Thread(target=handle_continuous_touch_thread, args=(touchscreen_device_path, stop_event))

touch_thread.start()

time.sleep(3)

stop_event.set()

touch_thread.join()

//robot_control_Final.py

#include

Servo mouse_servo_r, mouse_servo_c, eye_servo_rotation, body_servo_rotation, body_rotation_2;

Servo eyelids_left, eyelids_right, eyeball_right, eyeball_left, eyeball_right_in, eyeball_left_in;

unsigned long previousMillis_r = 0, previousMillis_c = 0, previousMillis_eye = 0, previousMillis_ini = 0;

unsigned long previousMillis_eyeball_left = 0, previousMillis_eyeball_right = 0;

unsigned long previousMillis_body = 0;

// eye_in_left

unsigned long previousMillis_eye_in_left = 0; // to store the last update time

long interval_eye_in_left = 20; // interval at which to update (milliseconds)

int pos_eye_in_left = 160; // Initial position of the servo

boolean increasing_eye_in_left = false; // Direction of movement

// eye_in_left

unsigned long previousMillis_eye_in_right = 0; // to store the last update time

long interval_eye_in_right = 20; // interval at which to update (milliseconds)

int pos_eye_in_right = 160; // Initial position of the servo

boolean increasing_eye_in_right = false; // Direction of movement

// mouse_r; // Create servo object

unsigned long previousMillis_mouse_r = 0; // to store the last update time

long interval_mouse_r = 4; // interval at which to update (milliseconds)

int pos_mouse_r = 90; // Initial position of the servo

boolean increasing_mouse_r = false; // Direction of movement

// body_2; // Create servo object

unsigned long previousMillis_body_2 = 0; // to store the last update time

long interval_body_2 = 5; // interval at which to update (milliseconds)

int pos_body_2 = 160; // Initial position of the servo

boolean increasing_body_2 = false; // Direction of movement

// mouse_c; // Create servo object

unsigned long previousMillis_mouse_c = 0; // to store the last update time

long interval_mouse_c = 2; // interval at which to update (milliseconds)

int pos_mouse_c = 160; // Initial position of the servo

boolean increasing_mouse_c = false; // Direction of movement

boolean increasing_ini = false; // Direction of movement

int pos_ini = 180;

const long interval_r = 15, interval_c = 15, interval_eye = 4;

const long interval_eyeball_left = 10, interval_eyeball_right = 10; // Faster intervals for eyeballs

long interval_body = 20; // Interval at which to update body servo position

int pos_m_r = 20, pos_m_c = 0, pos_eye = 90;

int pos_eyelids_left = 180, pos_eyelids_right = 180;

int pos_eyeball_left = 180, pos_eyeball_right = 0;

int pos_body = 120; // Initial position of the body servo

boolean increasing_r = true, increasing_c = true, increasing_eye = true;

boolean increasing_eyeball_left = false, increasing_eyeball_right = true; // Initial directions

boolean increasing_body = true; // Direction of body servo movement

void setup()

{

Serial.begin(9600);

// Attach servos to pins

mouse_servo_r.attach(10);

mouse_servo_c.attach(8);

eye_servo_rotation.attach(7);

eyelids_left.attach(6);

eyelids_right.attach(5); //

eyeball_left.attach(4);

eyeball_right.attach(3);

eyeball_right_in.attach(2);

eyeball_left_in.attach(9);

body_servo_rotation.attach(11);

body_rotation_2.attach(12);

eyeball_left.write(150);

eyeball_right.write(40);

eyeball_right_in.write(80); // 40

eyeball_left_in.write(140);

body_servo_rotation.write(50);

}

// happy 2 , sad 1 , calm 0 , nothing 4, listen 3, dancing 5, eye down 6, eye up 7, eye left 8, eye right 9,

// body left 10, body right 11, mouth small 12, mouth middle 13, mouth large 14

int state = 4;

int pre_state = 4;

int counter = 0;

static bool initialized = false;

void loop()

{

if (Serial.available() > 0)

{ // Check if data is coming to the serial port

String data = Serial.readStringUntil('\n'); // Read the data until a newline

Serial.println("Received:" + data);

state = data.toInt();

}

if (state == 1 || state == 2 || state == 0)

{

pre_state = state;

}

// static bool initialized = false;

if (initialized == false)

{

for (int i = 180; i >= 90; i--)

{

eyelids_left.write(i);

// mouse_servo_r.write(20);

eyelids_right.write(i - 90);

// body_rotation_2.write(200);

delay(40);

}

initialized = true;

}

else

{

// body_2_move();

if (state == 0 || (pre_state == 0 && (state == 12 || state == 13)))

{ // calm speaking

// body_2_move();

oscillateEyeServo();

// mouse_r_move(140, 20);

mouse_c_move();

eyeball_left.write(150);

eyeball_right.write(40);

eyeball_right_in.write(40); // 40

eyeball_left_in.write(140);

body_servo_rotation.write(50);

if (state == 12)

{

mouse_r_move(150, 30);

}

else if (state == 13)

{

mouse_r_move(150, 0);

}

else

{

mouse_r_move(150, 10);

}

}

else if (state == 1 || (pre_state == 1 && (state == 12 || state == 13)))

{

oscillateEyeServo();

mouse_r_move(140, 20);

mouse_c_move();

eyeball_right.write(40);

eyeball_left_in.write(140);

eyeball_right_in.write(40);

body_servo_rotation.write(50);

if (state == 12)

{

mouse_r_move(150, 30);

}

else if (state == 13)

{

mouse_r_move(150, 0);

}

else

{

mouse_r_move(150, 10);

}

}

else if (state == 2 || (pre_state == 2 && (state == 12 || state == 13)))

{ // happy

if (state == 12)

{

mouse_r_move(150, 30);

}

else if (state == 13)

{

mouse_r_move(150, 0);

}

else

{

mouse_r_move(150, 10);

}

// mouse_r_move(180, 5);

mouse_c_move();

oscillateBodyServo();

// handleContinuousServoMovement();

oscillateEyeServo();

}

else if (state == 3)

{

moveEyeballs();

eye_in_left_move();

eye_in_right_move();

body_servo_rotation.write(50);

oscillateEyeServo();

}

else if (state == 8)

{

eyeball_left.write(150);

eyeball_right.write(40);

// oscillateBodyServo();

oscillateEyeServo();

eyeball_left_in.write(80);

eyeball_right_in.write(0);

// body_2_move();

}

else if (state == 9)

{ // look right

body_servo_rotation.write(50);

eyeball_left.write(150);

eyeball_right.write(40);

oscillateEyeServo();

eyeball_left_in.write(200); // 200

eyeball_right_in.write(80);

}

else if (state == 6)

{ // loop up

body_servo_rotation.write(50);

eyeball_left.write(0); // up

eyeball_right.write(0); // up

eyeball_left_in.write(140);

eyeball_right_in.write(40);

oscillateEyeServo();

}

else if (state == 7)

{ // loop down

eyeball_left.write(90); // down

eyeball_right.write(70); // down

eyeball_left_in.write(140);

eyeball_right_in.write(40);

oscillateEyeServo();

body_servo_rotation.write(50);

}

else if (state == 5)

{ // play music

eyeball_left.write(150);

eyeball_right.write(40);

eye_in_left_move();

eye_in_right_move();

mouse_c_move();

oscillateBodyServo();

oscillateEyeServo();

mouse_r_move(150, 0);

}

else if (state == 11)

{ // left

body_servo_rotation.write(50);

}

else if (state == 10)

{ // right

body_servo_rotation.write(120);

}

else

{ // do nothing

eyeball_left.write(150);

eyeball_right.write(40);

eyeball_right_in.write(40);

eyeball_left_in.write(140);

// body_2_move();

body_servo_rotation.write(50);

}

}

}

void ini_motion()

{

unsigned long currentMillis = millis();

if (currentMillis - previousMillis_ini >= 5)

{

previousMillis_ini = currentMillis;

if (increasing_ini)

{

pos_ini++;

if (pos_ini >= 180)

{

}

}

else

{

pos_ini--;

if (pos_ini <= 0)

{ // Change direction at the lower bound

increasing_ini = true;

delay(2000);

}

}

mouse_servo_r.write(pos_ini); // Set new position

}

}

void oscillateEyeServo()

{

unsigned long currentMillis = millis();

if (currentMillis - previousMillis_eye >= interval_eye)

{

previousMillis_eye = currentMillis;

// Update the position of the eye servo

if (increasing_eye)

{

pos_eye--;

if (pos_eye <= 90)

{

increasing_eye = false; // Change direction at the lower bound

}

}

else

{

pos_eye++;

if (pos_eye >= 230)

{

increasing_eye = true; // Change direction at the upper bound

}

}

eye_servo_rotation.write(pos_eye); // Set new position

}

}

void moveEyeballs()

{

unsigned long currentMillis = millis();

if (currentMillis - previousMillis_eyeball_left >= interval_eyeball_left)

{

previousMillis_eyeball_left = currentMillis;

pos_eyeball_left = updateServoPosition(pos_eyeball_left, 220, 140, increasing_eyeball_left); // 140 50

eyeball_left.write(pos_eyeball_left);

}

eyeball_left.write(90);

eyeball_right.write(70);

if (currentMillis - previousMillis_eyeball_right >= interval_eyeball_right)

{

previousMillis_eyeball_right = currentMillis;

pos_eyeball_right = updateServoPosition(pos_eyeball_right, 70, 0, increasing_eyeball_right);

eyeball_right.write(pos_eyeball_right);

}

}

int updateServoPosition(int pos, int max_pos, int min_pos, boolean &increasing)

{

if (increasing)

{

pos++;

if (pos >= max_pos)

increasing = false;

}

else

{

pos--;

if (pos <= min_pos)

increasing = true;

}

return pos;

}

void mouse_r_move(int max, int min)

{ // new--------------------------------------------------------------------------------------------

unsigned long currentMillis = millis();

if (state == 5 || state == 2 || pre_state == 2 || pre_state == 5)

{ // play music

interval_mouse_r = 2;

}

else if (state == 0)

{

interval_mouse_r = 4;

}

else if (state == 1 || pre_state == 1)

{

interval_mouse_r = 5;

}

else

interval_mouse_r = 4;

if (currentMillis - previousMillis_mouse_r >= interval_mouse_r)

{

previousMillis_mouse_r = currentMillis;

// Update the position of the servo

if (increasing_mouse_r)

{

pos_mouse_r++;

if (pos_mouse_r >= max)

{ // Change direction at the upper bound zhangxiao

// if (pos_mouse_r >= 120) {

increasing_mouse_r = false;

}

}

else

{

pos_mouse_r--;

if (pos_mouse_r <= min)

{ // Change direction at the lower bound zhangda

// if (pos_mouse_r <= 0) {

increasing_mouse_r = true;

}

}

mouse_servo_r.write(pos_mouse_r); // Set new position

}

}

void mouse_c_move()

{ // new--------------------------------------------------------------------------------------------

unsigned long currentMillis = millis();

if (currentMillis - previousMillis_mouse_c >= interval_mouse_c)

{

previousMillis_mouse_c = currentMillis;

// Update the position of the servo

if (increasing_mouse_c)

{

pos_mouse_c++;

if (pos_mouse_c >= 160)

{ // Change direction at the upper bound

increasing_mouse_c = false;

}

}

else

{

pos_mouse_c--;

if (pos_mouse_c <= 20)

{ // Change direction at the lower bound

increasing_mouse_c = true;

}

}

mouse_servo_c.write(pos_mouse_r); // Set new position

}

}

void body_2_move()

{ // new--------------------------------------------------------------------------------------------

unsigned long currentMillis = millis();

if (currentMillis - previousMillis_body_2 >= interval_body_2)

{

previousMillis_body_2 = currentMillis;

// Update the position of the servo

if (increasing_body_2)

{

pos_body_2++;

if (pos_body_2 >= 160)

{ // Change direction at the upper bound

increasing_body_2 = false;

}

}

else

{

pos_body_2--;

if (pos_body_2 <= 0)

{ // Change direction at the lower bound

increasing_body_2 = true;

}

}

body_rotation_2.write(pos_body_2); // Set new position

}

}

void oscillateBodyServo()

{

unsigned long currentMillis = millis();

if (state == 5)

{ // play music

interval_body = 20;

}

else

interval_body = 10;

if (currentMillis - previousMillis_body >= interval_body)

{

previousMillis_body = currentMillis;

// Update the position of the body servo

if (increasing_body)

{

pos_body--;

if (pos_body <= 50)

{

increasing_body = false; // Change direction at the lower bound

}

}

else

{

pos_body++;

if (pos_body >= 110)

{

increasing_body = true; // Change direction at the upper bound

}

}

body_servo_rotation.write(pos_body); // Set new position

}

}

void eye_in_left_move()

{

unsigned long currentMillis = millis();

if (state == 5)

{ // play music

interval_eye_in_left = 10;

}

else

interval_eye_in_left = 20;

if (currentMillis - previousMillis_eye_in_left >= interval_eye_in_left)

{

previousMillis_eye_in_left = currentMillis;

// Update the position of the servo

if (increasing_eye_in_left)

{

pos_eye_in_left++;

if (pos_eye_in_left >= 200)

{ // Change direction at the upper bound //200

increasing_eye_in_left = false;

}

}

else

{

pos_eye_in_left--;

if (pos_eye_in_left <= 20)

{ // Change direction at the lower bound //20

increasing_eye_in_left = true;

}

}

eyeball_left_in.write(pos_eye_in_left); // Set new position

}

}

void eye_in_right_move()

{

unsigned long currentMillis = millis();

if (state == 5)

{ // play music

interval_eye_in_right = 10;

}

else

interval_eye_in_right = 20;

if (currentMillis - previousMillis_eye_in_right >= interval_eye_in_right)

{

previousMillis_eye_in_right = currentMillis;

// Update the position of the servo

if (increasing_eye_in_right)

{

pos_eye_in_right++;

if (pos_eye_in_right >= 80)

{ // Change direction at the upper bound///look to the right

increasing_eye_in_right = false;

}

}

else

{

pos_eye_in_right--;

if (pos_eye_in_right <= 0)

{ // Change direction at the lower bound

increasing_eye_in_right = true;

}

}

eyeball_right_in.write(pos_eye_in_right); // Set new position

}

}