Objective

The goal of this project was to design a robot that would pick up food from a charcuterie board and bring it back to the user. CharcuterPi makes retrieving food easy and convenient. Using computer vision, the system rotates the charcuterie board and the robot drives over to pick up the food.

Demo Video

Introduction

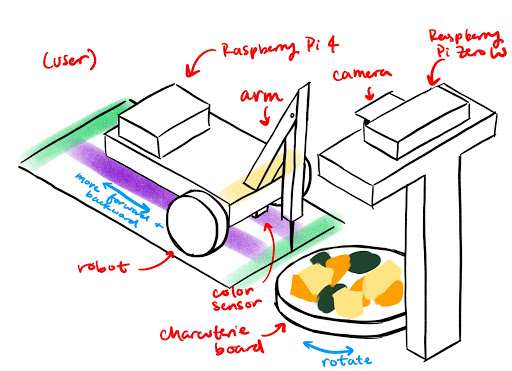

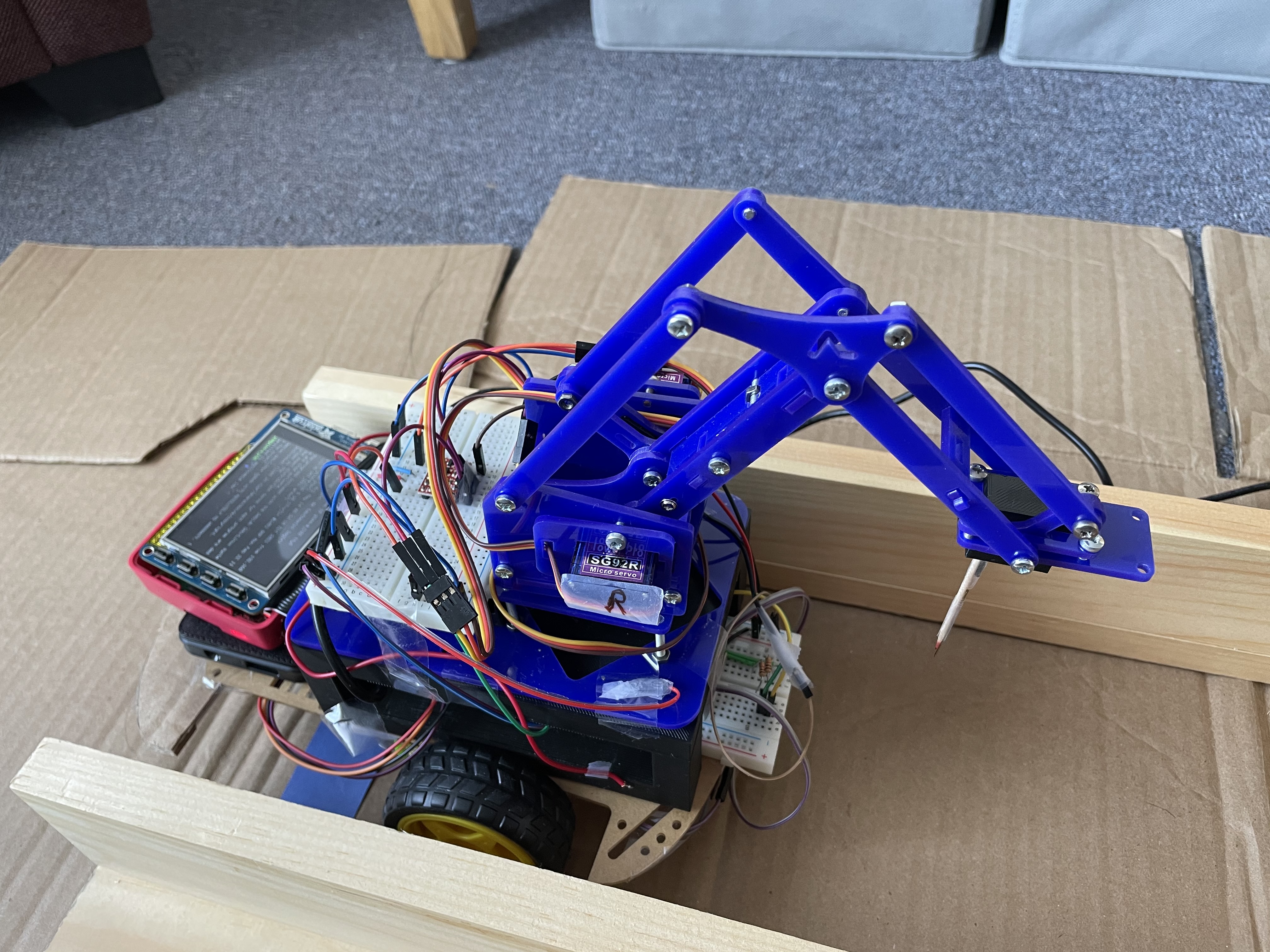

For this project, we designed a system that has a robot retrieve food for a user. We had three different food items placed on a rotating charcuterie board and a robot that could drive between the user and the board. The goal of this project was to have the robot retrieve the food without the need for the user to get up. We used a Raspberry Pi Camera mounted above the charcuterie board, a Raspberry Pi Zero W, a servo motor, and OpenCV to rotate the charcuterie board. The board was rotated such that the desired food item was aligned with the robot. The robot was built using the Lab 3 parts, a color sensor, and a robot arm consisting of two servo motors. The Raspberry Pi 4 and piTFT were mounted on the robot, so the user could choose which food item they wanted by pressing a button. The Raspberry Pi 4 and the Raspberry Pi Zero W communicated with each other over WiFi using the Python Socket library. The robot used the color sensor to determine when to stop (i.e. when it reached the user or the board). We used inverse kinematics to move the robot arm to pick up the food on the charcuterie board.

Design and Testing

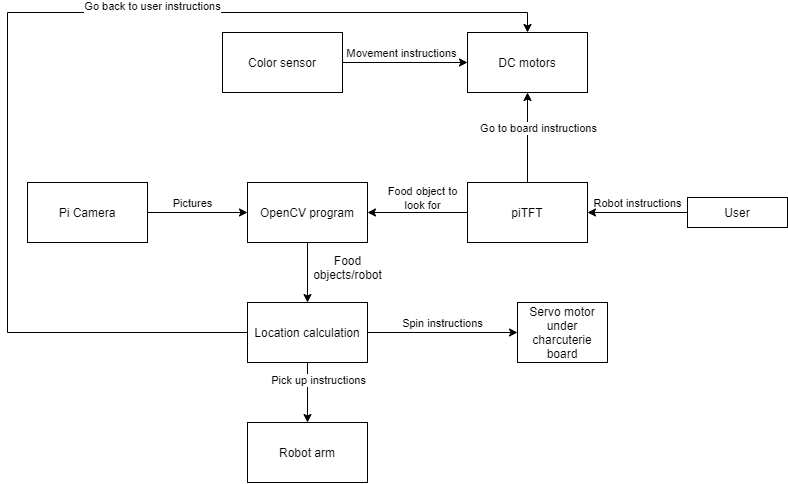

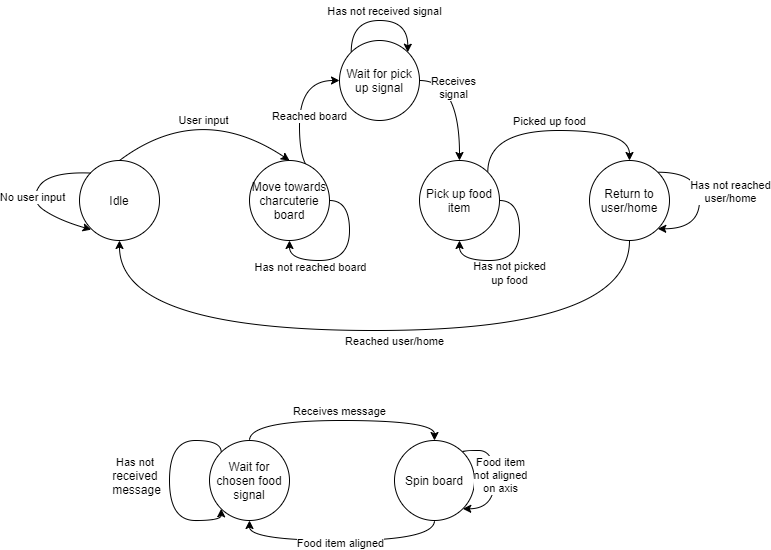

Overall System Design

The overall design of the system was to have the user choose a food for the robot to pick up. The robot would then tell the charcuterie which food it wanted, and the board would spin to align that food along the axis parallel to the track. The robot would then drive over to the board and use the robot arm to pick up the food and bring it back to the user.

Charcuterie Board

Hardware Design

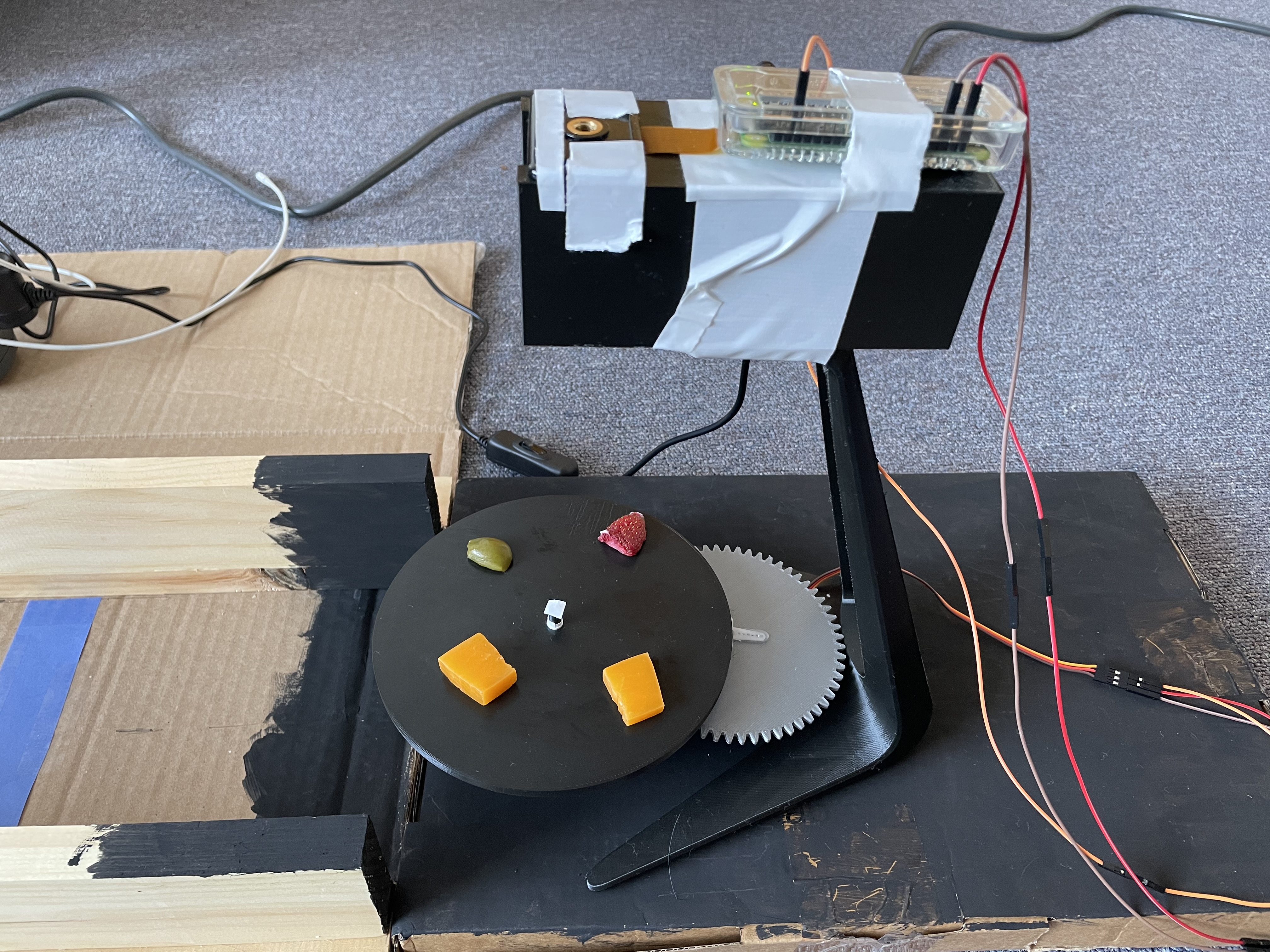

The hardware for the charcuterie board setup consisted of the Pi Camera, an SG90 servo motor, the Raspberry Pi Zero W, a 3D printed rotating board, and a 3D printed stand. The Pi Camera and Raspberry Pi Zero W were mounted on top of the 3D printed stand such that the camera was overlooking the board.

The rotating board consisted of two gears, the board itself, and a base with a space for the servo motor and an axle for the gears. Since the servo motor is only capable of spinning 180°, we designed the gears to be in a 2-1 ratio. This meant that the gear attached to the servo motor had twice as many teeth as the gear attached to the board. This would allow us to get a full 360° range of motion. The gear that was attached to the board sat on an axle that protruded from the base. When we printed the base, the axle was slightly too narrow, but this was fixed by simply wrapping some tape around the axle to increase its diameter. The servo motor was connected to the Raspberry Pi Zero W. It was connected to the 5V pin, ground, and GPIO19 on the Raspberry Pi Zero W. GPIO19 was used to control the PWM signal for the motor while the 5V pin and ground were used to power the servo.

The camera setup consisted of the Pi Camera, the Raspberry Pi Zero W, and a 3D printed stand. Both the Pi Camera and the Raspberry Pi Zero W were mounted on top of the stand using duct tape. The camera was positioned such that it overlooked the charcuterie board while the Raspberry Pi Zero W was simply mounted behind it. We had to mount the Raspberry Pi Zero W with the Pi Camera because the cable connecting the camera and the Pi was too short to have the Pi elsewhere.

Software Design

The software for the charcuterie board setup consisted of OpenCV, the piServoCtl library, and the Python socket library. We used OpenCV to distinguish between different food items. The piServoCtl library was used to control the servo motor that spun the board, and the Python socket library was used to communicate between both Raspberry Pis.

The Pi Camera took photos of the board while the program was running. We set the camera shutter speed to the exposure speed to make the images more consistent. In addition, we set the camera iso to 200 which is good for daytime lighting.

On the board, we had cheese, strawberries, and olives. Since each food item we had on the board was a different color, we used OpenCV to color mask based on the chosen food item. For example, if the user chose cheese, we would mask the color yellow on the image taken by the Pi Camera to find the cheese on the board.

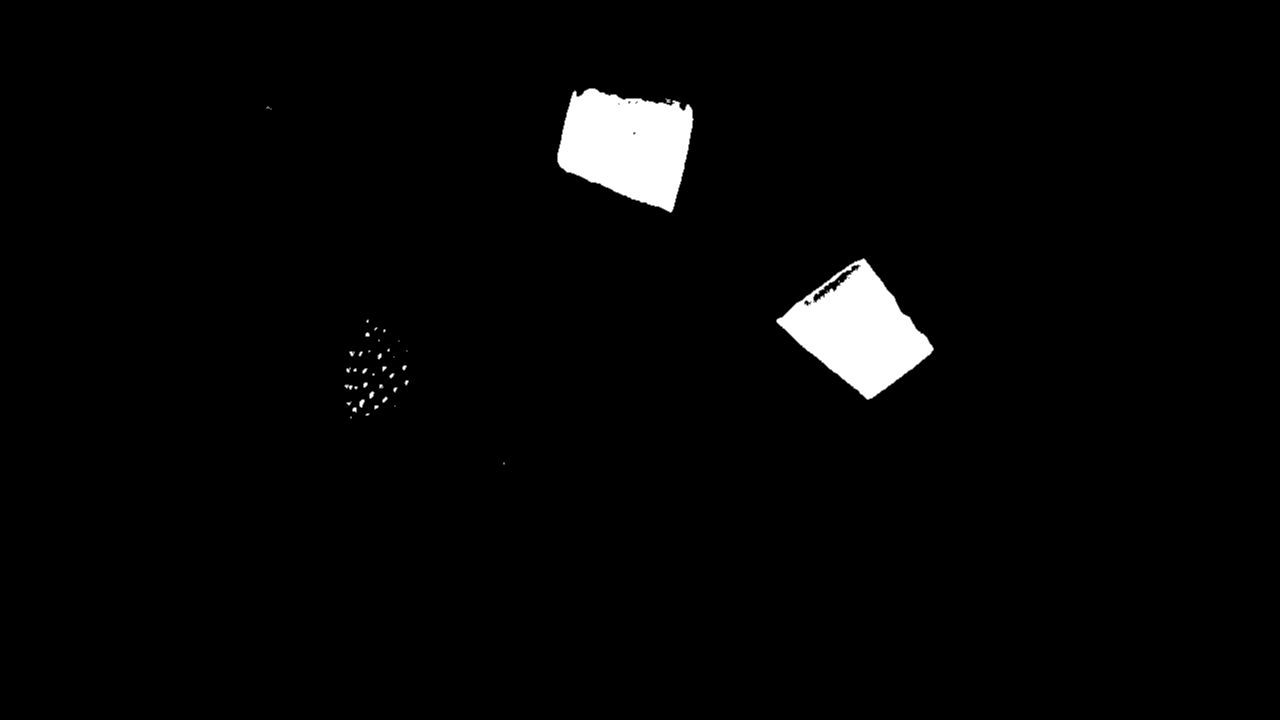

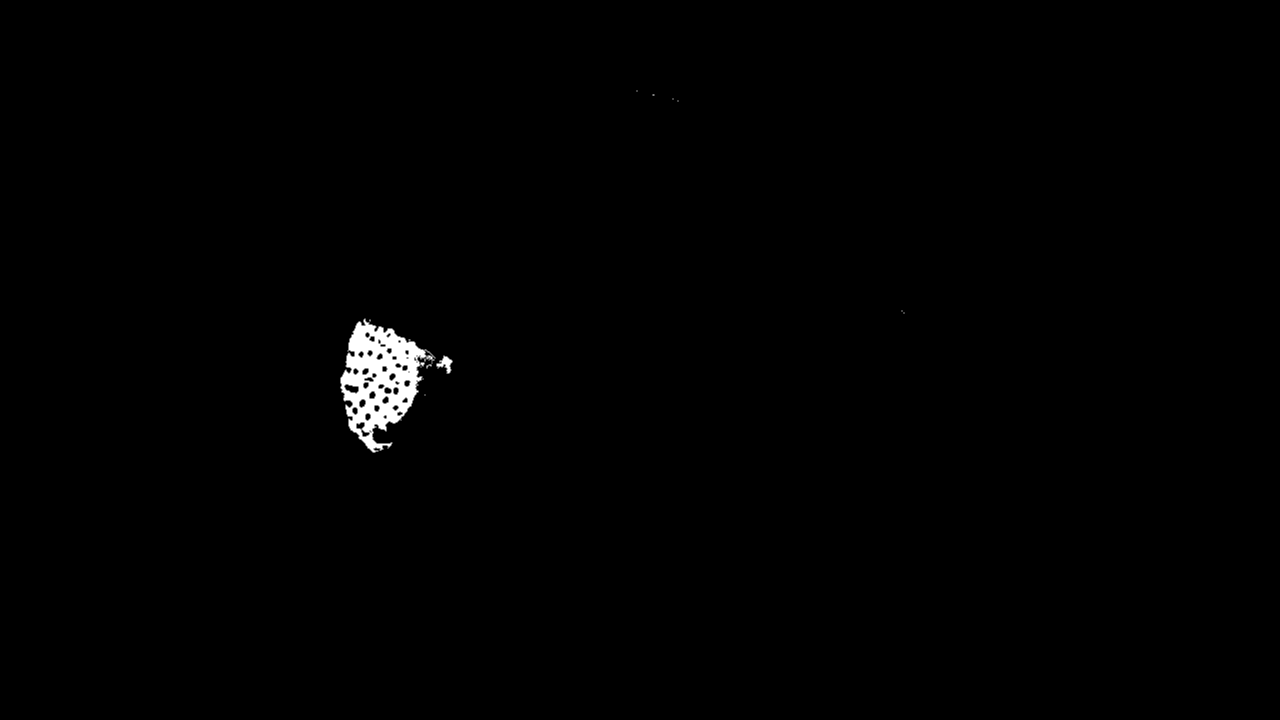

The color masking was done in the getTargetAngle function in move.py. We defined upper and lower HSV bounds for each color we wanted to mask. For example, the red color thresholds were [165, 100, 90] to [179, 255, 255]. Then, we converted the image taken by the Pi Camera from RGB to HSV and applied the color mask by using the function cv2.inRange which took in an image and lower and upper color bounds and returned a black and white masked image.

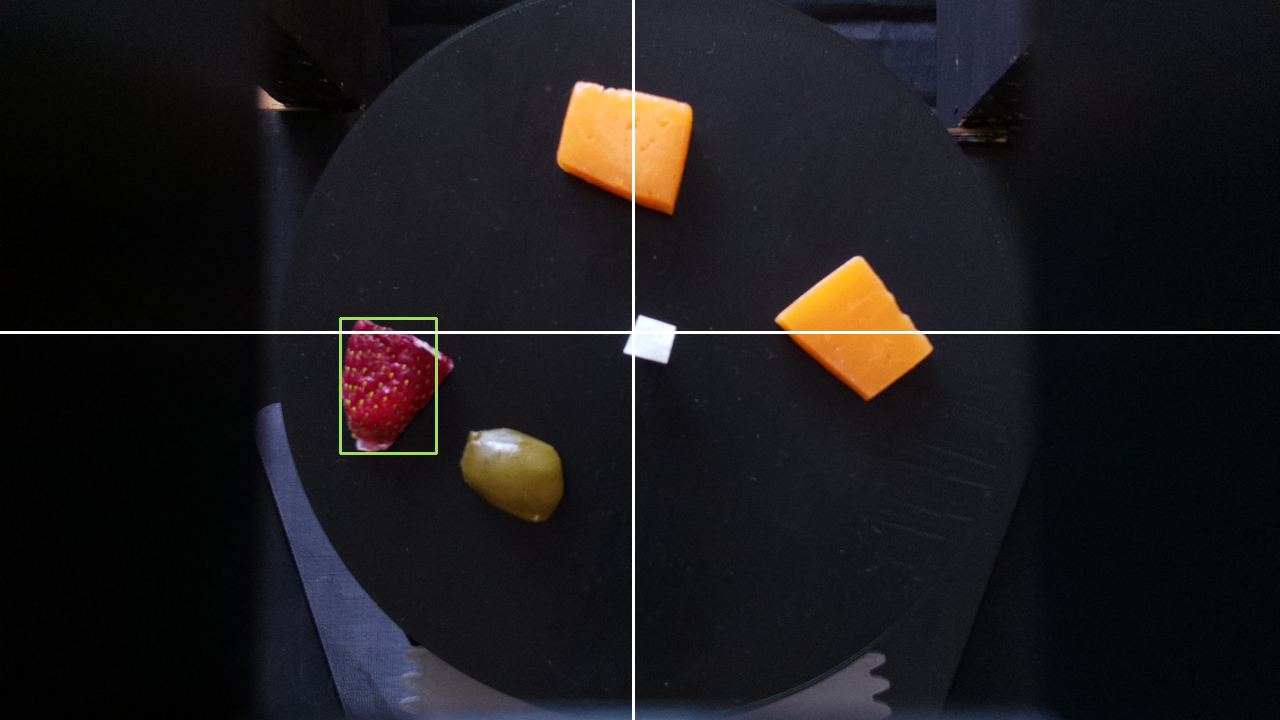

After color masking the image, we applied a blur to the masked image to reduce any noise in the image. Then, we found the edges in the image by using the cv2.Canny function. Using these edges, we were able to find the contours in the image. Then, for each contour in the list of contours, we used the cv2.approxPolyDP function to approximate the contour shape to a polygon. This would help reduce unnecessary vertices in the contour. Next, we drew bounding rectangles over each contour. If the area of the rectangle was less than 625 pixels, it was discarded. This was to reduce error and noise that could have occured with the color masking. Using an area bound helped ensure that rectangles were only drawn around the desired food items since the area of the food items was greater than 625 pixels. For example, the strawberry seeds are yellow in color, so they get picked up when we mask the cheese. However, since the area of each seed is not greater than 625 pixels, they are not included. We maintained a list of rectangles where each rectangle represented one food object. This list was recreated every time a new image was taken and a new color mask was applied. For each rectangle that was appended to the list, we also found the minimum enclosing circle and added the center of the circle to another list. This was to determine where the center of the food item was. We returned the list of centers. All of the contour logic was done in the easyContours function in easyContours.py.

Once we had a list of centers, we chose one of the entries at random and calculated what angle the board needed to move to such that the food was aligned with the positive y-axis. We did so by calling the calculateAngle function in find.py. This function takes in the x distance between the center of the board and the center of the food, the y distance between the center of the board and the center of the food, as well as the current position of the board (i.e. the angle the servo motor is at multiplied by 2). It then checks which quadrant the food is in based on the x and y distances and uses math.atan to determine theta, which is the arctangent. The quadrants are determined by the axes drawn in the figure above.

Using theta, we find the target angle the servo needs to move to by calculating the difference between the current angle and theta plus any offset needed based on the determined quadrant. For example, if the food was in the third quadrant, the target angle was current angle - (180 + theta) because we needed to compensate for how many degrees off the negative x-axis was from the positive x-axis. This is because the arctangent returns the angle between the point and the axis corresponding to its quadrant. In other words, the first quadrant corresponds to the positive x-axis, the second quadrant corresponds to the negative y-axis, and so on. The offset for each axis is based off of the angle between it and the positive x-axis, so the negative y-axis has a 90° offset. In addition, if current angle < theta + offset, then target angle is 360 - (current angle - (theta + offset)). This is to ensure we do not return a negative angle.

After we find the target angle, we rotate the servo using a drive function. This function simply checks whether or not the current angle is less than or greater than the target angle and increments or decrements the current angle accordingly and moves the servo using the piServoCtl library. This library uses hardware PWM to rotate the motor. Specifically, we used the servo.write function. This function takes in an angle and moves the servo object to that angle. We slowly incremented the angle with 0.5 second delays between each increment to ensure the table moved smoothly and did not whip around too quickly. We also had to normalize the target angle because the servo motor seemed to spin slightly more than 180°. To account for this inaccuracy, we multiplied the target angle by 172/180 before moving the servo. The drive function returned the current angle of the servo motor after it was done moving. This was so that we could keep track of the position of the board.

The Python socket library was used to communicate between the two Raspberry Pis and is explained later.

Testing

We tested the charcuterie board functionality by running the program several times. We had several different Python scripts that each corresponded to different parts of the program. As a result, we were able to test different components separately before testing everything together. This also made it easier to debug because we could test different parts in isolation.

First, we tested the color masking. We did this by having the Pi Camera take an image and using OpenCV to mask the image and display the masked image. This helped us see how well the masking was working and whether or not we needed to adjust the boundaries. We used Paint to find the HSV values for the colors in the image. Since OpenCV and Paint have slightly different HSV ranges, we scaled them to fit the OpenCV ranges. This part was very finnicky, as different lighting conditions could affect the coloring in the images.

Once we had the basic color masking working, we tested driving the servo motor. We tested this by placing an object indicator on the board and having the board drive to a specified angle. Based on where the object indicator ended up, we could see how accurate the spinning was. This portion went very well, and we did not have any issues with the servo moving except for the fact that it seemed to spin slightly more than 180°, which was fixed by normalizing the angle.

Finally, we tested finding the target angle and moving to it. This part was the most time consuming because it involved integrating all of the previous parts, as well as testing new functionality. In order to test this, every time we modified the image or drew something on it, we displayed it. This way we could see what the program thought it should be doing at every step. We also printed out the quadrant, coordinates of the food, and the calculated target angle. We ran into several issues while testing this. Since we did not yet have the food we were going to use in the demo, we substituted with sponges. In addition, since we were testing at night, the lighting was too dark. As a result, we used a flashlight to add light; however, this introduced some inconsistencies with the lighting simply due to human error. Finally, we ran into issues when drawing the rectangles around the food objects since sometimes there was a lot of noise in the masked image. This was solved by adding a blur to the image and only drawing rectangles over contours with a large enough area.

Robot

Hardware Design

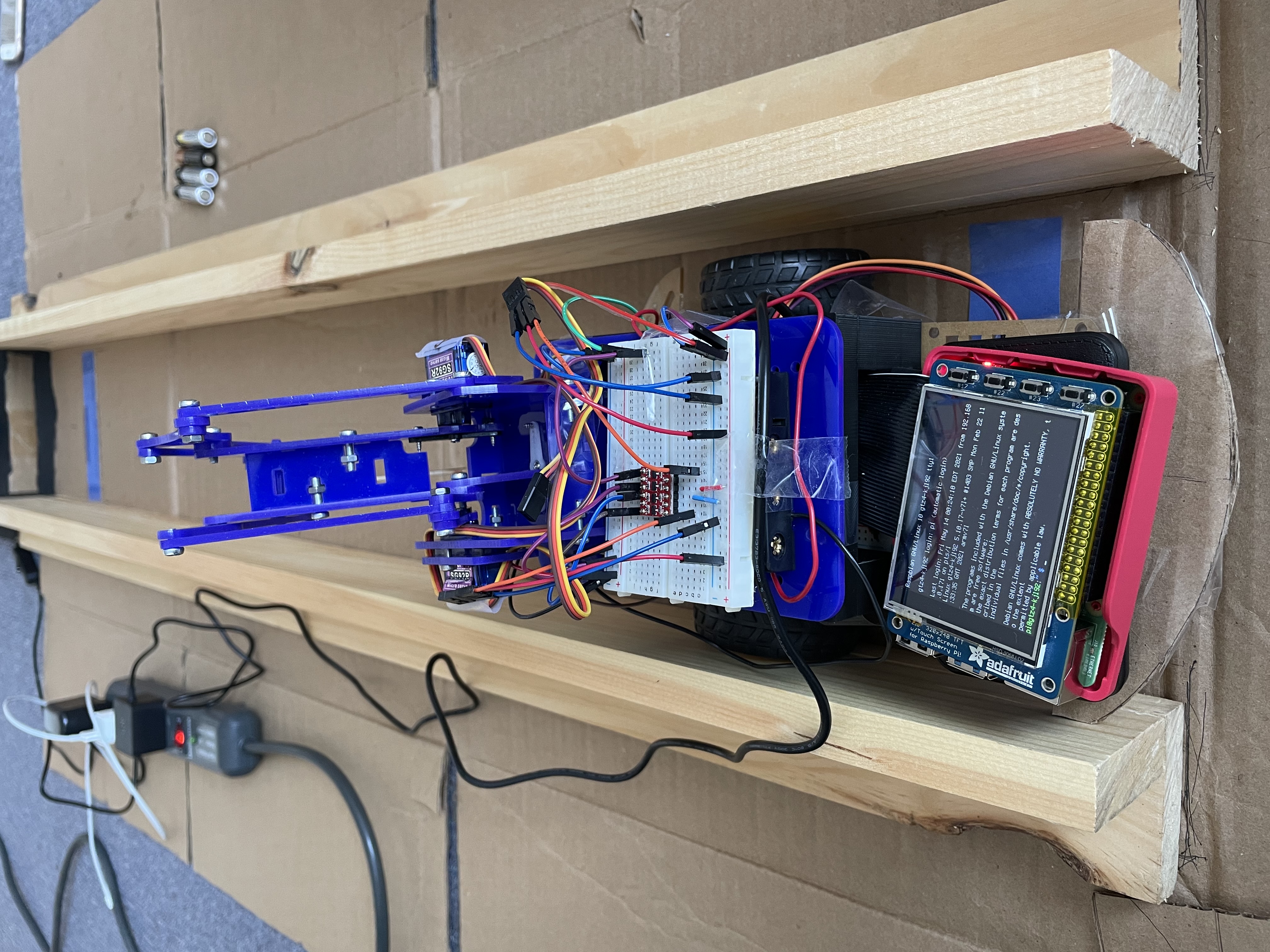

The hardware for the robot consisted of the Lab 3 robot parts, Raspberry Pi 4, piTFT, and a color sensor. The color sensor was used to detect when the robot should stop (i.e. it had reached the user or the charcuterie board).

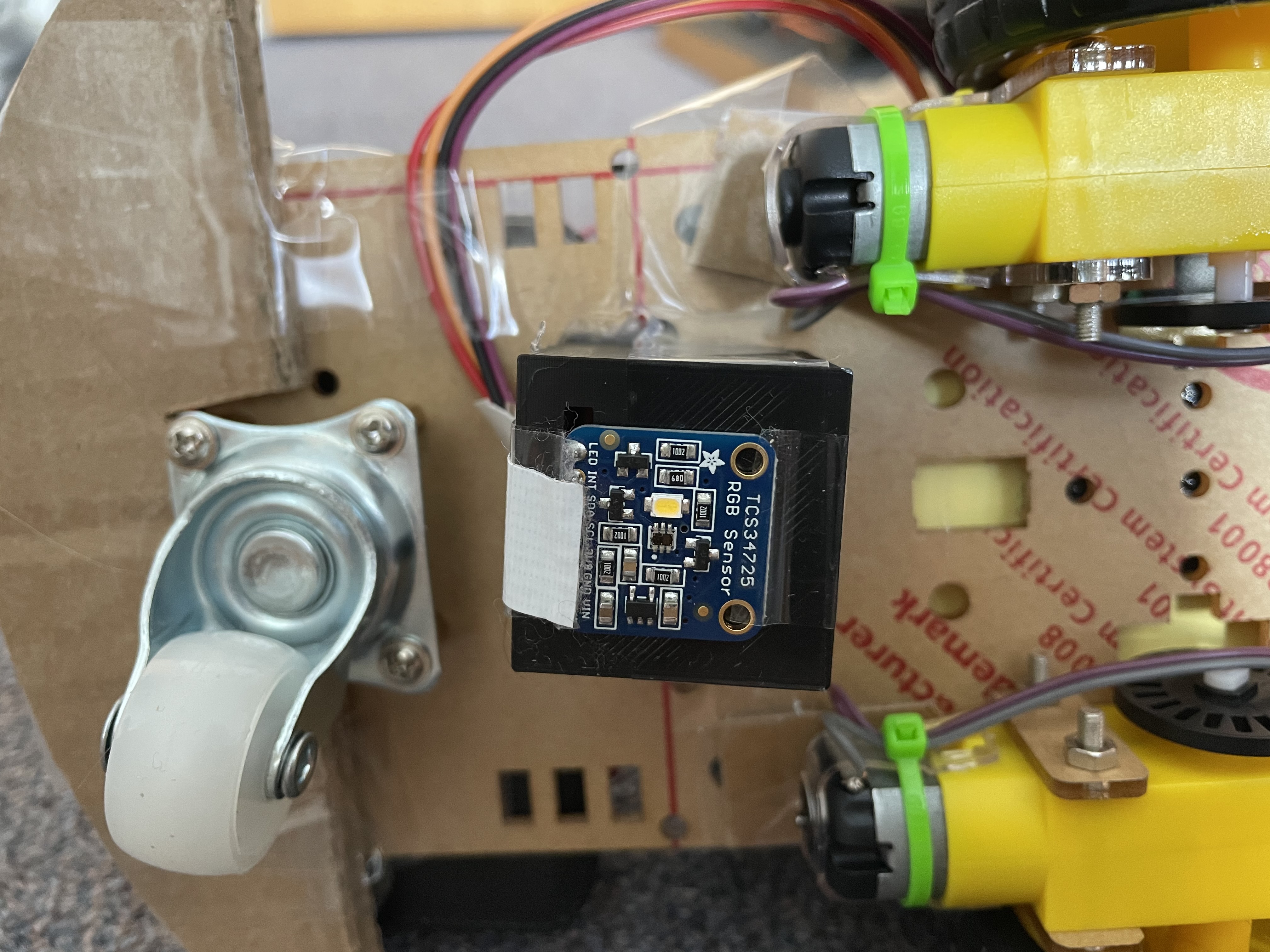

The color sensor communicated with the Raspberry Pi 4 over I2C. It was connected to the 3.3V pin, ground, SDA, and SCL. The SDA and SCL pins were GPIO2 and GPIO3, respectively.

The DC motors were set up in the same way they were for Lab 3. The left motor was connected to GPIO5 and GPIO6, and it used GPIO12 as its PWM pin. The right motor was connected to GPIO26 and GPIO19. GPIO21 was used for PWM. The duty cycle for both motors was 100 at 50 Hz frequency. We had to run the motors at the maximum duty cycle because the motors would not spin at lower duty cycles.

The robot frame was assembled in the same way it was for Lab 3. The piTFT screen was attached to the Raspberry Pi 4 in order to display the GUI.

Software Design

The software for the robot setup consisted of the pygame, RPi.GPIO, the color sensor logic, and the Python socket library. we used pygame to create the GUI for the piTFT. The RPi.GPIO library was used to setup the physical buttons on the piTFT screen and to control the DC motors. The color sensor returned the RGB value of the color directly beneath the robot. This was used in our drive logic. The Python socket library was used to communicate between both Raspberry Pis.

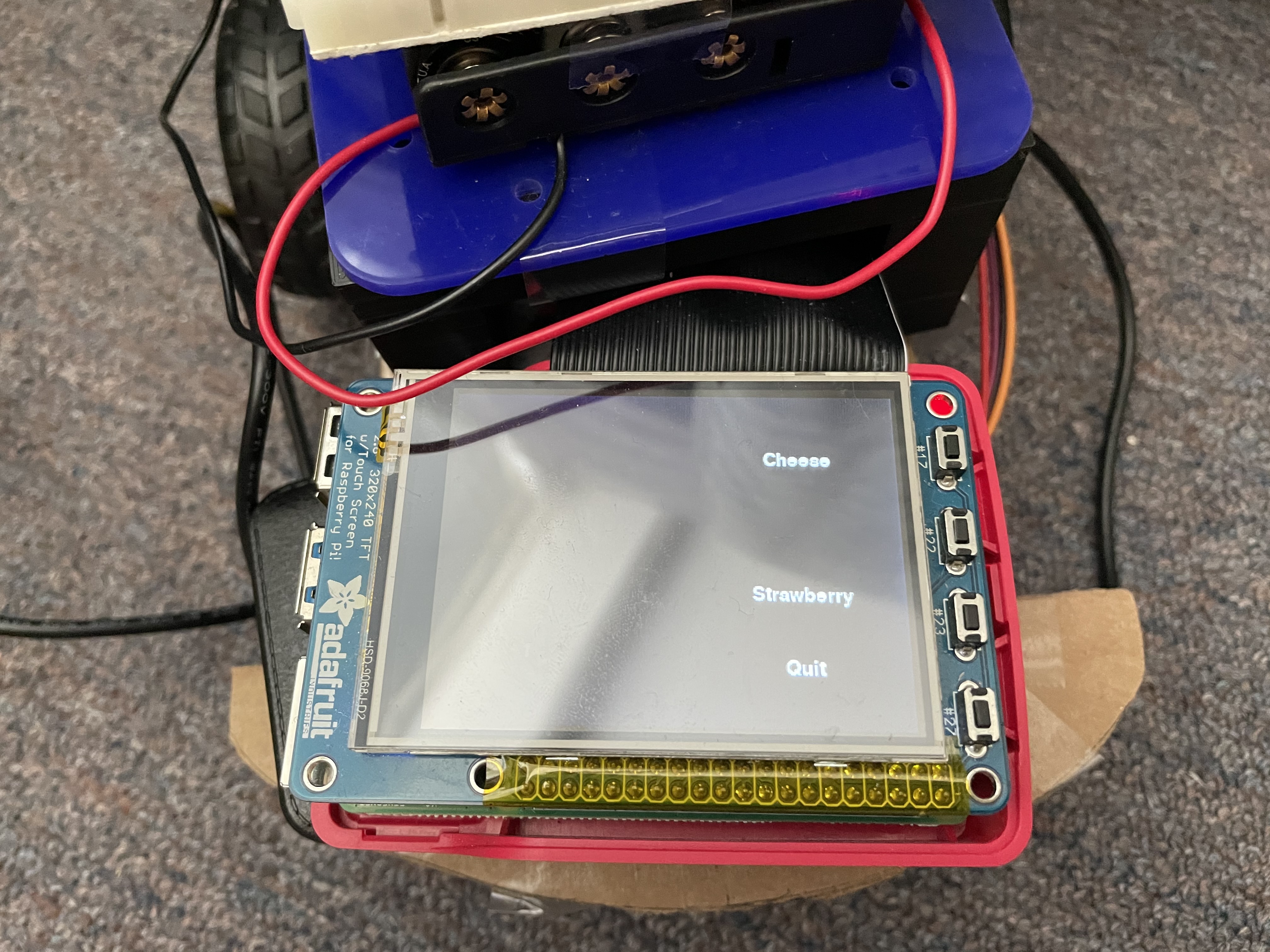

We used pygame to create the GUI that would be displayed to the user on the piTFT screen. The GUI displayed the available food choices on the charcuterie board, as well as an option to exit the program. Initially, we wanted to use touchscreen buttons, so the user could tap on the screen to choose a food item. However, we ran into issues with the touchscreen where the coordinates of the presses were very random. This resulted in lots of error when trying to choose an item, as the piTFT would not recognize the correct coordinates. This meant that whenever you pressed the screen, you would essentially be choosing a random food item. As a result, we switched to using the physical buttons on the piTFT screen instead. We displayed text on the screen next to each of the buttons to show the user what food item each of the buttons corresponded to. We used callback functions to perform the logic for each button.

If a food item was no longer available on the charcuterie board, it would no longer be displayed on the GUI, and pressing the button would not do anything. This was to prevent the user from trying to get a food that did not exist on the board.

For the motors, a drive function was made that took in direction as a parameter. There were three possible directions: forward, backward, and stop. If the motor should drive forwards, pins 6 and 19 were set high, and pins 5 and 26 were set low. If the motor should drive backward, the pins were flipped from the forward commands. To stop the motors, all of these outputs were set to low. Pins 12 and 21 were used for PWM and were not included in this drive function. Instead, this was set to a constant duty cycle of 100% outside of the drive function, as mentioned before.

The color sensor was used to determine when the robot should stop. When the color sensor saw the color blue, it would tell the robot to stop. The color sensor used the adafruit_tcs34725 and board libraries. We used the function sensor.color_rgb_bytes to return the RGB value of the color in front of the color sensor. Using this value, we checked if it was within a range of blue shades. If it was, the robot stopped; otherwise, the robot would continue moving.

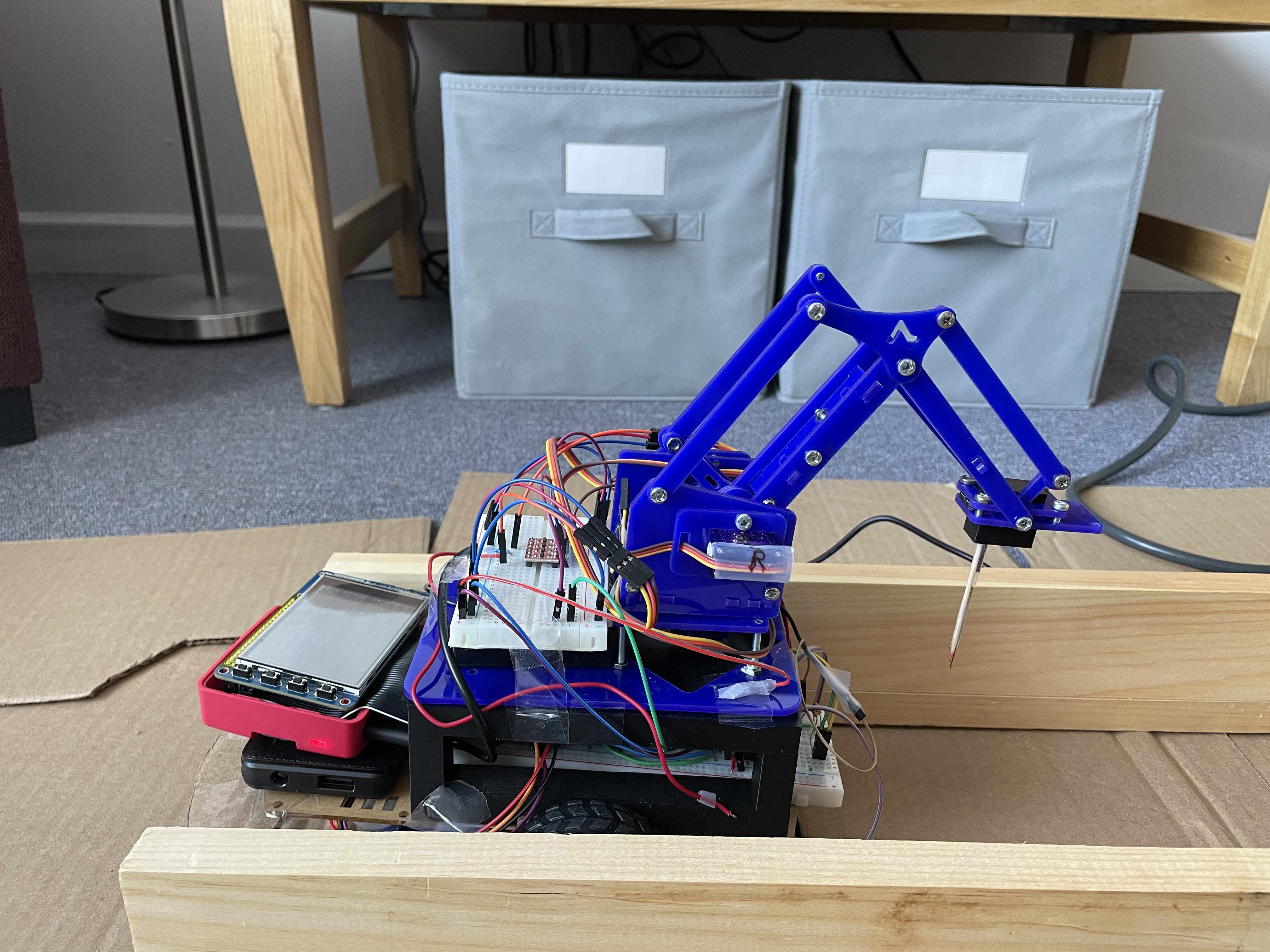

Initially, we also wanted to use the color sensor to keep the robot on course. We would do so by having colored lines on either side of the sensor. For example, there would be a red line on the left and a green line on the right. If the color sensor saw the color red, it would tell the robot to turn right. If the color sensor saw the color green, it would tell the robot to turn left. However, after attempting this, we determined that it was not feasible given the mechanical and timing constraints. Since the DC motors are not very accurate nor precise when moving, it was not feasible to have the robot turn accurately based on the feedback from the color sensor. In addition, since the robot needed to be facing the charcuterie board directly, we needed to be able to determine which way the robot was facing. Since we did not have a way of determining this, we needed a different method of ensuring the robot was lined up with the charcuterie board as precisely as possible. As a result, we decided to use wood and cardboard to build a track for the robot to follow. This would ensure that the robot would reach the charcuterie board facing the right way. We still used the color sensor to determing whether or not the robot should stop by placing a blue line on either end of the track.

The Python socket library was used to communicate between the two Raspberry Pis and is explained later.

Testing

Testing the robot was done in stages. Since we knew that the drive capabilities worked from Lab 3, we did not need to test whether the robot was able to drive. For the color sensor, we first looked at how good it was at detecting colors. We found that while it detected color quickly and accurately, the sensor had a very small range, leading to a 3D printed box that would keep the sensor around half a centimeter away from the ground. After this was attached to the bottom of the robot (as seen in figure 15), we created a path for the robot with colored lines. A program was made to test whether the robot was able to react correctly to the detected colors. However, as mentioned earlier, using the colored sensors to keep the robot moving on course did not work well. To determine what was happening, we printed out the detected colors onto the console. We saw that while the sensor would detect the colors correctly while driving, the motors would not turn accurately, leading to the robot sometimes driving off the track. Additionally, the robot would sometimes move so quickly that it would overshoot the colored lines. At first, we experimented with the speed of the motors to see whether slower speeds would provide more accuracy, but the motors on the robot did not have enough torque to move the robot at smaller speeds. Thus, the track in figure 18 was used instead.

With the track, we once again tested the driving capabilities of the robot with the color sensor. While driving, the robot would sometimes get stuck on the sides of the track. This was just due to friction between the sides of the robot and the track, and simply nudging the robot was usually enough for it to continue driving. A small cardboard bumper was added on the back of the robot, since it would get stuck more while driving backwards than forwards. This helped improve the smoohness of the driving. Because of all of the friction, the robot moved much slower than it had before, even when the motors were set to full speed. We found that the robot almost never overshot the blue lines at the ends of the tracks and consistently stopped correctly. Functionality was added for the robot to able to drive back and forth between the two lines. While there were some initial issues with the robot trying to move in the wrong direction at a bllue line or getting stuck at those lines, we added logic to ensure that the robot would not try to detect color until after the robot had moved off of the blue line.

The final stage was testing the GUI in conjunction with everything else. As mentioned before, the touchscreen would not register the touches at the correct coordinates, causing the food selection to be more like a game of roulette. While attempting to solve this issue, we first used collidepoint() to detect which button had beeen pressed. When this did not work, we then switched to manually checking whether the position of the touch was within a certain x and y bound. When this still failed, we then reran the code from Lab 2 that displayed the coordinates of the button presses. Since we knew that the Lab 2 code worked, there was no doubt that the touchscreen was not behaving as expected and giving us random coordinates. The phsyical buttons worked as expected and the robot was able able to register the selection, drive to the charcuterie board and stop a set distance away from it, and drive ack to the user before stopping at the blue line there, as needed.

Robot Arm

Hardware Design

The arm that we used was the MeArm Robot Arm v1.0. Although the base servo was included in the build of the arm, that was only for structural reasons; the servo was not used to move anything in the arm. Additionally, the claw was replaced by the toothpick holder. The entire arm was placed on the top of the 3D printed shelf on the robot.

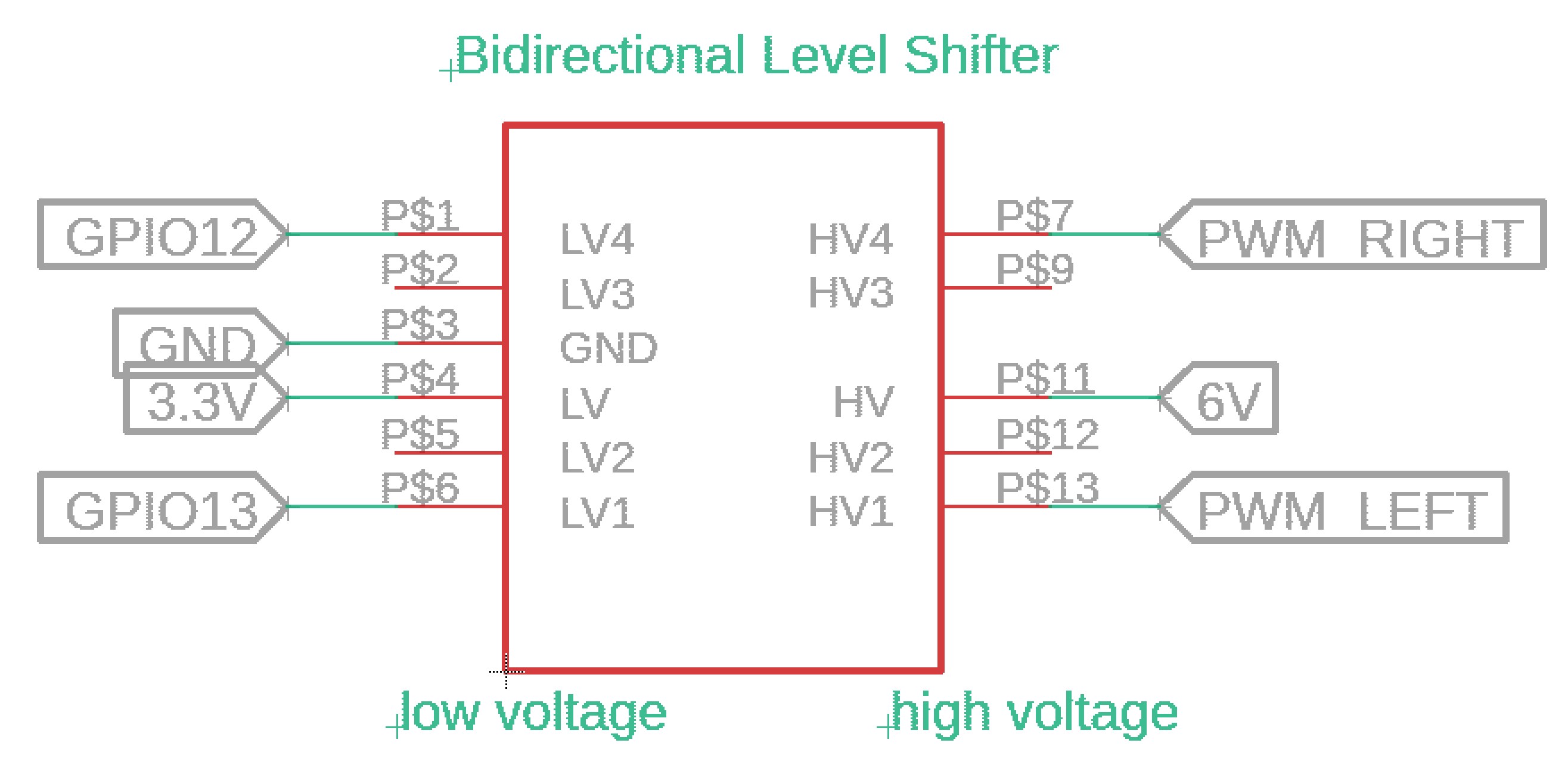

The servo connections are shown below. The signals from GPIO pins 12 and 13 were shifted to battery voltage before going to the servos. The servos are powered by the battery.

Software Design

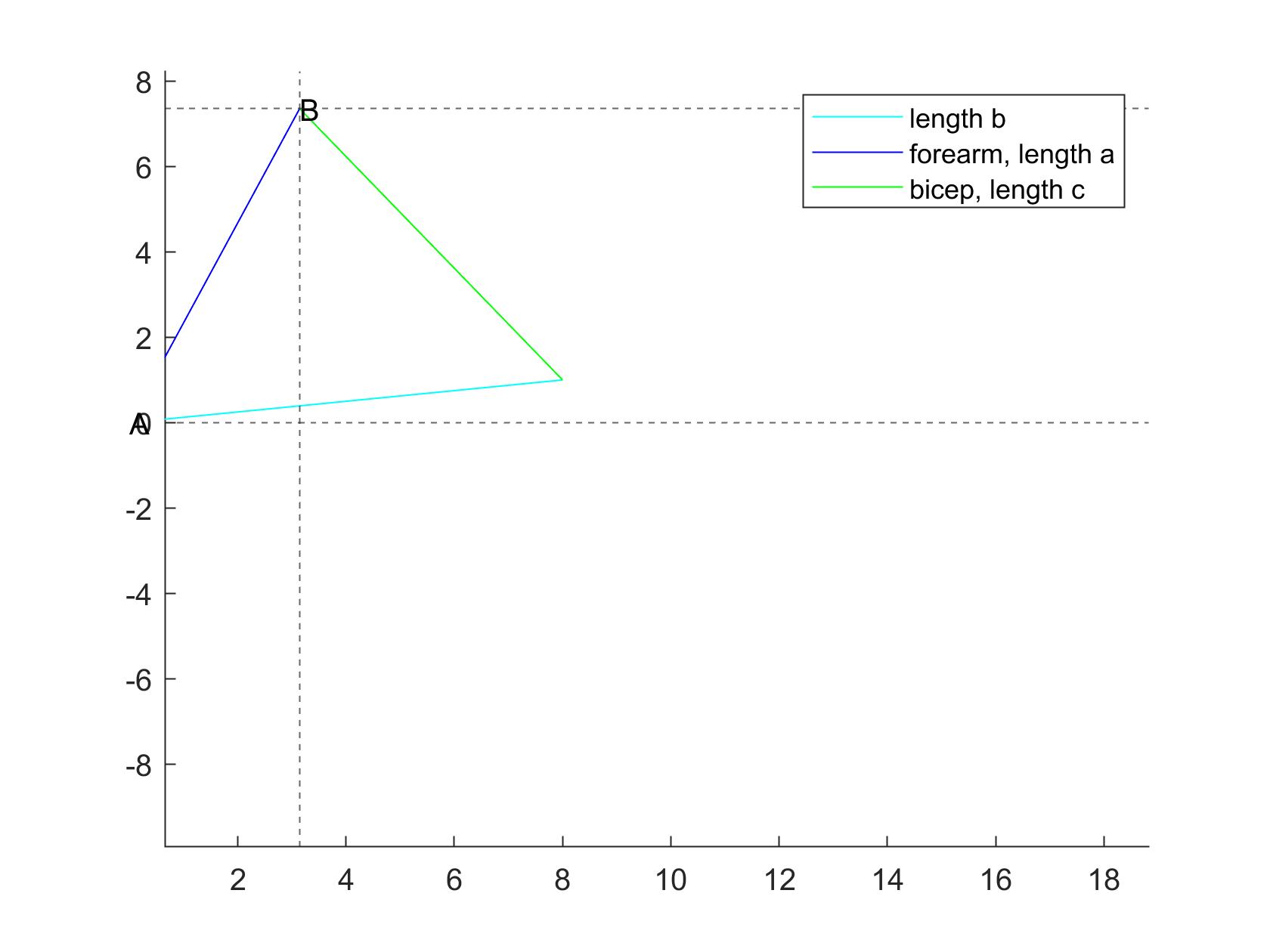

Since we were able to determine the position of the arm from the camera, we wanted to be able to move the arm to stab the food at that point. Thus, we had to use inverse kinematics to move the arm. Client calls the arm function, which is given the distance from the center of the charcuterie table to the center of the food in pixels. The arm function also takes in the left and right servo objects. Since we only used two servos, the arm only moved in one plane. The arm code converted the pixel distance to distance in centimeters from the base of the arm. The setup of the arm is below. There is a bicep and forearm, and each section is approximately 8 cm long. Each servo ranged from approximately 0° to 90°.

First, we had to determine the angles of A and B from the given x and y. To do this, we used the Pythagorean theorem, law of cosines, and general trigonometry to find angles A, B, and D. This then had to be translated into servo angles. The servo angles were both measured relative to the vertical axis at their respective joints, as pictured below. To avoid too much fiddling with signs, the angle D was always positive and an if statement determined what angle the servos should move to, depending on whether y was positive or negative. After further trigonometry, the servo angles were calculated and given bounds to avoid breaking either the arm or the servos. All of these calculations were done in the function calculate_angles, which took in x and y in centimeters as parameters and returned the servo angles.

After the calculations were done, the arm function then called on the move_arm function which took in the new servo angles as well as the last servo angles. This function moved the arm from its old position to its new position. Like the charcuterie table, this was done by incrementing the servo angle slowly, with a 0.05 second delay for the forearm and no delay for the bicep. The servos were moved through hardware PWM. When this was done, the function returned the current servo angles.

In the main arm function, the arm went through 5 different states: the home position, hover position, stab position, hover position, and finally back to home position. As the robot moved to each of these positions, calculate_angles and move_arm were called each time. A 2 second delay occurred between each movement. The home position was set to a position high enough for the arm to keep from hitting the charcuterie board when it drove up to it. The x position of the hover and stab positions were determined by the conversion from the pixel distance, while the y position was hardcoded in: 3 cm for hover, and -1 cm for stab.

Testing

The very first phase of testing involved simply testing the servos in the arm to check the range. A test program increased and decrased the servo angle. This was run individually on each servo. When running a single servo, there were no complications, but running two servos caused issues initially. This was partly due to the arm servos being connected to the same hardware PWM module. However, after this connections was changed, there were still issues with jitter. However, we proceeded with the testing.

MATLAB code was created to test the equations. The arm position was displayed and all of the angles were printed out to help with debugging.

After the MATLAB code worked, we then implemented it on the arm. This took several iterations to get the equations exactly right. When it did work, the arm proceeded to go to the correct position, but the amount of jitter would shake the arm so much that the arm would actual break after a while. We tried multiple different methods of setting the servo position and checked the signals on the oscilloscope to see if the PWM looked bad. In the end, we discovered that the jitter was due to the PWM signals being directly connected to the arm from the Pi. This was a problem because the Pi outputted 3.3V and the arm ran on 6V. By using the level shifter, this problem was avoided and the arm ran mostly smoothly.

To test whether the arm could actually pick up food, we put food items directly in front of the robot and manually gave the arm positions. We found that while the arm had no trouble going low enough to pick up the food, the toothpick was not sharp enough to pierce the food items. Even in the cheese, the easiest item to pick up, the toothpick would only make a dent in the top. To aid the toothpick, we taped a needle to the toothpick. This way, the needle would pierce the food and the toothpick would provide enough friction to keep the food from falling off. We were able to sucessfully stab all of the food items in this way.

After the whole system was working, some small calibration had to be done to the arm calculations to ensure that the position the arm went to was correct. This was done by subracting a small amount from the x position given to the calculate_angles function.

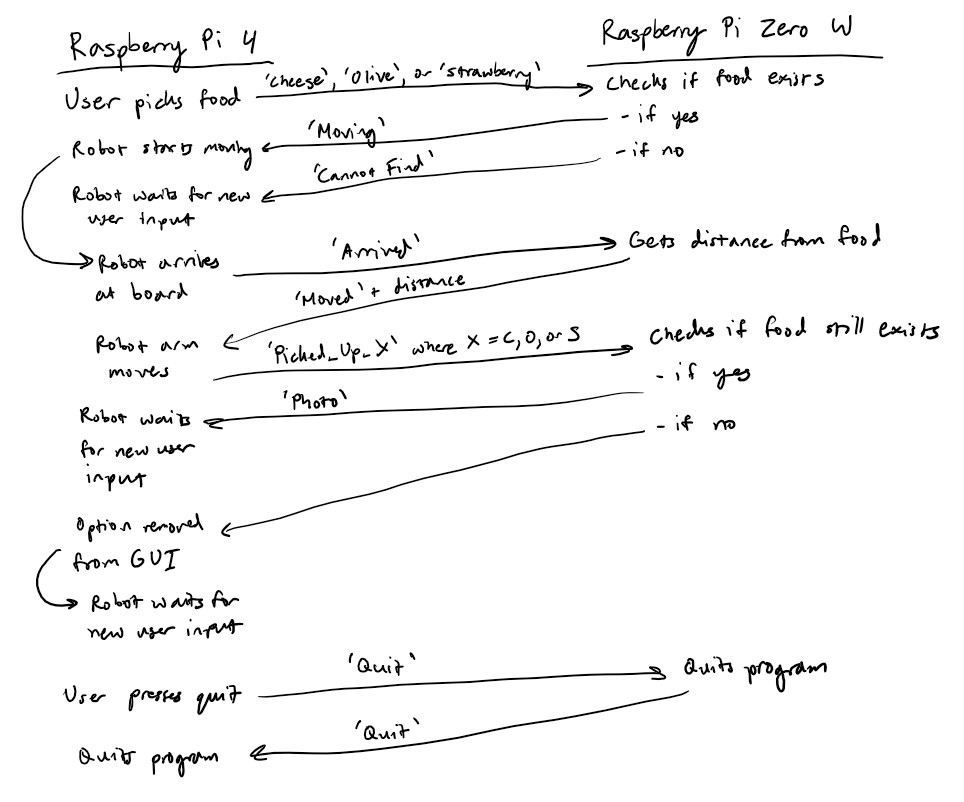

Communication

Communication between the Raspberry Pi 4 and the Raspberry Pi Zero W was handled with the Python socket library. The two Raspberry Pis communicated over WiFi. The Raspberry Pi Zero W acted as the server, and the Raspberry Pi 4 acted as the client. Below is a diagram of how the two Raspberry Pis communicated with each other.

Since the Rapsberry Pi 4 was the client and the Raspberry Pi Zero W was the server, the Raspberry Pi Zero W had to wait to receive a message from the Raspberry Pi 4 before it could send a response. We set it up this way because the Raspberry Pi 4 needed to initiate the communication, so it could not act as the server.

Pi Charcuterie in action:

Results and Conclusions

In the end, we were successfully able to implement almost all of the functionality of the charcutrie robot. The user was able to input their desired food selection and the robot was able to locate the food item and stab it. Overall, the color and food detection were the most consistent. The robot repeatedly stopped in the same location over the blue lines and the charcuterie board consistently spun to align the correct food item with the robot. With the camera, the only variation was lighting, which was easily taken care of by changing the camera settings.

Our main problems came from mechanical errors. The motors for driving the robot were not accurate enough, causing us to have to use physical tracks to keep the robot in a straight line. The arm did not have enough torque to properly stab some of the food items, and it also had a tendency to drift left or right of the food because of the base servo. Because of this, sometimes the robot arm was not properly aligned with the food and would miss slightly. Additionally, the toothpick was not strong enough to keep the food on the toothpick, causing the food to fall off while the robot was driving to the user. All of these problems were due simply to mechanical variations that our current setup could not account for.

Future Work

If we had more time to work on the project, we would have definitely explored ways to make the robot arm more powerful and stable. For example, we would have tried using more powerful motors to generate more torque. In addition, we would have liked to try to have the charcuterie board adjust to the robot arm's position as it was trying to pick up the food to try to reduce the chances of missing. Additionally, using a linear drive mechanism such as stepper motors or a linear actuator would have been much more accurate than using inverse kinematics on the robot arm. We would also have used higher quality motors that would have allowed for more accurate driving.

Budget

| Item | Cost |

|---|---|

| Raspberry Pi 4 | $0 |

| Raspberry Pi Zero W | $10 |

| Raspberry Pi Camera | $15 |

| Lab 3 Robot | $0 |

| Robot arm + servo motors | $23.31 |

| Color sensor | $7.95 |

| Bidirectional level shifter | $2.95 |

| 3D printed parts | $9.03 |

| Wood + cardboard | $10 |

| Total | $78.24 |

References

- pygame Documentation

- Pi Camera Documentation

- OpenCV Documentation

- Color Sensor Documentation

- piServoCtl Documentation

- Python Socket Library Documentation

- MeArm Robot Arm v1.0

- pigpio Documentation

Code Appendix

Link to our GitHubThe two main files are located at 'FinalProject/server/server.py' and 'FinalProject/client/client.py'

Client code

client.py

import socket

import pygame

from pygame.locals import*

import os

import RPi.GPIO as GPIO

from color import *

import subprocess

from arm import *

import pigpio

# Call pigpiod

subprocess.call(['sudo', 'pigpiod'])

# Set up environment

os.putenv('SDL_VIDEODRIVER', 'fbcon')

os.putenv('SDL_FBDEV', '/dev/fb1')

os.putenv('SDL_MOUSEDRV', 'TSLIB')

os.putenv('SDL_MOUSEDEV', '/dev/input/touchscreen')

# Use BCM numbers

GPIO.setmode(GPIO.BCM)

# Initialize pygame

pygame.init()

pygame.mouse.set_visible(False)

# Set up GPIO pins

GPIO.setup(12, GPIO.OUT)

GPIO.setup(5, GPIO.OUT)

GPIO.setup(6, GPIO.OUT)

GPIO.setup(21, GPIO.OUT)

GPIO.setup(26, GPIO.OUT)

GPIO.setup(19, GPIO.OUT)

GPIO.setup(17, GPIO.IN, pull_up_down=GPIO.PUD_UP)

GPIO.setup(22, GPIO.IN, pull_up_down=GPIO.PUD_UP)

GPIO.setup(23, GPIO.IN, pull_up_down=GPIO.PUD_UP)

GPIO.setup(27, GPIO.IN, pull_up_down=GPIO.PUD_UP)

# Initialize duty cycle

dutyA = 100

dutyB = 100

# Set up DC motors

GPIO.output(5, GPIO.LOW)

GPIO.output(6, GPIO.LOW)

p = GPIO.PWM(12, 50)

p.start(dutyA)

GPIO.output(26, GPIO.LOW)

GPIO.output(19, GPIO.LOW)

q = GPIO.PWM(21, 50)

q.start(dutyB)

# Set up servo motors

left_pin = 13

right_pin = 12

left = pigpio.pi()

left.set_mode(left_pin, pigpio.OUTPUT)

right = pigpio.pi()

right.set_mode(right_pin, pigpio.OUTPUT)

# Define colors

black = 0,0,0

white = 255,255,255

red = 255,0,0

green = 0,255,0

yellow = 255,255,0

# Define button dictionary

buttons = {'Cheese': (250,50), 'Olive': (250,100), 'Strawberry': (250,150), 'Quit': (250,200)}

screen = pygame.display.set_mode((320, 240))

font = pygame.font.Font(None, 20)

# Server IP and port

ip = '192.168.0.145'

port = 9050

# Set up color sensor

i2c = board.I2C()

sensor = adafruit_tcs34725.TCS34725(i2c)

sensor.gain = 16

color = (255,255,255)

# Run the program

running = True

sendData = False

data = ''

# Variables to keep track of existing foods

cheese = True

olive = True

strawberry = True

# Initialize the clock

clock = pygame.time.Clock()

# Assign buttons to rects and text surfaces

for text, text_pos in buttons.items():

text_surface = font.render(text, True, white)

rect = text_surface.get_rect(center=text_pos)

if text == 'Cheese':

rect1 = rect

text_surface1 = text_surface

elif text == 'Olive':

rect2 = rect

text_surface2 = text_surface

elif text == 'Strawberry':

rect3 = rect

text_surface3 = text_surface

elif text == 'Quit':

rect4 = rect

text_surface4 = text_surface

# GPIO17 callback function

def GPIO17_callback(channel):

global sendData

global data

global cheese

# Send cheese

if cheese:

sendData = True

data = 'Cheese'

# GPIO22 callback function

def GPIO22_callback(channel):

global sendData

global data

global olive

# Send olive

if olive:

sendData = True

data = 'Olive'

# GPIO23 callback function

def GPIO23_callback(channel):

global sendData

global data

global strawberry

# Send strawberry

if strawberry:

sendData = True

data = 'Strawberry'

# Physical quit button

def GPIO27_callback(channel):

global sendData

global data

global running

# Quit the program on both ends

running = False

sendData = True

data = 'Quit'

# Add GPIO events

GPIO.add_event_detect(17, GPIO.FALLING, callback=GPIO17_callback, bouncetime=300)

GPIO.add_event_detect(22, GPIO.FALLING, callback=GPIO22_callback, bouncetime=300)

GPIO.add_event_detect(23, GPIO.FALLING, callback=GPIO23_callback, bouncetime=300)

GPIO.add_event_detect(27, GPIO.FALLING, callback=GPIO27_callback, bouncetime=300)

# Drive function

def drive(direction):

if direction == 'forward':

GPIO.output(26, GPIO.LOW)

GPIO.output(19, GPIO.HIGH)

GPIO.output(5, GPIO.LOW)

GPIO.output(6, GPIO.HIGH)

elif direction == 'backward':

GPIO.output(26, GPIO.HIGH)

GPIO.output(19, GPIO.LOW)

GPIO.output(5, GPIO.HIGH)

GPIO.output(6, GPIO.LOW)

elif direction == 'stop':

GPIO.output(26, GPIO.LOW)

GPIO.output(19, GPIO.LOW)

GPIO.output(5, GPIO.LOW)

GPIO.output(6, GPIO.LOW)

# Run program

while running:

# Set FPS

clock.tick(40)

# Clear the screen

screen.fill(black)

# Display buttons

if cheese:

# Display cheese button

screen.blit(text_surface1, rect1)

if olive:

# Display olive button

screen.blit(text_surface2, rect2)

if strawberry:

# Disply strawberry button

screen.blit(text_surface3, rect3)

# Display quit button

screen.blit(text_surface4, rect4)

# Check if should send message to server

if sendData:

print(data)

sendData = False

try:

# Connect to server

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

s.connect((ip, port))

msg = data

# Send message to server

s.sendall(msg.encode())

resp = s.recv(1024)

resp = resp.decode('utf-8')

print(resp)

# Check server response

if resp == 'Moving':

# Move robot off starting line

drive('forward')

time.sleep(2)

color = getColor(sensor)

while (color[0] < 50 and color[1] < 180 and color[2] <= 255 and color[0] > 0 and color[1] > 0 and color[2] > 125):

# Move the robot forward off the start line

drive('forward')

# Check color

color = getColor(sensor)

# Check that the robot is not on the blue line

while not (color[0] < 50 and color[1] < 180 and color[2] <= 255 and color[0] > 0 and color[1] > 0 and color[2] > 125):

color = getColor(sensor)

drive('forward')

drive('stop')

# Tell server that robot has arrived

msg = 'Arrived'

s.sendall(msg.encode())

resp = s.recv(1024)

resp = resp.decode('utf-8')

print(resp)

resp = resp.split(' ')

if resp[0] == 'Moved':

dist = resp[1]

# Robot arm movement

arm(dist, left, right)

# Send response based on what robot picked up

if data == 'Cheese':

msg = 'PickedUp_C'

s.sendall(msg.encode())

elif data == 'Olive':

msg = 'PickedUp_O'

s.sendall(msg.encode())

elif data == 'Strawberry':

msg = 'PickedUp_S'

s.sendall(msg.encode())

# Drive off of the end line

while (color[0] < 50 and color[1] < 180 and color[2] <= 255 and color[0] > 0 and color[1] > 0 and color[2] > 125):

drive('backward')

# Check color

color = getColor(sensor)

resp = s.recv(1024)

resp = resp.decode('utf-8')

print(resp)

# Check if should remove option from GUI

if resp == 'None':

if data == 'Cheese':

cheese = False

elif data == 'Olive':

olive = False

elif data == 'Strawberry':

strawberry = False

# Move backward until reach start again

while not (color[0] < 50 and color[1] < 180 and color[2] <= 255 and color[0] > 0 and color[1] > 0 and color[2] > 125):

color = getColor(sensor)

drive('backward')

drive('stop')

finally:

# Close connection

s.close()

# Display GUI

pygame.display.flip()

# Stop motors

p.stop()

q.stop()

left.stop()

right.stop()

# Cleanup GPIO pins

GPIO.cleanup()

arm.py

#

# gtz4, kjl92

# arm.py

# controls arm servos

#

import time

import RPi.GPIO as GPIO

import pigpio

import math

def arm(pixels_to_c, left, right):

# home position, should be in this before this function is called

home_bicep = 20 #10

last_bicep = home_bicep

home_forearm = 45 #70

last_forearm = home_forearm

table_d = 15.5 # cm

table_p = 741 # pixels

conversion = table_d/table_p # cm/pixels

x = 15 - (int(float(pixels_to_c)) - 5)*conversion

y1 = 3 #5

y2 = -1

# go from home position to hover above food

print('Home -> Hover')

forearm, bicep = calculate_angles(x, y1)

last_forearm, last_bicep = move_arm(bicep, forearm, last_bicep, last_forearm, left, right)

time.sleep(2)

# go from hover position to stab food

print('Hover -> Stab')

forearm, bicep = calculate_angles(x, y2)

last_forearm, last_bicep = move_arm(bicep, forearm, last_bicep, last_forearm, left, right)

time.sleep(2)

# go from stab position to hover

print('Stab -> Hover')

forearm, bicep = calculate_angles(x, y1)

last_forearm, last_bicep = move_arm(bicep, forearm, last_bicep, last_forearm, left, right)

time.sleep(2)

# go from hover back to home position

print('Hover -> Home')

move_arm(home_bicep, home_forearm, last_bicep, last_forearm, left, right)

def calculate_angles(x,y):

import math

b = math.sqrt(x**2 + y**2)

a = 8

c = 8

D = math.degrees(math.atan(y/x))

# law of cosines

# a^2 = b^2 + c^2 -2*b*c*cosA

B = math.acos((b**2 - a**2 - c**2)/(-2*a*c))

A = math.acos((a**2 - b**2 - c**2)/(-2*b*c))

B = math.degrees(B)

A = math.degrees(A)

C = 180 - A - B

D = abs(D)

# convert to servo values

if (y > 0):

bicep = int((90 - A - D)/1.05)

forearm = int((B - 90 + A + D)/1.05)

elif (y == 0):

bicep = int((90 - A)/1.05)

forearm = int((B/2)/1.05)

else:

bicep = int((90 - A + D)/1.05)

forearm = int((90 - D - C)/1.05)

if forearm > 80:

forearm = 80

if forearm < 10:

forearm = 10

if bicep > 80:

bicep = 80

if bicep < 10:

bicep = 10

print('bicep angle: ')

print(bicep)

print('forearm angle: ')

print(forearm)

return forearm, bicep

def move_arm(bicep, forearm, last_bicep, last_forearm, left, right):

left_pin = 13

right_pin = 12

i = last_bicep

j = last_forearm

direction_left = bicep - last_bicep

direction_right = forearm - last_forearm

while ((i != bicep) or (j != forearm)):

if j!= forearm:

f = int((j*2000/180 + 500) * 50)

left.hardware_PWM(13, 50, f)

time.sleep(0.05)

if direction_right > 0:

j = j + 1

else:

j = j - 1

if i!= bicep:

b = int((i*2000/180 + 500) * 50)

right.hardware_PWM(12, 50, b)

if direction_left > 0:

i = i + 1

else:

i = i - 1

last_bicep = bicep

last_forearm = forearm

left.set_PWM_dutycycle(left_pin, 0)

left.set_PWM_frequency(left_pin, 0)

right.set_PWM_dutycycle(right_pin, 0)

right.set_PWM_frequency(right_pin, 0)

return last_forearm, last_bicep

color.py

import time

import board

import adafruit_tcs34725

# Return color that the color sensor sees

def getColor(sensor):

# Get RGB color value

color = sensor.color_rgb_bytes

print("Color: ({0}, {1}, {2})".format(*color))

return color

Server code

server.py

import socket

import struct

from move import *

from picamera import PiCamera

import subprocess

# Call sudo pigpiod

subprocess.call(['sudo', 'pigpiod'])

# Run the program

running = True

# Create the servo object

servo = Servo(19)

# Zero the servo

curr = 0

servo.write(curr)

time.sleep(2)

# Calibrate the servo

curr = drive(90, curr, servo)

time.sleep(2)

curr = drive(0, curr, servo)

time.sleep(2)

curr = drive(90, curr, servo)

time.sleep(2)

curr = drive(0, curr, servo)

time.sleep(2)

# Set up the camera and take an image

camera = PiCamera(resolution=(1280,720), framerate=30)

camera.iso = 200 # Daytime lighting

time.sleep(2)

camera.shutter_speed = camera.exposure_speed

camera.exposure_mode = 'off'

g = camera.awb_gains

camera.awb_mode = 'off'

camera.awb_gains = g

camera.capture('board.jpg')

camera.close()

# Intialize distance

dist = 0

# Server loop

while running:

# Set up socket server

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

s.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)

s.bind(('192.168.0.145',9050))

s.listen(1)

connection, client_address = s.accept()

# Check for connection

try:

print('Connected by ', client_address)

# Loop to receive messages

while True:

data = connection.recv(1024)

data = data.decode('utf-8')

# Check message

if data == 'Cheese':

print(data)

# Move the servo to the proper position

target_angle, curr, dist = getTargetAngle(curr, 'yellow')

if target_angle < 400:

print(curr)

resp = 'Moving'

curr = drive(target_angle, curr, servo)

else:

resp = 'Cannot Find'

connection.sendall(resp.encode())

elif data == 'Olive':

print(data)

# Move the servo to the proper position

target_angle, curr, dist = getTargetAngle(curr, 'green')

if target_angle < 400:

print(curr)

resp = 'Moving'

curr = drive(target_angle, curr, servo)

else:

resp = 'Cannot Find'

connection.sendall(resp.encode())

elif data == 'Strawberry':

print(data)

# Move the servo to the proper position

target_angle, curr, dist = getTargetAngle(curr, 'red')

if target_angle < 400:

print(curr)

resp = 'Moving'

curr = drive(target_angle, curr, servo)

else:

resp = 'Cannot Find'

connection.sendall(resp.encode())

elif data == 'Arrived':

print(data)

# Send distance

resp = 'Moved ' + str(dist)

connection.sendall(resp.encode())

elif data == 'PickedUp_C':

print(data)

# Set up the camera and take an image

camera = PiCamera(resolution=(1280,720), framerate=30)

camera.iso = 200

time.sleep(2)

camera.shutter_speed = camera.exposure_speed

camera.exposure_mode = 'off'

g = camera.awb_gains

camera.awb_mode = 'off'

camera.awb_gains = g

camera.capture('board.jpg')

camera.close()

target_angle, curr, dist = getTargetAngle(curr, 'yellow')

# Check if food item still exists

if target_angle < 400:

resp = 'Photo'

else:

resp = 'None'

connection.sendall(resp.encode())

elif data == 'PickedUp_O':

print(data)

# Set up the camera and take an image

camera = PiCamera(resolution=(1280,720), framerate=30)

camera.iso = 200

time.sleep(2)

camera.shutter_speed = camera.exposure_speed

camera.exposure_mode = 'off'

g = camera.awb_gains

camera.awb_mode = 'off'

camera.awb_gains = g

camera.capture('board.jpg')

camera.close()

target_angle, curr, dist = getTargetAngle(curr, 'green')

# Check if food item still exists

if target_angle < 400:

resp = 'Photo'

else:

resp = 'None'

connection.sendall(resp.encode())

elif data == 'PickedUp_S':

print(data)

# Set up the camera and take an image

camera = PiCamera(resolution=(1280,720), framerate=30)

camera.iso = 200

time.sleep(2)

camera.shutter_speed = camera.exposure_speed

camera.exposure_mode = 'off'

g = camera.awb_gains

camera.awb_mode = 'off'

camera.awb_gains = g

camera.capture('board.jpg')

camera.close()

target_angle, curr, dist = getTargetAngle(curr, 'red')

# Check if food item still exists

if target_angle < 400:

resp = 'Photo'

else:

resp = 'None'

connection.sendall(resp.encode())

elif data == 'Quit':

# Quit the program

running = False

curr = drive(0, curr, servo)

resp = 'Quit'

connection.sendall(resp.encode())

else:

break

finally:

# Close the socket

connection.close()

# Move the table back to the intitial position

drive(0, curr, servo)

# Stop servo

servo.stop()

easyContours.py

from __future__ import print_function

import cv2 as cv

import numpy as np

import argparse

import random

import time

from picamera import PiCamera

# Function used for testing. Displays all images

def easyContoursImage(original, masked, thresh):

# Apply a blur to the masked image

src_gray = cv.blur(masked, (2, 2))

blur_window = 'Blur'

cv.namedWindow(blur_window)

cv.imshow(blur_window, src_gray)

# Find edges

canny_output = cv.Canny(src_gray, thresh, thresh * 2)

# Find contours

contours, _ = cv.findContours(canny_output, cv.RETR_TREE, cv.CHAIN_APPROX_SIMPLE)

contours_poly = [None]*len(contours)

boundRect = []

centers = []

radius = []

# Draw rectangles around contours

for i, c in enumerate(contours):

contours_poly[i] = cv.approxPolyDP(c, 3, True)

x,y,w,h = cv.boundingRect(contours_poly[i])

# Check if the contour has a large enough area

if w > 25 and h > 25:

boundRect.append([x,y,w,h])

center, radius = cv.minEnclosingCircle(contours_poly[i])

centers.append(center)

# Display rectangles on original image

for rectangle in boundRect:

color = (random.randint(0,256), random.randint(0,256), random.randint(0,256))

cv.rectangle(original, (int(rectangle[0]), int(rectangle[1])), \

(int(rectangle[0]+rectangle[2]), int(rectangle[1]+rectangle[3])), color, 2)

return original

# Function called to get the contours around the masked image

def easyContours(masked, thresh):

# Apply a blur to the image

src_gray = cv.blur(masked, (2, 2))

# Find edges

canny_output = cv.Canny(src_gray, thresh, thresh * 2)

# Find contours

contours, _ = cv.findContours(canny_output, cv.RETR_TREE, cv.CHAIN_APPROX_SIMPLE)

contours_poly = [None]*len(contours)

boundRect = []

centers = []

# Draw rectangles around contours

for i, c in enumerate(contours):

contours_poly[i] = cv.approxPolyDP(c, 3, True)

x,y,w,h = cv.boundingRect(contours_poly[i])

# Check if the contour has a large enough area

if w > 25 and h > 25:

boundRect.append([x,y,w,h])

center, radius = cv.minEnclosingCircle(contours_poly[i])

centers.append(center)

# Return a list of centers of rectangles

return centers

find.py

import cv2

from picamera import PiCamera

import numpy as np

import time

from fractions import Fraction

import math

from piservo import Servo

import time

# This function will take a vector and compute the angle required

# to align it with the positive y axis. It is assumed that the input

# vector is relative to the middle of the table.

# This function also takes the current angle of the servo relative

# to its zero position

def computeAngle(x, y, curr_angle):

# FIRST QUADRANT

phi = curr_angle*360/174 # Set phi to the current angle

print("X: " + str(x))

print("Y: " + str(y))

if (x >= 0 and y < 0):

print("First Quadrant")

theta = -math.atan(x/y) * (180/math.pi)

if phi < theta:

target_angle = 360 - (theta - phi)

else:

target_angle = phi - theta

print(target_angle)

# SECOND QUADRANT

elif (x >= 0 and y >= 0):

print("Second Quadrant")

theta = math.atan(y/x) * (180/math.pi)

if phi < (90 + theta):

target_angle = 360 - ((90 + theta) - phi)

else:

target_angle = phi - (90 + theta)

print(target_angle)

# THIRD QUADRANT

elif (x < 0 and y >= 0):

print("Third Quadrant")

theta = -math.atan(x/y) * (180/math.pi)

if phi < (180 + theta):

target_angle = 360 - ((180 + theta) - phi)

else:

target_angle = phi - (180 + theta)

print(target_angle)

# FOURTH QUADRANT

elif (x < 0 and y < 0):

print("Fourth Quadrant")

theta = math.atan(y/x) * (180/math.pi)

if phi < (270 + theta):

target_angle = 360 - ((270 + theta) - phi)

else:

target_angle = phi - (270 + theta)

print(target_angle)

else:

print("Couldn't Find Quadrant")

# Return the angle to move to

return target_angle

move.py

import cv2 as cv

import numpy as np

from fractions import Fraction

import math

from piservo import Servo

import time

from find import *

from easyContours import *

import random

# Function to slowly drive servo to a position

def drive(angle, curr_angle, servo):

# Normalize to account for angle inaccuracy

normalize = 172/180

angle = angle * normalize

while curr_angle < angle:

curr_angle += 1

servo.write(curr_angle)

time.sleep(0.05)

while curr_angle > angle:

curr_angle -= 1

servo.write(curr_angle)

time.sleep(0.05)

return curr_angle

# Function used for testing. Displays images

def getTargetAngleImage(curr_angle, color):

frame = cv.imread('photo.jpg')

# Define color thresholds

lower_red = np.array([165, 100, 90])

upper_red = np.array([179, 255, 255])

lower_yellow = np.array([0,100, 150])

upper_yellow = np.array([20, 255, 255])

lower_green = np.array([15, 50, 30])

upper_green = np.array([50, 255, 150])

# Convert the image to HSV and apply the color mask

# to find the sponge

frame1 = cv.cvtColor(frame, cv2.COLOR_BGR2HSV)

if color == 'red':

spongeFrame = cv.inRange(frame1, lower_red, upper_red)

elif color == 'yellow':

spongeFrame = cv.inRange(frame1, lower_yellow, upper_yellow)

elif color == 'green':

spongeFrame = cv.inRange(frame1, lower_green, upper_green)

# Get the contours from the sponges (should give the center

# of the sponges)

try:

sponges = easyContours(spongeFrame, 190)

assert len(sponges) > 0, "No Sponges Found!"

except AssertionError:

# Display the sponge mask

cv.imshow('Sponge Mask', spongeFrame)

while True:

if cv.waitKey(1) & 0xFF == ord('q'):

break

origin_x = 633

origin_y = 332

# Pick a sponge at random

chosenSponge = sponges[random.randint(0, len(sponges)-1)]

sx, sy = chosenSponge

# Calculate the vector for the sponge

x = sx - origin_x

y = sy - origin_y

# Get the angle we need to move to to align the chosen sponge

# with the positive y axis

target_angle = computeAngle(x, y, curr_angle) / 2

dist = x**2 + y**2

dist = math.sqrt(dist)

# Draw the bounding rectangles on the original image

frame = easyContoursImage(frame, spongeFrame, 190)

# Also going to draw axis through the origin on the image for clarity

cv.line(frame, (1280, int(origin_y)), (0, int(origin_y)), (255, 255, 255), 2)

cv.line(frame, (int(origin_x), 0), (int(origin_x), 720), (255, 255, 255), 2)

# Display the original, annotated image

cv.imshow('frame',frame)

while True:

if cv.waitKey(1) & 0xFF == ord('q'):

break

# Display the original, annotated image

cv.imshow('Sponge Frame',spongeFrame)

while True:

if cv.waitKey(1) & 0xFF == ord('q'):

break

cv.destroyAllWindows()

# Return the angle

return target_angle, curr_angle, dist

# Function to find the target angle and distance to the food

def getTargetAngle(curr_angle, color):

# Load table image

frame = cv.imread('board.jpg')

# Initialize distance and target angle

dist = 0

target_angle = 0

# Define color thresholds

lower_red = np.array([165, 100, 90])

upper_red = np.array([179, 255, 255])

lower_yellow = np.array([0,100, 150])

upper_yellow = np.array([20, 255, 255])

lower_green = np.array([15, 50, 30])

upper_green = np.array([50, 255, 150])

# Convert the image to HSV and apply the color mask

# to find the food

frame1 = cv.cvtColor(frame, cv2.COLOR_BGR2HSV)

if color == 'red':

foodFrame = cv.inRange(frame1, lower_red, upper_red)

elif color == 'yellow':

foodFrame = cv.inRange(frame1, lower_yellow, upper_yellow)

elif color == 'green':

foodFrame = cv.inRange(frame1, lower_green, upper_green)

# Get the contours from the food (should give the center

# of the food)

try:

food = easyContours(foodFrame, 190)

assert len(food) > 0, "No Food Found!"

except AssertionError:

target_angle = 400

# Check that food has been found

if target_angle == 0:

# Center of table

origin_x = 633

origin_y = 332

# Pick a sponge at random

chosenFood = food[random.randint(0, len(food)-1)]

sx, sy = chosenFood

# Calculate the vector for the food

x = sx - origin_x

y = sy - origin_y

# Get the angle we need to move to to align the chosen food

# with the positive y axis

target_angle = computeAngle(x, y, curr_angle) / 2

# Calculate the distance from the center of the food to the center of the table

dist = math.sqrt(x**2 + y**2)

# Return the angle

return target_angle, curr_angle, dist

Team Members

Kristin worked on the charcuterie board setup including servo motor movement, OpenCV, piTFT GUI, Python socket library, robot movement with color sensor, and overall software integration. She also wrote the objective, introduction, overall design, charcuterie board design, robot design, communication design, and future work sections of the report.

Grace worked on the CAD models for the robot shelf, gear assembly, and toothpick holder. She also worked on the inverse kinematics for the arm movement. She also wrote the robot testing, arm design, results and conclusions, and future work sections of the report.