ECE 5725 Thermometer Robot

By Yuetong Liu (yl3426)

Yan Zhang (yz2582)

Demonstration Video

Introduction

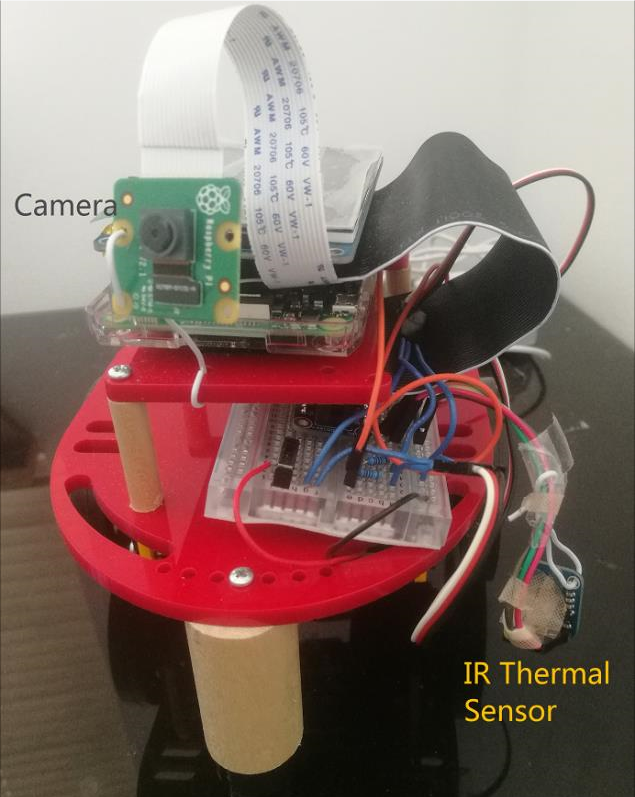

Due to the COVID-19 pandemic, maintaining social distancing and taking body temperature have become critical. However, taking someone's temperature by hand breaks social distancing and exposes the person taking it to infection. We designed a thermometer robot that takes a person's temperature so that direct contact is avoided and social distance is kept during the measurement. A healthcare worker enters an ID, and the robot finds the associated person using face recognition. The robot then takes the temperature and displays it on both the piTFT and the desktop monitor. A temperature above 37.3℃ is shown in red; a temperature below 37.3℃ is shown in green.

Final Product

Project Objective

- Face recognition: The robot can recognize people and find the person whose temperature the healthcare staff want to take.

- Temperature taking: The robot can measure the target's temperature with an IR thermometer and show it on the piTFT.

- Operation: The robot can move autonomously according to face information from the camera.

Design and Testing

Face Recognition

The face recognition part has three stages: face detection and data gathering, data training, and face recognition. A Pi Camera V2 was used to capture the images, and the OpenCV library in Python was used to process them.

For face detection, a Haar cascade classifier was used to determine whether an image contained faces. For each detected face, it returned a rectangle described by its top-left coordinates (x, y), width (w), and height (h). During testing, face detection suffered from high camera latency. To keep the video responsive and improve the user experience, Python threading was used to eliminate, or at least reduce, this latency.
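One common way to cut capture latency is to read frames on a background thread and always hand the detector the newest frame, dropping stale ones. The sketch below assumes that pattern; it is not the team's code. The class name `LatestFrameReader` is hypothetical, and `read` is any callable returning `(ok, frame)`, such as `cv2.VideoCapture(0).read` if the Pi camera is exposed as a V4L2 device.

```python
import threading
import time


class LatestFrameReader:
    """Read frames on a background thread and keep only the newest one.

    `read` is any callable returning (ok, frame), e.g. cv2.VideoCapture(0).read.
    """

    def __init__(self, read):
        self._read = read
        self._lock = threading.Lock()
        self._frame = None
        self._running = False
        self._thread = None

    def start(self):
        self._running = True
        self._thread = threading.Thread(target=self._loop, daemon=True)
        self._thread.start()
        return self

    def _loop(self):
        while self._running:
            ok, frame = self._read()
            if ok:
                with self._lock:
                    self._frame = frame  # overwrite, so stale frames never queue up
            else:
                time.sleep(0.01)  # back off briefly if the camera returned nothing

    def latest(self):
        """Return the most recent frame, or None if none has arrived yet."""
        with self._lock:
            return self._frame

    def stop(self):
        self._running = False
        if self._thread is not None:
            self._thread.join()
```

In the main loop, `frame = reader.latest()` replaces a blocking read, so detection always works on the most recent image instead of waiting on the camera.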

Raspberry Pi Camera Connection

Face Detection

To gather facial data, the detection method above was used, and the faces were cropped from the detected images. An input prompt asked for an ID to associate with the pictures. Once a face was detected, fifty face samples were taken and stored in a dataset.

For data training, the team used the LBPH (Local Binary Patterns Histograms) face recognizer and called recognizer.train from the OpenCV library to bind the faces to their IDs.

For face recognition, a dictionary was created that maps each trained ID to a name and NetID. recognizer.predict in OpenCV was used to recognize faces; it returns the face ID and the associated distance. A confidence value, calculated as confidence = 100 - distance, was shown for each detected face to indicate, as a percentage, how well it matched the predicted person. If the confidence was below the threshold of 20, "unknown" was shown above the face frame as the name. If the detected face was in the trained dataset, its name was shown above the face frame and its confidence beside the frame.
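The labelling rule above can be sketched as a small function. It assumes that `face_id, distance = recognizer.predict(gray_face)` has already been called on an OpenCV LBPH recognizer (created with `cv2.face.LBPHFaceRecognizer_create()` from the opencv-contrib package); `label_face` and the example `names` dictionary are hypothetical.

```python
CONFIDENCE_THRESHOLD = 20  # below this, the face is labelled "unknown" (from the write-up)


def label_face(face_id, distance, names):
    """Map an LBPH (id, distance) prediction to a (label, confidence) pair.

    names: hypothetical dict from trained face ID to NetID, e.g. {1: "yl3426"}.
    """
    confidence = 100 - distance  # confidence = 100 - distance
    if confidence < CONFIDENCE_THRESHOLD or face_id not in names:
        return "unknown", confidence
    return names[face_id], confidence


if __name__ == "__main__":
    names = {1: "yl3426", 2: "yz2582"}
    print(label_face(1, 30.0, names))  # ('yl3426', 70.0)
    print(label_face(2, 85.0, names))  # ('unknown', 15.0)
```

Because LBPH returns a distance (smaller means a closer match), subtracting it from 100 turns better matches into higher confidence; distances above 100 simply give a negative confidence and are labelled unknown.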

Face recognition with photo trained

Face recognition with photo untrained

Robot Control

Servo motors were used to drive the wheels. The robot moved according to the number of detected faces and their position. If more than one face was detected, or the robot was too far away (face width smaller than 290 pixels in this case), the robot moved forward. If the robot was too close (face width greater than 350 pixels), it moved backward. If the face was on the left side of the camera image (x coordinate of the face center smaller than 220), the robot turned left; if it was on the right side (x greater than 380), the robot turned right. If no one was in sight, the robot reversed its previous movement, assuming it had moved too far. The robot stopped once the face frame was at the center of the display. When the target face had been detected at the center of the display for more than 5 frames, the status was marked as stable and the control function stopped updating. After the measurement was complete, the robot moved backward to widen its field of view and prepare to find the next person.
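The movement rules above can be written as a pure decision function, which is easy to test away from the robot. This is a sketch: the command strings, the `decide` and `StabilityTracker` names, and the rule order (distance checked before left/right) are assumptions, while the pixel thresholds and the 5-frame stability rule come from the text.

```python
FAR_WIDTH = 290     # face narrower than this: robot too far, move forward
NEAR_WIDTH = 350    # face wider than this: robot too close, move backward
LEFT_X = 220        # face centre left of this: turn left
RIGHT_X = 380       # face centre right of this: turn right
STABLE_FRAMES = 5   # centred for more than this many frames: stable

OPPOSITE = {"forward": "backward", "backward": "forward",
            "left": "right", "right": "left", "stop": "stop"}


def decide(faces, last_move):
    """Choose a move from the detected face boxes, each an (x, y, w, h) tuple."""
    if not faces:
        # Nobody in sight: assume the last move overshot and undo it.
        return OPPOSITE.get(last_move, "stop")
    if len(faces) > 1:
        return "forward"
    x, _, w, _ = faces[0]
    centre_x = x + w / 2
    if w < FAR_WIDTH:
        return "forward"
    if w > NEAR_WIDTH:
        return "backward"
    if centre_x < LEFT_X:
        return "left"
    if centre_x > RIGHT_X:
        return "right"
    return "stop"


class StabilityTracker:
    """Report stable once "stop" is chosen on more than STABLE_FRAMES consecutive frames."""

    def __init__(self):
        self.centred_frames = 0

    def update(self, move):
        self.centred_frames = self.centred_frames + 1 if move == "stop" else 0
        return self.centred_frames > STABLE_FRAMES
```

Keeping the decision separate from the motor output means the same thresholds can be tuned from recorded face boxes without driving the robot.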

When the team combined the motion control code with the face recognition code, the robot kept turning right even when given a forward or left pivot command. With the help of Professor Skovira, the team found that the problem came from the software PWM signals generated by the RPi.GPIO library: their timing can be disturbed by other activity on the processor, here the face recognition. The team therefore switched to hardware PWM using the PIGPIO library. This worked, except that setting the duty cycle and frequency through PIGPIO did not, because the available frequencies were limited, so the pulse width was set directly instead. After several calibration trials, the team redefined the pulse-width parameters for stop, maximum clockwise speed, and maximum counterclockwise speed, and the robot moved as expected. In the final test, motion control and face recognition worked well simultaneously.
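With pigpio, each servo can be driven by setting its pulse width directly, which matches the pulse-width approach described above. The sketch below is illustrative only: the GPIO pins, the pulse widths (typical of continuous-rotation servos, where about 1500 µs means still), and which wheel spins which way for each move are hypothetical placeholders for the team's calibrated values. `pi` is a `pigpio.pi()` connection, passed in so the mapping can be tested without hardware.

```python
# Hypothetical calibration values in microseconds; real values come from trial runs.
STILL_US = 1500
FULL_CW_US = 1300
FULL_CCW_US = 1700

LEFT_GPIO = 12   # hypothetical pin assignments
RIGHT_GPIO = 13

# The servos are assumed to be mounted mirror-image, so driving straight
# spins them in opposite directions and pivoting spins them the same way.
MOVES = {
    "forward": (FULL_CCW_US, FULL_CW_US),
    "backward": (FULL_CW_US, FULL_CCW_US),
    "left": (FULL_CW_US, FULL_CW_US),
    "right": (FULL_CCW_US, FULL_CCW_US),
    "stop": (STILL_US, STILL_US),
}


def drive(pi, move):
    """Send the pulse widths for `move` to both wheel servos."""
    left_us, right_us = MOVES[move]
    pi.set_servo_pulsewidth(LEFT_GPIO, left_us)
    pi.set_servo_pulsewidth(RIGHT_GPIO, right_us)


def release(pi):
    """A pulse width of 0 switches the servo pulses off entirely."""
    pi.set_servo_pulsewidth(LEFT_GPIO, 0)
    pi.set_servo_pulsewidth(RIGHT_GPIO, 0)
```

On the Pi this would be used as `pi = pigpio.pi()` (with the `pigpiod` daemon running), then `drive(pi, "forward")`, and finally `release(pi)` and `pi.stop()`. Because pigpio times the pulses with DMA rather than in a Python loop, face recognition load on the CPU should not distort them.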

Robot Control by Face Position

Servo motor schematic

Servo motor circuit

IR Thermometer Measurement

To measure body temperature without contact, an IR thermal sensor (GY-AMG8833) was integrated into the robot. The sensor is an 8x8 array of IR thermal sensors, so a single measurement returns 64 temperatures over a 2D grid. Although the sensor can detect a person up to 7 meters away, the absolute temperature it reports depends strongly on distance: when a forehead was measured at about 0.5 meters from the sensor, the reading was only around 32℃. A distance of about 1 centimeter appeared to give reliable data. Because this reliable distance is so short, forehead measurement was abandoned and the wrist was measured instead. In one test, the IR thermal sensor read 36.2℃ while a standard medical thermometer read 36.4℃.

Four pins needed to be connected:

- Sensor VIN to the 3.3V pin on the Pi, as power

- Sensor GND to the ground on the protoboard

- Sensor SCL to the SCL pin on the Pi

- Sensor SDA to the SDA pin on the Pi

To present the measurement intuitively, a temperature color contour was calculated and displayed. The contour is a 30x30 square, bicubic-interpolated from the 8x8 measured array. Low temperatures are displayed in blue and higher ones in red, with green, yellow, and orange in between.
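The write-up does not say which bicubic routine was used, so the following is a dependency-free sketch using cubic convolution (the Keys kernel with a = -0.5, also known as Catmull-Rom), plus a piecewise-linear blue-green-yellow-orange-red colour map. The function names and the 20 to 38℃ colour range are hypothetical.

```python
import math

# Colour stops from cold to hot: blue, green, yellow, orange, red (RGB).
STOPS = [(0, 0, 255), (0, 255, 0), (255, 255, 0), (255, 165, 0), (255, 0, 0)]
MIN_C, MAX_C = 20.0, 38.0  # hypothetical temperature range mapped onto the stops


def cubic_weight(t, a=-0.5):
    """Keys cubic-convolution kernel; a = -0.5 gives Catmull-Rom."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0


def bicubic_resize(grid, size=30):
    """Upsample a 2D list (e.g. the 8x8 sensor frame) to size x size.

    Corners are aligned, so output corners equal input corners; edges are clamped.
    """
    rows, cols = len(grid), len(grid[0])

    def at(r, c):
        return grid[min(max(r, 0), rows - 1)][min(max(c, 0), cols - 1)]

    out = []
    for i in range(size):
        y = i * (rows - 1) / (size - 1)
        y0 = math.floor(y)
        row = []
        for j in range(size):
            x = j * (cols - 1) / (size - 1)
            x0 = math.floor(x)
            value = 0.0
            # Weighted sum over the 4x4 neighbourhood around (y, x).
            for m in range(-1, 3):
                wy = cubic_weight(y - (y0 + m))
                for n in range(-1, 3):
                    value += wy * cubic_weight(x - (x0 + n)) * at(y0 + m, x0 + n)
            row.append(value)
        out.append(row)
    return out


def colour_for(temp_c):
    """Linearly blend between the colour stops; clamp outside [MIN_C, MAX_C]."""
    t = (temp_c - MIN_C) / (MAX_C - MIN_C)
    t = min(max(t, 0.0), 1.0) * (len(STOPS) - 1)
    i = min(int(t), len(STOPS) - 2)
    frac = t - i
    lo, hi = STOPS[i], STOPS[i + 1]
    return tuple(round(a + (b - a) * frac) for a, b in zip(lo, hi))
```

Each of the 900 interpolated values would then be passed through `colour_for` and drawn as a small filled rectangle, for example with `pygame.draw.rect`. Cubic interpolation can overshoot slightly near sharp edges, which the clamping in `colour_for` absorbs.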

IR thermometer schematic

Temperature Colour Contour

The maximum temperature reported by the sensor was taken as the person's current temperature. Since the ambient temperature is generally around 22℃, recording starts only when this maximum exceeds 35℃. Each measurement takes 5 seconds and records 20 to 30 readings. The final temperature is the average of the recorded readings.
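The measurement rule can be sketched as follows. `read_frame` is any callable returning the 8x8 frame as a list of rows; the clock and sleep functions are injectable so the logic can be checked without a sensor. The function names and the 0.2 s polling interval are assumptions; the 35℃ trigger and the 5-second window come from the text.

```python
import time

TRIGGER_C = 35.0   # start recording once the hottest pixel exceeds this (from the write-up)
DURATION_S = 5.0   # length of one measurement (from the write-up)


def frame_max(frame):
    """Hottest pixel of an 8x8 frame given as a list of rows in degrees C."""
    return max(max(row) for row in frame)


def measure(read_frame, interval_s=0.2, clock=time.monotonic, sleep=time.sleep):
    """Wait for a warm target, then average the per-frame maxima over DURATION_S.

    interval_s is a hypothetical polling period; 0.2 s gives about 25 readings,
    in line with the 20 to 30 readings described above.
    """
    # Room temperature is about 22 C, so a maximum above 35 C means a wrist is present.
    while frame_max(read_frame()) <= TRIGGER_C:
        sleep(interval_s)
    readings = []
    start = clock()
    while clock() - start < DURATION_S:
        readings.append(frame_max(read_frame()))
        sleep(interval_s)
    return sum(readings) / len(readings)
```

With Adafruit's CircuitPython AMG88xx driver, for instance, `read_frame` could be `lambda: sensor.pixels`, where `sensor = adafruit_amg88xx.AMG88XX(busio.I2C(board.SCL, board.SDA))`; the team's own read-out code is not shown in the write-up.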

Overall Process & Pygame Interface

The whole program was operated and displayed through a pygame interface. The same pygame window was shown both on the laptop screen, as information for the staff, and on the piTFT, as instructions for the patient, but it could only be controlled from the laptop by the staff. This was achieved by setting the laptop screen as the primary framebuffer and the piTFT as the secondary framebuffer in the 99-fbturbo.conf file, and then copying the primary display to the secondary one with the raspi2fb service.

The program started when the start button on the pygame interface was pressed, after which a prompt for the NetID was displayed.

The staff could click on the textbox and type the NetID of the person to be found. The start of input was detected by using colliderect to check whether the mouse click fell on the textbox rect. The text was recorded character by character from the keyboard with event.unicode, and Backspace and Return were handled specially as delete and confirm. After Enter was pressed with a non-empty input, the camera and face recognition program were activated and “Looking for < netid >” was displayed in pygame.
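The textbox logic can be sketched as an event handler. This is not the team's code: the `TextBox` class is hypothetical, it uses `Rect.collidepoint` on the click position (an equivalent alternative to the `colliderect` check described above), and it takes the pygame module as a parameter so the logic can be exercised without a display.

```python
from dataclasses import dataclass


@dataclass
class TextBox:
    rect: object        # a pygame.Rect in practice
    text: str = ""
    active: bool = False

    def handle(self, event, pg):
        """Process one pygame event; return the submitted text on Return, else None.

        `pg` is the pygame module, used only for its event-type and key constants.
        """
        if event.type == pg.MOUSEBUTTONDOWN:
            # Typing is enabled only after a click inside the box.
            self.active = self.rect.collidepoint(event.pos)
        elif event.type == pg.KEYDOWN and self.active:
            if event.key == pg.K_RETURN:
                submitted, self.text = self.text, ""
                return submitted  # may be "", which the write-up uses to quit
            if event.key == pg.K_BACKSPACE:
                self.text = self.text[:-1]
            else:
                self.text += event.unicode
        return None
```

In the main loop, each event from `pygame.event.get()` would be passed to `box.handle(event, pygame)`; a non-empty return value starts the search for that NetID.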

Initial Interface

NetID Interface

The camera capture was shown in the top-left corner of the pygame window. Rectangles were drawn around faces, red for the target person and blue for other people, with the recognition confidence shown at the bottom of each rectangle. When the robot had found the person and become stable, “Found < netid >” was displayed instead of “Looking for”.

The camera was then deactivated, the face image froze, and the IR thermometer was activated. “Please put your wrist close to the sensor on your right” was shown as an instruction to the target person. At the same time, the temperature color contour was shown below the camera display, as a more visible signal that the robot had found the person and as feedback on whether the wrist was in the correct place: the contour turns mostly orange or red when the wrist is near the sensor as it should be. The current maximum temperature was also shown in pygame in real time.

After the measurement, the average temperature was displayed and the sensor was deactivated. If the average temperature was below 37.3℃, meaning the person was considered "healthy" in the test, it was shown in green; otherwise it was shown in red, indicating a fever.
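The colour rule reduces to a single comparison. The sketch below makes the boundary case explicit: a reading of exactly 37.3℃ falls on the red side, following "below 37.3℃" above. The names and RGB values are hypothetical.

```python
FEVER_THRESHOLD_C = 37.3
GREEN = (0, 255, 0)
RED = (255, 0, 0)


def result_colour(avg_c):
    """Green below 37.3 C, red at or above it."""
    return GREEN if avg_c < FEVER_THRESHOLD_C else RED


def result_text(avg_c):
    """Format the average temperature to two decimal places."""
    return f"{avg_c:.2f} °C"
```

The text could then be drawn with `font.render(result_text(avg), True, result_colour(avg))` on a `pygame.font.Font`.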

Asking For Wrist Interface

Measuring Interface

Temperature in the normal range

Temperature above the threshold

(measured using hot water)

After the robot had moved back to its original place, the NetID textbox was shown again to ask for the next person to measure. The program can keep running for as many people as the robot has been trained to recognize. It can be quit by pressing the quit button in pygame while the robot is moving, or by pressing Enter with an empty input when a new NetID is requested.
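The overall flow is a loop through a few stages, which can be summarized as a small state machine. The stage and event names below are hypothetical labels for the steps described above, not identifiers from the project.

```python
from enum import Enum, auto


class Stage(Enum):
    ASK_NETID = auto()
    SEARCHING = auto()
    MEASURING = auto()
    RETURNING = auto()
    QUIT = auto()


# (current stage, event) -> next stage. Event names are hypothetical.
TRANSITIONS = {
    (Stage.ASK_NETID, "netid_entered"): Stage.SEARCHING,
    (Stage.ASK_NETID, "empty_enter"): Stage.QUIT,
    (Stage.SEARCHING, "target_stable"): Stage.MEASURING,
    (Stage.SEARCHING, "quit_button"): Stage.QUIT,
    (Stage.MEASURING, "measurement_done"): Stage.RETURNING,
    (Stage.RETURNING, "back_home"): Stage.ASK_NETID,
    (Stage.RETURNING, "quit_button"): Stage.QUIT,
}


def step(stage, event):
    """Return the next stage; events that do not apply leave the stage unchanged."""
    return TRANSITIONS.get((stage, event), stage)
```

Quitting is accepted only while the robot is moving or when a NetID is requested, as described above; any other event simply leaves the current stage in place.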

Result

The listed objectives were generally achieved in this project. In testing, the thermometer robot received commands remotely and found the designated person by the NetID entered. The robot autonomously moved and changed orientation following the position of the designated person's face, and stayed stable after finding the person. When the robot was close enough, it reminded the person to put their wrist close to the IR sensor and measured the temperature for five seconds. One teammate's temperature was 36.29°C and was shown in green. The robot then moved back and got ready for the next measurement.

Conclusion

Overall, the thermometer robot met all the objectives listed above. As the demo video shows, the robot moves smoothly, performs face recognition reliably, and measures temperature as expected. Although the IR thermal sensor showed a small error compared with the thermometer gun, it is almost negligible, since some discrepancy between different thermometers is inevitable.

During testing, we found that software-generated PWM signals were unreliable, especially when complex programs were running and slow servo speeds were required. Hardware PWM is a good substitute.

Future Work

In the future, the project could be improved with more functions:

- Measured data could be saved to a file and uploaded as traceable records, making the data comparable over time and more useful to health systems.

- When the robot detects a random face, it could approach the person and request a temperature measurement, serving as a spot check in crowded places.

Work Distribution

Yuetong Liu

yl3426@cornell.edu

Designed hardware architecture

Coded the robot control

Coded the IR thermometer function

Yan Zhang

yz2582@cornell.edu

Designed hardware architecture

Coded the face recognition

Designed the Pygame Interface
