Voice-controlled Speakers

Jide Nwosu and Ben Francis

Introduction

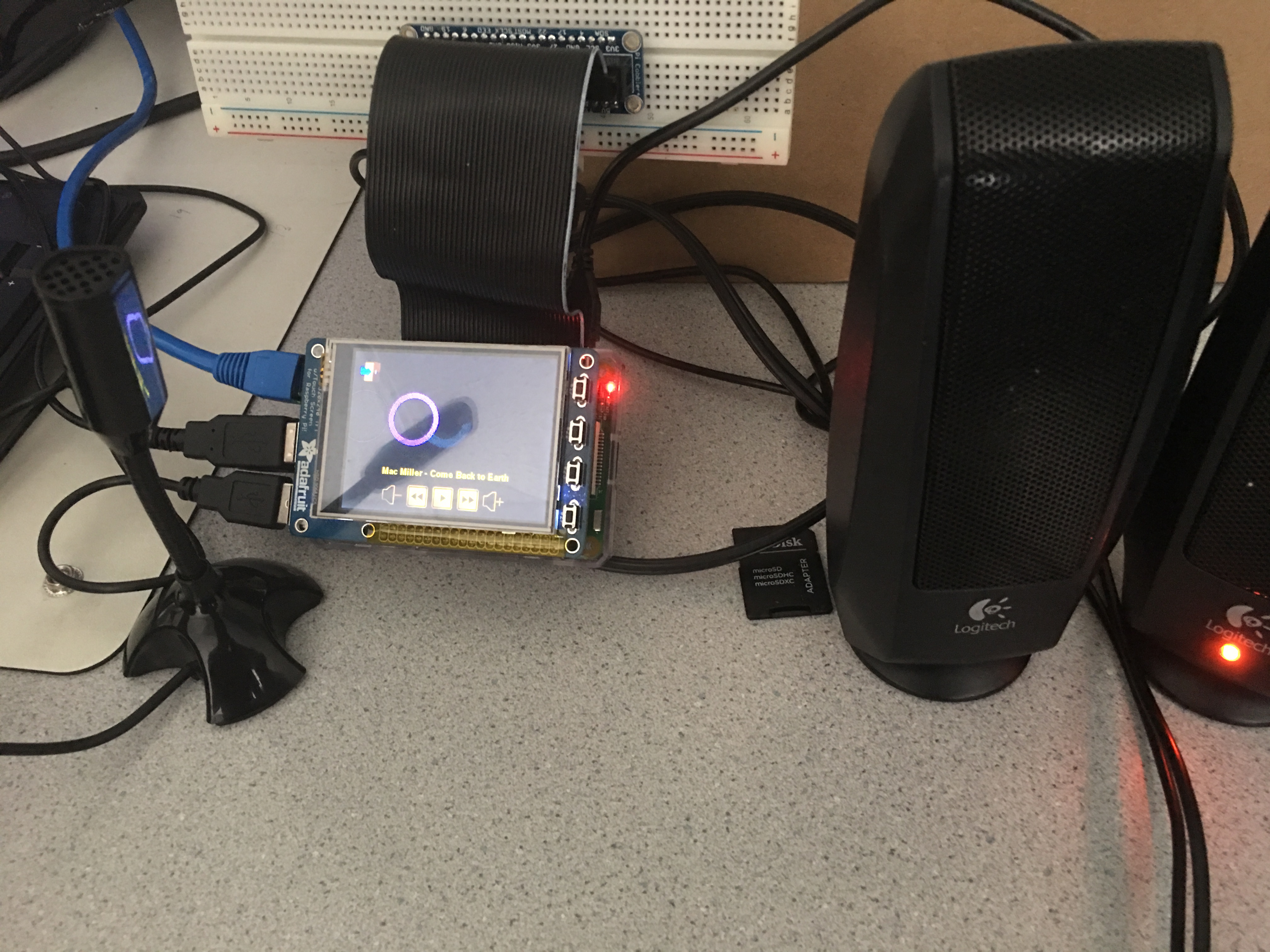

We created an embedded system to control the playback of music streamed from Spotify. Our system can stream music from any Spotify album and play it through our speakers. Playback can be controlled through both the PiTFT touchscreen and a microphone: the user can play or pause, adjust the volume, and skip to the next or previous track in the album. Having two methods of control adds to the capabilities of the system and gives the user more flexibility.

Objective

Our objective was to create a speaker that could be controlled both through the PiTFT's touchscreen and through a voice-recognition application configured to recognize a small set of commands. We wanted every command to take effect seamlessly, so that the user could easily control the speaker by voice or by touch. We also aimed to include visuals that respond to user input, improve the display's aesthetics, and show the user which song is currently playing.

Design and Testing

We started this project with the simplest task: designing the buttons displayed on the screen. We created a Python script named spotify_interface.py and began building our display using pygame. We took the button icons from a website that offers free icon downloads and credited the icon designer at the top of our code. We chose the icon size that looked best on our screen, then arranged the buttons in the standard layout: the play button in the center, the next and previous buttons on either side of it, and the volume control buttons at the far edges. Eventually, we also added an on-screen exit button that serves the same purpose as the physical quit button we used during testing: killing all running processes. Finally, we implemented state logic that tracks, based on user input, whether the program is in the playing or paused state, and displays the pause button or the play button accordingly.
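The button layout and play/pause logic described above can be sketched as a small hit test that does not depend on pygame. This is a minimal sketch, not the original implementation: it assumes 32 x 32-pixel icons placed at the coordinates used in the code appendix, and the names `BUTTONS`, `hit_test`, and `icon_for` are hypothetical.

```python
# Minimal sketch: map a touch position on the 320x240 PiTFT to a button action.
# Assumption: 32x32-pixel icons at the appendix's coordinates; the quit button
# is treated as a 60x60 region in the top-left corner, as in the appendix.

ICON = 32  # assumed icon size in pixels

# Hypothetical table: action name -> (left, top, width, height)
BUTTONS = {
    "volume_down": (64, 200, ICON, ICON),
    "previous": (104, 200, ICON, ICON),
    "play_pause": (144, 200, ICON, ICON),
    "next": (184, 200, ICON, ICON),
    "volume_up": (224, 200, ICON, ICON),
    "quit": (0, 0, 60, 60),
}


def hit_test(pos):
    """Return the action whose rectangle strictly contains pos, or None."""
    x, y = pos
    for action, (left, top, width, height) in BUTTONS.items():
        if left < x < left + width and top < y < top + height:
            return action
    return None


def icon_for(playing):
    """While music plays, show the pause icon; while paused, show the play icon."""
    return "pause" if playing else "play"


# Usage: a tap in the middle of the center icon toggles playback.
playing = False
if hit_test((160, 216)) == "play_pause":
    playing = not playing
```

Keeping the play state as a bool avoids the `~playstate` trick in the appendix, where 0 and -1 stand for paused and playing.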

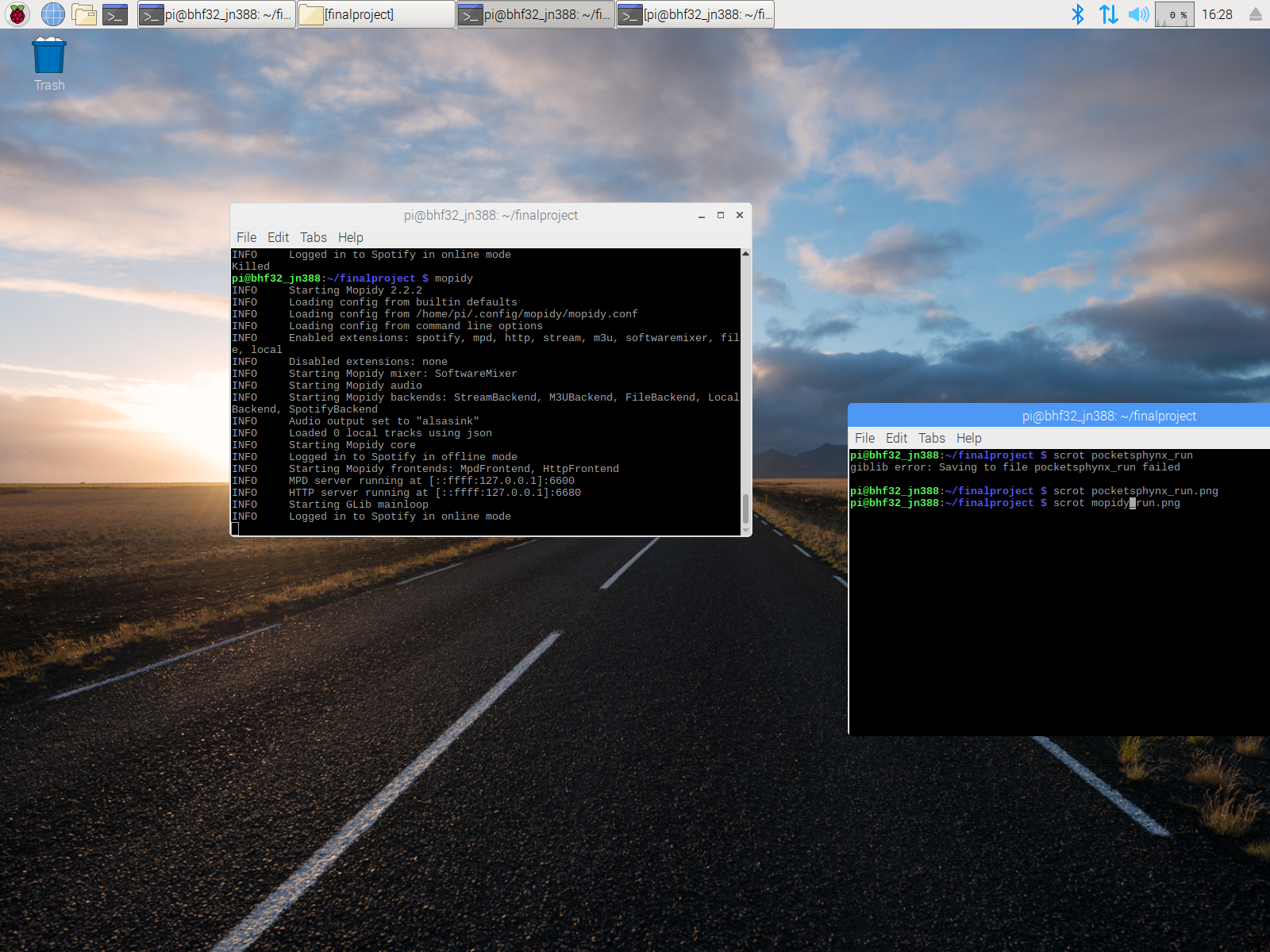

Next, we added the music streaming software to our project. We decided that Mopidy was our best option. Mopidy runs a server that connects to our Spotify account and gives us access to any song on Spotify. We used the mpc command-line client to control playback and to add songs to the playlist. However, this setup had limitations: we could not add user-created Spotify playlists, so we could not simply browse through all of our saved songs. We found that the most efficient way to add music was to add a full album to the playlist and, if necessary, delete any unwanted songs from it. Once we understood how Mopidy and mpc worked, we added code to our Python script that starts Mopidy in the background from the terminal, then issues mpc commands that clear the playlist and add a predetermined album. We then added logic that sends mpc playback commands based on user input, either through touch or voice. Finally, we made sure to quit Mopidy when the Python script ends so that duplicate servers would not be running the next time we ran the code.
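The mpc calls described above can be collected into one table, so that the touch and voice handlers do not duplicate `subprocess` calls. This is a minimal sketch: the argument lists are the ones used in the code appendix, while `MPC_ARGS`, `run_mpc`, and `add_album` are hypothetical names. It uses `subprocess.run`, which waits for mpc to exit; the appendix's `subprocess.Popen` calls return immediately instead, so with this version an `add` would be finished before the playlist is listed.

```python
import subprocess

# Hypothetical table of mpc argument lists, copied from the appendix's calls.
MPC_ARGS = {
    "clear": ["mpc", "clear", "-q"],
    "play": ["mpc", "play", "-q"],
    "pause": ["mpc", "pause", "-q"],
    "next": ["mpc", "next", "-q"],
    "previous": ["mpc", "prev", "-q"],
    "volume_up": ["mpc", "volume", "+10"],
    "volume_down": ["mpc", "volume", "-10"],
}


def run_mpc(action, runner=subprocess.run):
    """Send one command to the Mopidy server through mpc and wait for it to finish.

    `runner` is injectable so the mapping can be checked without mpc installed.
    """
    return runner(MPC_ARGS[action], stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)


def add_album(uri, runner=subprocess.run):
    """Append a whole Spotify album (a "spotify:album:<id>" URI) to the playlist."""
    return runner(["mpc", "add", uri], stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
```

On the Pi, `run_mpc("next")` would run `mpc next -q` against the running Mopidy server.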

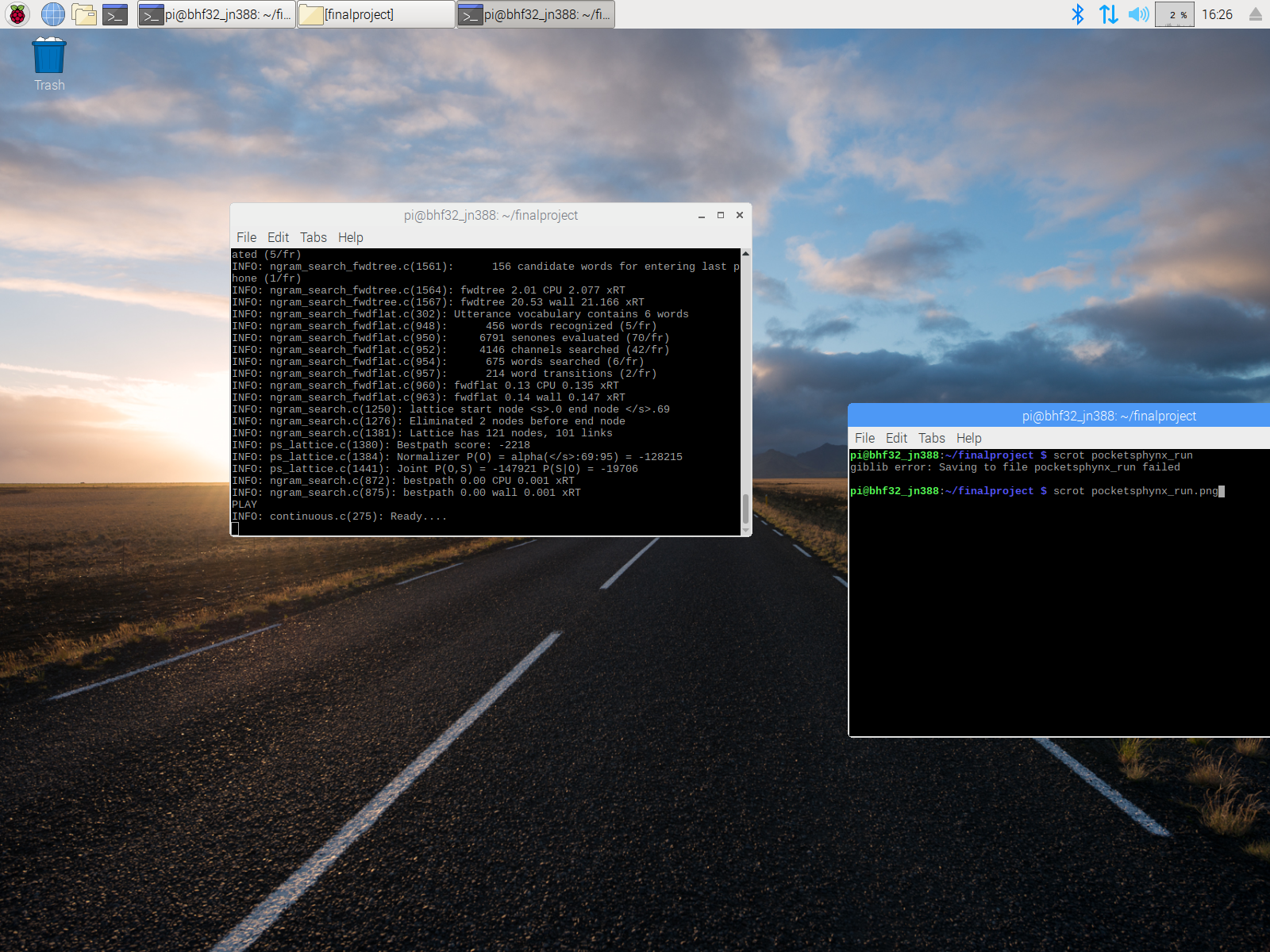

Once music streaming was working, we moved on to the voice-recognition application. Professor Skovira suggested PocketSphinx, a lightweight speech-recognition engine from Carnegie Mellon University that is tuned for handheld and mobile devices. We followed a PocketSphinx tutorial that explained what to download and which packages needed to be installed. Unfortunately, on the advice of one of the tutorials, we updated our Raspberry Pi, which made the touchscreen interface on the PiTFT inaccurate and inconsistent. Once we realized this, we reverted to a previous backup and redid the installations without updating the Raspberry Pi. With everything properly set up, we could turn to actually using PocketSphinx.

First, we needed a new dictionary and language model against which PocketSphinx would match our speech. We created a dictionary consisting only of the terms “PLAY”, “PAUSE”, “NEXT”, “PREVIOUS”, “VOLUME UP”, and “VOLUME DOWN” and set it as PocketSphinx's base dictionary, so that PocketSphinx would listen only for those words. Next, we had to work out how to call PocketSphinx from our Python script. To run the correct process, we had to cd into the right PocketSphinx folder and call the process from there, and we also had to run ldconfig to configure the libraries each time. We decided it would be most efficient to create a bash script named voice_commands.sh that runs this configuration step, cds into the right folder, and starts the recognizer. Our Python script calls this bash script in the background when it starts.
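The background launch of voice_commands.sh can also be done from Python without a shell `&`. This is a minimal sketch, assuming the recognizer prints recognized words to standard output (the appendix relies on this when it redirects the script's output to commands.txt); `start_recognizer` is a hypothetical helper. Passing `start_new_session=True` (POSIX only) puts the bash script and the recognizer it launches into their own process group, which the caller could later signal as a unit with `os.killpg`. If the script is started through sudo, as in the appendix, signalling it still requires root privileges.

```python
import subprocess


def start_recognizer(cmd, log_path):
    """Start cmd in the background with its stdout written to log_path.

    The child runs in a new session (and therefore a new process group), so the
    caller can later stop it and any processes it spawned with os.killpg(proc.pid, sig).
    """
    with open(log_path, "w") as log:
        # Popen duplicates the file descriptor into the child, so the parent's
        # copy can be closed as soon as the child has started.
        proc = subprocess.Popen(
            cmd,
            stdout=log,
            stderr=subprocess.DEVNULL,
            start_new_session=True,
        )
    return proc


# Hypothetical usage on the Pi (not run here):
# proc = start_recognizer(["bash", "voice_commands.sh"], "commands.txt")
```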

Next, we worked out how to pass the results of PocketSphinx's voice recognition into our Python script. We found that an efficient approach was to redirect the application's output to a temporary text file, then parse the file to get the most recently recognized word and compare it with our possible commands. We used rstrip() to remove trailing characters, such as spaces and line breaks, so that we could accurately compare just the recognized word with our list of commands. When a recognized word matched one of our actions, the corresponding command was sent to the Mopidy server through an mpc call.
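The parsing step above can be sketched as a pure function. This is a minimal sketch rather than the appendix code: it assumes one recognized phrase per line of commands.txt, takes its command set from the dictionary described earlier, and keeps count of how many lines have already been handled, so the same word said twice in a row (for example “NEXT”) counts as two commands. `read_commands` and `latest_command` are hypothetical names.

```python
import os

# Command set from the dictionary described above.
COMMANDS = {"PLAY", "PAUSE", "NEXT", "PREVIOUS", "VOLUME UP", "VOLUME DOWN"}


def read_commands(path):
    """Return the lines the recognizer has written so far (empty if no file yet)."""
    if not os.path.exists(path):
        return []
    with open(path, "r") as f:
        return f.readlines()


def latest_command(lines, seen):
    """Return (command or None, number of lines now handled).

    Only a line added since the last call is considered; trailing spaces and
    line breaks are stripped before matching, as rstrip() does in the appendix.
    """
    if len(lines) <= seen:
        return None, len(lines)
    word = lines[-1].rstrip("\r\n ")
    return (word if word in COMMANDS else None), len(lines)
```

In the main loop, `seen` would start at 0 and be updated on every pass with `cmd, seen = latest_command(read_commands("commands.txt"), seen)`.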

Finally, we had to work out how to properly shut down PocketSphinx when the Python script ended. This took quite a while: closing the bash script did not kill the PocketSphinx voice-recognition process, and ending it from htop did not work either. We tried sending a SIGINT signal to interrupt the process, but this also failed to stop it. With help from Professor Skovira, we realized that we needed to send SIGKILL to the specific PocketSphinx process using sudo, and that we could find the process ID with the pgrep command. As with our voice command parsing, we redirected the output of the pgrep call to a text file, then called sudo kill -SIGKILL on the process ID stored in that file. Once we could reliably kill the PocketSphinx process, we were satisfied with our voice-recognition application. We then verified that all of our concurrently running programs worked as desired both on the monitor and on the PiTFT.
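The pgrep-then-kill step can be sketched in Python without the intermediate text file. This is a minimal sketch, assuming pgrep's `-l` output format of one `PID NAME` pair per line; `pids_from_pgrep`, `find_pids`, and `kill_pids` are hypothetical helpers. Because the appendix starts PocketSphinx through sudo, the recognizer runs as root, so `os.kill` here only succeeds if the Python script itself has root privileges; otherwise a `sudo kill` call, as in the appendix, is still needed.

```python
import os
import signal
import subprocess


def pids_from_pgrep(output):
    """Extract the PIDs from `pgrep -l` output ("PID NAME" per line)."""
    pids = []
    for line in output.splitlines():
        fields = line.split()
        if fields and fields[0].isdigit():
            pids.append(int(fields[0]))
    return pids


def find_pids(pattern):
    """Run `pgrep -l pattern` and return the matching PIDs (empty list if none)."""
    result = subprocess.run(
        ["pgrep", "-l", pattern],
        stdout=subprocess.PIPE,
        stderr=subprocess.DEVNULL,
        universal_newlines=True,
    )
    return pids_from_pgrep(result.stdout)


def kill_pids(pids, sig=signal.SIGKILL):
    """Send sig to every PID, skipping processes that have already exited."""
    for pid in pids:
        try:
            os.kill(pid, sig)
        except ProcessLookupError:
            pass
```

Usage would be `kill_pids(find_pids("pocket"))`. Unlike the appendix, which kills only the first PID in the file, this signals every matching process.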

With the main functionality complete, we could add extra features to improve the user experience. We added a line of text above the buttons that shows the currently playing song, based on the output of the mpc playlist command, which returns the full current playlist. We again saved the output of this command to a text file, then parsed the file so that each song name was stored at a position in an array. We kept track of the current song with an index variable and displayed the song name at that index in the array.
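The title handling described above can be sketched as follows. This is a minimal sketch that mirrors the string clean-up in the appendix; `format_title` and `load_titles` are hypothetical names, and the exact layout of each line depends on mpc's output format.

```python
def format_title(playlist_line):
    """Turn one line of `mpc playlist` output into the on-screen label.

    Follows the appendix: drop the line break, replace ';' with ', ', and cut
    everything from the first '(' onward. The final strip() is an addition that
    removes the space left in front of the cut.
    """
    song = playlist_line.rstrip("\r\n").replace(";", ", ")
    paren = song.find("(")
    if paren != -1:
        song = song[:paren]
    return song.strip()


def load_titles(path):
    """Read a saved `mpc playlist` listing into a list of display titles."""
    with open(path, "r") as f:
        return [format_title(line) for line in f]
```

With the titles in a list, the label for the current song is simply `titles[i]`, where `i` is the song index that the next and previous commands increment and decrement.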

We also added visuals to make the PiTFT screen more interesting. Using pygame and a random number generator, we continuously flash shapes of random type, size, and color across the screen. The screen background also sweeps through different colors, and the current song index determines which color channels change. Once the visuals appeared as desired, we were happy with our final implementation.
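The background sweep can be written more compactly than the per-channel counters in the appendix. This sketch reproduces the appendix's behavior under its own hypothetical names (`channel_mask`, `step_channel`, `step_color`): the song index modulo 7 selects which of the red, green, and blue channels move, and each moving channel steps by 1 toward 0 or 255 and reverses direction at either end. The seven cases in the appendix's `i%7` table turn out to be the binary digits of `7 - i % 7`.

```python
def channel_mask(song_index):
    """Which of (r, g, b) sweep for this song; same table as the appendix's i%7 cases."""
    mask = 7 - (song_index % 7)  # 7, 6, ..., 1: never 0, so at least one channel moves
    return bool(mask & 4), bool(mask & 2), bool(mask & 1)


def step_channel(value, rising):
    """Move one 0-255 channel a single step, reversing direction at either end.

    Returns the new (value, rising) pair, like rcounter/rincrcolor in the appendix:
    on the step where a limit is reached, only the direction flips.
    """
    if rising:
        if value < 255:
            return value + 1, True
        return value, False
    if value > 0:
        return value - 1, False
    return value, True


def step_color(rgb, rising, song_index):
    """Advance the background color by one step for the given song."""
    new_rgb, new_rising = [], []
    for value, up, active in zip(rgb, rising, channel_mask(song_index)):
        if active:
            value, up = step_channel(value, up)
        new_rgb.append(value)
        new_rising.append(up)
    return tuple(new_rgb), tuple(new_rising)
```

In the main loop, `step_color` would be called once every `colorcountermax` frames (3 in the appendix), starting from `(254, 254, 254)` with all channels falling.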

Mopidy and PocketSphinx Running

Example of Mopidy Application Run

Example of PocketSphinx Application Run

Results

Demo Video

After finishing the design and testing of our voice-controlled speaker, almost everything performs as planned. We can log in to a Mopidy server using our Spotify account information, and any album available on Spotify in the U.S. can be added to our playlist through the music player client mpc. Input from both the touchscreen and the microphone is received and processed seamlessly, and based on that input, commands are sent through mpc to control music playback. We can play and pause the music, adjust the volume, and skip between the tracks in the playlist. Although we met most of our initial goals for this project, a few problems prevented us from implementing everything outlined in our initial project plan.

The PiTFT display and touchscreen also work mostly as expected. The music control icons appear in the correct positions, and pressing them reliably sends commands to the Mopidy server. We can also easily quit the application by pressing the on-screen quit button. The visuals on the PiTFT are distinctive and add to the overall aesthetics: the background color cycles through different hues depending on the song being played, and shapes blink randomly across the screen to make the display more interesting to look at. One thing we were unable to incorporate was a visual influenced by the music itself, for example, moving lines with a color scheme driven by the frequency content of the song being played. This was mainly because we stream directly from Spotify, which made it difficult to obtain the actual audio data needed for frequency analysis.

The voice command portion of our project works fairly well but is limited by the capabilities of the voice-recognition program we use and by external factors such as background noise. When the user speaks close enough to the microphone and says one of the six commands that PocketSphinx is set up to recognize, a command is sent to Mopidy to play, stop, skip a track, or adjust the volume. There is a delay between saying a command and its completion, caused by the processing the application performs, which can sometimes be slow. Too much background noise or talking also makes the application wait longer, since it assumes someone is still speaking into the microphone. Noise can also cause incorrect recognition, in which the software outputs the wrong word or phrase. In general, without too much ambient noise, PocketSphinx is fairly accurate and efficient.

Conclusion

In conclusion, our voice-controlled speaker project turned out well and meets most of the goals we aimed for. We can control the playback of streamed music through two inputs, the touchscreen and the microphone, and we created an interesting visual on the PiTFT to complement the music. As mentioned above, we could not create the music-driven visual we originally wanted, and PocketSphinx voice recognition did not work as well as we had hoped. Another problem we ran into at the end was getting the program to start right after the Raspberry Pi boots, because Mopidy was unable to start up immediately after the Pi's boot sequence finished. Despite these problems, we're satisfied overall with how our final version works.
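One possible way to address the boot-time problem is to wait until Mopidy is actually accepting connections instead of sleeping for a fixed 8 seconds, as the appendix does. This is a minimal sketch, assuming Mopidy's MPD frontend (which mpc talks to) listens on its default address, 127.0.0.1:6600; `wait_for_port` is a hypothetical helper. An open port does not guarantee that the Spotify login has finished, so the appendix's retry loop for adding albums would still be useful.

```python
import socket
import time


def wait_for_port(host="127.0.0.1", port=6600, timeout=60.0, interval=0.5):
    """Poll until a TCP server accepts connections on host:port.

    Returns True as soon as a connection succeeds, or False once timeout
    seconds have passed without one.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: the server is not up yet.
            time.sleep(interval)
    return False
```

At boot, the script would start Mopidy and then call `wait_for_port()` before issuing its first mpc command, exiting or retrying if it returns False.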

Final System

Final Voice-controlled Speakers System

Bill of Materials

Logitech S120 2.20 Watts (RMS) 2.0 Speaker System (newegg.com): $11.99

eBerry USB Microphone (amazon.com): $7.99

Total Cost: $19.98

Future Work

With more time, this project could be expanded to include additional features and capabilities. One potential expansion would be to create the visual we originally aimed for, based on the frequency content of the music. One way to do this would be to place a second microphone right next to the speaker to capture the audio being played. Frequency analysis could then be performed on that audio data, and the results mapped to a visual on the PiTFT. Another improvement would be to add Bluetooth capability to our system, so that a user could listen to and control the music away from the Raspberry Pi. More buttons could also be added to the PiTFT to give the user further control, for example, rewind and fast-forward buttons. Buttons could also be created to choose specific albums and songs, so that instead of skipping through multiple songs to reach the next album, a user could choose a song directly on the touchscreen.
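The frequency analysis proposed above can be sketched with a plain discrete Fourier transform. This is a minimal sketch under stated assumptions: it expects a list of audio samples already captured from the second microphone (capturing audio is not shown), `band_magnitudes` and `bar_heights` are hypothetical names, and its O(n²) DFT is only meant for short frames. A real implementation would more likely use an FFT library such as NumPy's `numpy.fft.rfft`.

```python
import cmath
import math


def band_magnitudes(samples, n_bands):
    """Average DFT magnitude in n_bands equal-width bands below the Nyquist bin."""
    n = len(samples)
    half = n // 2
    if n_bands < 1 or half < n_bands:
        raise ValueError("need at least one DFT bin per band")
    mags = []
    for k in range(half):
        # k-th DFT coefficient: sum over t of x[t] * e^(-2*pi*i*k*t/n)
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        mags.append(abs(coeff))
    width = half // n_bands
    return [sum(mags[b * width:(b + 1) * width]) / width for b in range(n_bands)]


def bar_heights(bands, max_height=240):
    """Scale band magnitudes to bar heights in pixels (240 = PiTFT height)."""
    peak = max(bands)
    if peak == 0:
        return [0] * len(bands)
    return [int(max_height * b / peak) for b in bands]
```

The resulting heights could drive bars or line colors drawn with pygame each frame, in place of the random shapes.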

Code Appendix

#Main script for voice-controlled speakers
#By Ben Francis and Jide Nwosu, 5/17/19

import pygame # Import pygame graphics library
import os # for OS calls
import random # for random number generation
import time # import time library
from pygame.locals import *
import RPi.GPIO as GPIO # to use Pi GPIO pins
import subprocess # for os subprocess calls

#spotify interface
#Icons made by xnimrodx (https://www.flaticon.com/authors/xnimrodx) from www.flaticon.com,
#licensed under CC BY 3.0 (http://creativecommons.org/licenses/by/3.0/)

subprocess.call("sudo bash fix_touchscreen.sh", shell = True) # calls bash script for touchscreen to work
subprocess.call("mopidy &", shell = True) # start running mopidy in background
os.putenv('SDL_VIDEODRIVER', 'fbcon') # Display on piTFT
os.putenv('SDL_FBDEV', '/dev/fb1')
os.putenv('SDL_MOUSEDRV', 'TSLIB') # Track mouse clicks on piTFT
os.putenv('SDL_MOUSEDEV', '/dev/input/touchscreen')
time.sleep(8) # allow mopidy to finish startup

#clear playlist
subprocess.Popen(["mpc", "clear", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)

#add albums to Mopidy server
#can add as many albums as you want here
#Mac Miller
subprocess.Popen(["mpc", "add", "spotify:album:5wtE5aLX5r7jOosmPhJhhk"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
#Logic
subprocess.Popen(["mpc", "add", "spotify:album:6GeHCNwwqMMUrpxuGTRYcf"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)

#write list of songs in playlist to a text file
subprocess.call("mpc playlist > playlist.txt", shell = True)
with open("playlist.txt", "r") as playlist:
    playlistlines = playlist.readlines()

#check if albums correctly added to playlist
while len(playlistlines) == 0:
    #add albums until they are correctly added to playlist
    #Logic
    subprocess.Popen(["mpc", "add", "spotify:album:6GeHCNwwqMMUrpxuGTRYcf"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    #Mac Miller
    subprocess.Popen(["mpc", "add", "spotify:album:5wtE5aLX5r7jOosmPhJhhk"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    subprocess.call("mpc playlist > playlist.txt", shell = True)
    time.sleep(1)
    with open("playlist.txt", "r") as playlist:
        playlistlines = playlist.readlines()

#start voice recognition software (pocketsphinx_continuous) in background
#write results to text file
subprocess.call("sudo bash voice_commands.sh > commands.txt &", shell = True)
time.sleep(1)

#save process id of pocketsphinx for later
subprocess.call("pgrep -l pocket > pocketgrep.txt", shell = True)

pygame.init() # initialize pygame

#set parameters for pygame display
size = width, height = 320, 240
WHITE = 255, 255, 255
BLACK = 0,0,0
GOLD = 255, 215, 0
font = pygame.font.Font(None, 20)

#setup for piTFT screen
screen = pygame.display.set_mode(size)
screen.fill(BLACK)
pygame.mouse.set_visible(False) # True for monitor False for TFT

#initial positions of elements on screen
init = [144,200] #play
init2 = [144,200] #pause
init3 = [184,200] #forward
init4 = [104,200] #rewind
init5 = [224,200] #volup
init6 = [64,200] #voldown
init7 = [10,15] #quit
circleinit = [160, 110] #circle

#load and set locations of icon images
play = pygame.image.load("/home/pi/finalproject/playbutton.png")
playrect = play.get_rect()
playrect = playrect.move(init)
pause = pygame.image.load("/home/pi/finalproject/pausebutton.png")
pauserect = pause.get_rect()
pauserect = pauserect.move(init2)
forward = pygame.image.load("/home/pi/finalproject/forwardbutton.png")
forwardrect = forward.get_rect()
forwardrect = forwardrect.move(init3)
rewind = pygame.image.load("/home/pi/finalproject/rewindbutton.png")
rewindrect = rewind.get_rect()
rewindrect = rewindrect.move(init4)
volup = pygame.image.load("/home/pi/finalproject/volumeup.png")
voluprect = volup.get_rect()
voluprect = voluprect.move(init5)
voldown = pygame.image.load("/home/pi/finalproject/volumedown.png")
voldownrect = voldown.get_rect()
voldownrect = voldownrect.move(init6)
quitbut = pygame.image.load("/home/pi/finalproject/exit.png")
quitbutrect = quitbut.get_rect()
quitbutrect = quitbutrect.move(init7)

# Combine Icon surfaces with workspace surface
screen.blit(play, playrect)
screen.blit(forward, forwardrect)
screen.blit(rewind, rewindrect)
screen.blit(volup, voluprect)
screen.blit(voldown, voldownrect)
screen.blit(quitbut, quitbutrect)
pygame.display.flip() # display workspace on screen

#initialize state of playback (0 = paused, -1 = playing; toggled with ~playstate)
playstate = 0

GPIO.setmode(GPIO.BCM)
GPIO.setup(17, GPIO.IN, pull_up_down=GPIO.PUD_UP) # physical bail out button

#---------------------------------------------------------------------------------
#initialize variables for background color
#RGB values
rcounter = 254
gcounter = 254
bcounter = 254
colorcounter = 0 #counter for when color should change
#increase/decrease rgb values
rincrcolor = 0
gincrcolor = 0
bincrcolor = 0
#change r, g, and/or b
rchange = 1
gchange = 1
bchange = 1
colorcountermax = 3 #number of iterations between changing color

#initialize variables for moving shapes
shapecounter = 0 #counter for when shapes should move
#randomize parameters of shapes (stroke size, shape, color, position, size)
shapestroke = random.randint(1,15)
shape = random.randint(1,3)
rcolor = random.randint(0,255)
gcolor = random.randint(0,255)
bcolor = random.randint(0,255)
shapecolor = rcolor,gcolor,bcolor
shapexpos = random.randint(0,320)
shapeypos = 100
shapewidth = random.randint(40,80)
pi = 3.14

a = time.time() #start time
b = a #current time
end = 0 #program ended
quitnow = 0 #onscreen quit button pressed?
#voice command given
command = ""
prevcommand = ""
prevlenlines = 0
i = 0 # current song index
subprocess.Popen(["mpc", "volume", "50"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE) #initialize volume to 50% (a signed value such as "-50" would be a relative change)

while ((b - a < 1800) and end == 0): #timeout after 30 minutes
    b = time.time()

    #format song title
    song = playlistlines[i].rstrip("\r\n").replace(";", ", ")
    parenth = song.find("(")
    if parenth != -1:
        song = song[0:parenth]

    #receive and format current voice command
    with open("commands.txt", "r") as text_file2:
        lines = text_file2.readlines()
    lenlines = len(lines)
    if lenlines != 0:
        command = lines[-1]
        command = command.rstrip("\r\n").rstrip(" ")
        #make sure command is new and not a repeat
        if command == prevcommand or prevlenlines == lenlines:
            command = ""
        prevlenlines = lenlines
        prevcommand = command

    color = rcounter, gcounter, bcounter # background color
    time.sleep(.001)

    #voice commands
    if command == "PLAY" and playstate == 0: #play music if music is paused and command is "play"
        playstate = ~playstate
        subprocess.Popen(["mpc", "play", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    elif command == "STOP" and playstate == -1: #pause music if music is playing and command is "stop"
        playstate = ~playstate
        subprocess.Popen(["mpc", "pause", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    elif command == "NEXT" and i < len(playlistlines) - 1: #skip to next track if command is "next"
        i = i + 1
        subprocess.Popen(["mpc", "next", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    elif command == "PREVIOUS" and i > 0: #go back to previous track if command is "previous"
        i = i - 1
        subprocess.Popen(["mpc", "prev", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    elif command == "VOLUME UP": #turn volume up if command is "volume up"
        subprocess.Popen(["mpc", "volume", "+10"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
        #update TFT display
        #flash volume up icon
        screen.fill(color)
        texts = {song :(160, 180)}
        for my_text, text_pos in texts.items():
            text_surface = font.render(my_text, True, GOLD)
            rect = text_surface.get_rect(center=text_pos)
            screen.blit(text_surface, rect)
        if (playstate):
            screen.blit(pause, pauserect) # Combine icon surface with workspace surface
        else:
            screen.blit(play, playrect) # Combine icon surface with workspace surface
        # Combine icon surfaces with workspace surface
        screen.blit(forward, forwardrect)
        screen.blit(rewind, rewindrect)
        screen.blit(voldown, voldownrect)
        screen.blit(quitbut, quitbutrect)
        pygame.display.flip() # display workspace on screen
        time.sleep(0.1)
    elif command == "VOLUME DOWN": #turn volume down if command is "volume down"
        subprocess.Popen(["mpc", "volume", "-10"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
        #update TFT display
        #flash volume down icon
        screen.fill(color)
        texts = {song :(160, 180)}
        for my_text, text_pos in texts.items():
            text_surface = font.render(my_text, True, GOLD)
            rect = text_surface.get_rect(center=text_pos)
            screen.blit(text_surface, rect)
        if (playstate):
            screen.blit(pause, pauserect) # Combine icon surface with workspace surface
        else:
            screen.blit(play, playrect) # Combine icon surface with workspace surface
        # Combine icon surfaces with workspace surface
        screen.blit(forward, forwardrect)
        screen.blit(rewind, rewindrect)
        screen.blit(volup, voluprect)
        screen.blit(quitbut, quitbutrect)
        pygame.display.flip() # display workspace on screen
        time.sleep(0.1)

    for event in pygame.event.get():
        if(event.type == MOUSEBUTTONDOWN):
            pos = pygame.mouse.get_pos()
        elif(event.type == MOUSEBUTTONUP): #touchscreen detects touch
            pos = pygame.mouse.get_pos() # get position of touch on screen
            x,y = pos
            if y > 200 and y < 232:
                if x > 144 and x < 176:
                    playstate = ~playstate
                    if (~playstate): #Pause button pressed
                        subprocess.Popen(["mpc", "pause", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
                    else: #Play button pressed
                        subprocess.Popen(["mpc", "play", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
                elif x > 184 and x < 216 and i < len(playlistlines) - 1: #Forward button pressed
                    i = i + 1
                    subprocess.Popen(["mpc", "next", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
                elif x > 104 and x < 136 and i > 0: #previous track button pressed
                    i = i - 1
                    subprocess.Popen(["mpc", "prev", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
                elif x > 224 and x < 256: #Volume up button pressed
                    subprocess.Popen(["mpc", "volume", "+10"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
                    #update TFT display
                    #flash volume up icon
                    screen.fill(color)
                    texts = {song :(160, 180)}
                    for my_text, text_pos in texts.items():
                        text_surface = font.render(my_text, True, GOLD)
                        rect = text_surface.get_rect(center=text_pos)
                        screen.blit(text_surface, rect)
                    if (playstate):
                        screen.blit(pause, pauserect) # Combine icon surface with workspace surface
                    else:
                        screen.blit(play, playrect) # Combine icon surface with workspace surface
                    # Combine icon surfaces with workspace surface
                    screen.blit(forward, forwardrect)
                    screen.blit(rewind, rewindrect)
                    screen.blit(voldown, voldownrect)
                    screen.blit(quitbut, quitbutrect)
                    pygame.display.flip() # display workspace on screen
                    time.sleep(0.1)
                elif x > 64 and x < 96: #Volume down button pressed
                    subprocess.Popen(["mpc", "volume", "-10"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
                    #update TFT display
                    #flash volume down icon
                    screen.fill(color)
                    texts = {song :(160, 180)}
                    for my_text, text_pos in texts.items():
                        text_surface = font.render(my_text, True, GOLD)
                        rect = text_surface.get_rect(center=text_pos)
                        screen.blit(text_surface, rect)
                    if (playstate):
                        screen.blit(pause, pauserect) # Combine icon surface with workspace surface
                    else:
                        screen.blit(play, playrect) # Combine icon surface with workspace surface
                    # Combine icon surfaces with workspace surface
                    screen.blit(forward, forwardrect)
                    screen.blit(rewind, rewindrect)
                    screen.blit(volup, voluprect)
                    screen.blit(quitbut, quitbutrect)
                    pygame.display.flip() # display workspace on screen
                    time.sleep(0.1)
            elif y > 0 and y < 60:
                if x > 0 and x < 60: # quit button pressed
                    quitnow = 1

    if shapecounter == 20: #after 20 iterations add new shape
        shapestroke = random.randint(1,15)
        shape = random.randint(1,3)
        rcolor = random.randint(0,255)
        gcolor = random.randint(0,255)
        bcolor = random.randint(0,255)
        shapecolor = rcolor,gcolor,bcolor
        shapexpos = random.randint(0,320)
        shapeypos = 100
        shapecounter = 0
        shapewidth = random.randint(40,80)
    else:
        shapecounter = shapecounter + 1

    #update TFT display
    screen.fill(color)
    texts = {song :(160, 180)}
    for my_text, text_pos in texts.items():
        text_surface = font.render(my_text, True, GOLD)
        rect = text_surface.get_rect(center=text_pos)
        screen.blit(text_surface, rect)
    if (playstate):
        screen.blit(pause, pauserect) # Combine icon surface with workspace surface
    else:
        screen.blit(play, playrect) # Combine icon surface with workspace surface
    #draw randomized shape
    if shape == 1:
        pygame.draw.circle(screen, shapecolor, [shapexpos, shapeypos], 40, shapestroke)
    elif shape == 2:
        pygame.draw.rect(screen, shapecolor, [shapexpos, shapeypos, shapewidth, 60], shapestroke)
    elif shape == 3:
        pygame.draw.ellipse(screen, shapecolor, [shapexpos, shapeypos, shapewidth, 60], shapestroke)
    # Combine icon surfaces with workspace surface
    screen.blit(forward, forwardrect)
    screen.blit(rewind, rewindrect)
    screen.blit(volup, voluprect)
    screen.blit(voldown, voldownrect)
    screen.blit(quitbut, quitbutrect)
    pygame.display.flip() # display workspace on screen

    #adjust rgb elements of background being changed based on current song
    if i%7 == 0:
        rchange = 1
        gchange = 1
        bchange = 1
    elif i%7 == 1:
        rchange = 1
        gchange = 1
        bchange = 0
    elif i%7 == 2:
        rchange = 1
        gchange = 0
        bchange = 1
    elif i%7 == 3:
        rchange = 1
        gchange = 0
        bchange = 0
    elif i%7 == 4:
        rchange = 0
        gchange = 1
        bchange = 1
    elif i%7 == 5:
        rchange = 0
        gchange = 1
        bchange = 0
    elif i%7 == 6:
        rchange = 0
        gchange = 0
        bchange = 1

    colorcounter = colorcounter + 1
    if colorcounter == colorcountermax: #change background color
        if rchange:
            if rincrcolor == 0:
                if rcounter > 0:
                    rcounter = rcounter - 1
                else:
                    rincrcolor = 1
            else:
                if rcounter < 255:
                    rcounter = rcounter + 1
                else:
                    rincrcolor = 0
        if gchange:
            if gincrcolor == 0:
                if gcounter > 0:
                    gcounter = gcounter - 1
                else:
                    gincrcolor = 1
            else:
                if gcounter < 255:
                    gcounter = gcounter + 1
                else:
                    gincrcolor = 0
        if bchange:
            if bincrcolor == 0:
                if bcounter > 0:
                    bcounter = bcounter - 1
                else:
                    bincrcolor = 1
            else:
                if bcounter < 255:
                    bcounter = bcounter + 1
                else:
                    bincrcolor = 0
        colorcounter = 0

    if ( not GPIO.input(17) or quitnow ): #physical bailout button pressed or onscreen quit button pressed
        #kill all background processes (mopidy and pocketsphinx) and stop mpc
        subprocess.Popen(["mpc", "stop", "-q"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
        subprocess.call("pkill mopidy", shell = True)
        with open("pocketgrep.txt", "r") as text_file1:
            lines = text_file1.read().split(' ')
        pid = lines[0]
        subprocess.call("sudo kill -SIGKILL " + pid, shell = True)
        #delete temp text files
        os.remove("commands.txt")
        os.remove("pocketgrep.txt")
        end = 1 #program is done

quit()

#!/bin/bash
#Start pocketsphinx software
#By Ben Francis and Jide Nwosu, May 17, 2019

ldconfig # refresh the shared-library cache so the pocketsphinx libraries are found
cd pocketsphinx-5prealpha/src/programs # cd to correct directory
pocketsphinx_continuous -nfft 2048 -samprate 16000 -inmic yes -lm commands.lm -dict commands.dict # start pocketsphinx with correct parameters

#!/bin/bash
#Make sure touchscreen is set up correctly
#By Ben Francis and Jide Nwosu, May 17, 2019

#remove and reload the touchscreen driver module
sudo rmmod stmpe_ts
sudo modprobe stmpe_ts
echo "/dev/input/touchscreen should point to eventn"
sleep 1
ls -l /dev/input/touchscreen

Contributions

Ben: Worked on the code for the main Python script, spotify_interface.py; helped set up the microphone; helped download and install mpc, Mopidy, and PocketSphinx.

Jide: Worked on writing the main Python script, and helped install, troubleshoot, and use Mopidy, mpc, and PocketSphinx. Also maintained backups of the project.

References

Voice recognition:

https://www.alatortsev.com/2018/06/28/speech-processing-on-raspberry-pi-3-b/

http://www.rmnd.net/speech-recognition-on-raspberry-pi-with-sphinx-racket-and-arduino/

http://www.speech.cs.cmu.edu/tools/lmtool-new.html

https://sites.google.com/site/observing/Home/speech-recognition-with-the-raspberry-pi/

Microphone and speaker setup:

https://iotbytes.wordpress.com/connect-configure-and-test-usb-microphone-and-speaker-with-raspberry-pi/

Streaming setup/music playback:

https://github.com/mopidy/mopidy-spotify

https://docs.mopidy.com/en/latest/

https://www.mankier.com/1/mpc

Contact

Benjamin Francis: bhf32@cornell.edu

Jidenna Nwosu: jn388@cornell.edu