Magic Wand

Shuhui Ding (sd784), Yilong Zhong (yz995), May 17, 2017

Introduction

In this project we built a magic wand game system based on the Raspberry Pi. The user is first instructed to say a spell, either “Wingardium Leviosa”, “Stupefy”, or “Lumos”, and to wave the wand in a certain pattern, either “down-left”, “up-left”, or “up-right”. If both the spell and the wand gesture are performed correctly, a video of the corresponding movie scene, in which the leading character casts the spell, is played on the piTFT screen.

Objective

As Harry Potter fans, not all of us have the chance to go to Universal Studios and try out the magic power. This project provides a magic wand game built on the Raspberry Pi that lets muggles practice their spells and wand gestures at home. It is based on two recognition systems: voice recognition and trajectory recognition.

Design

System Overview

The magic wand system combines voice recognition and gesture recognition. For gesture recognition we used the OpenCV image analysis library; for voice recognition we used the Google speech recognition API.

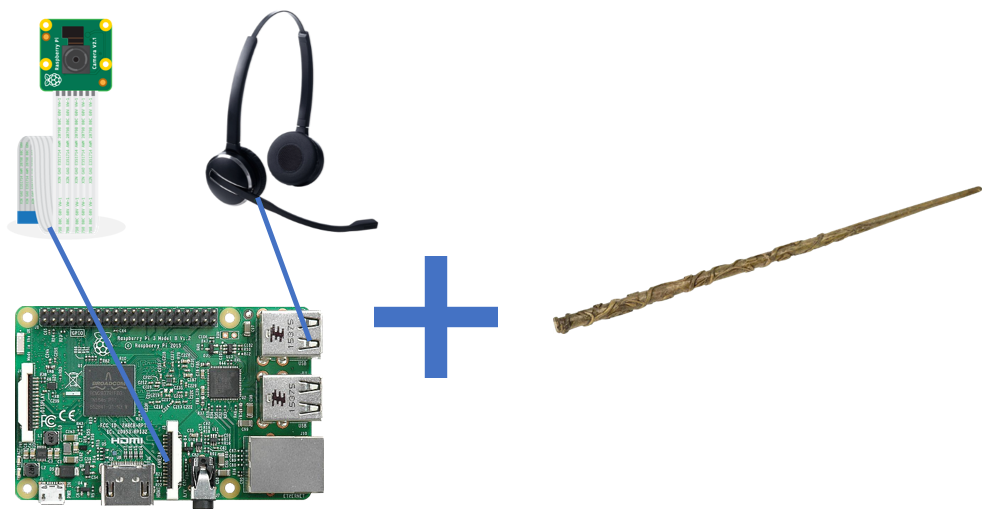

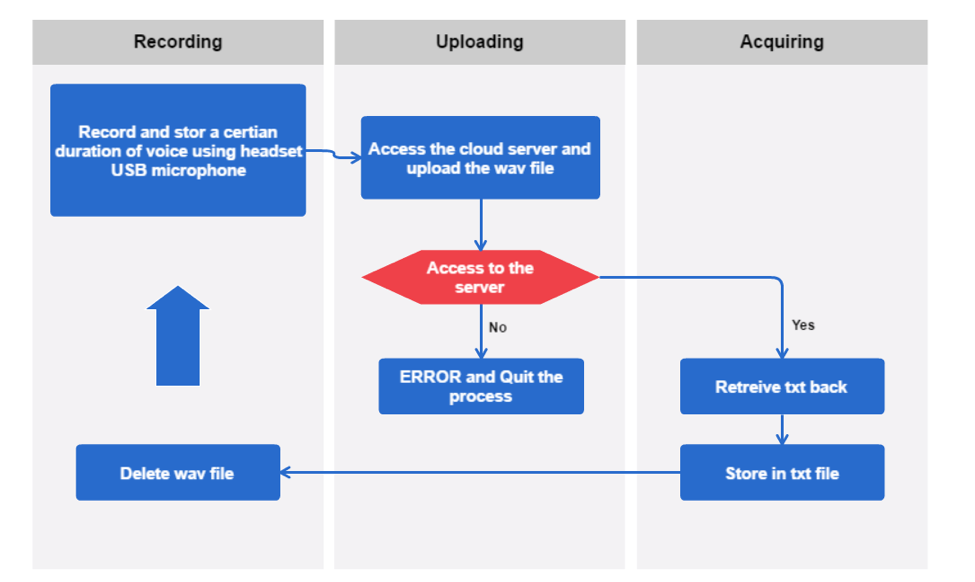

The system consists of four parts: a 3D printed wand, a USB microphone, a NoIR camera, and the Pi. We chose the NoIR camera because it can detect the infrared light emitted from the tip of the wand, and we blocked most of the visible light to minimize the impact of ambient light. We chose a USB microphone because the Raspberry Pi's default sound card supports only audio output, so a USB microphone is needed for audio input; we picked a headset-style one to give the user more mobility when playing the game. The wand has an embedded circuit with the infrared LED at the tip and a switch on the handle. The prices of the four components are listed below.

Google speech recognition API

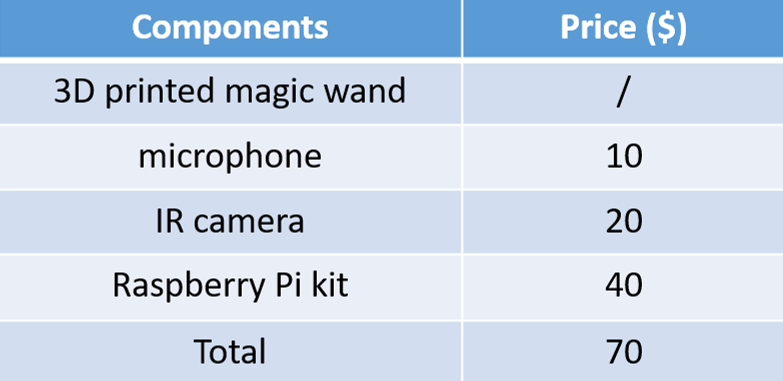

Figure 1. Pricing table of speech API

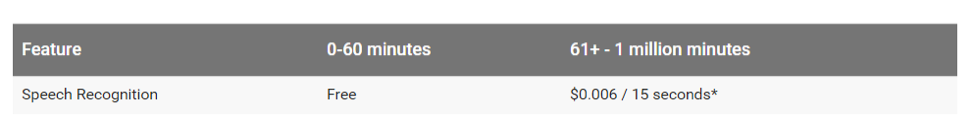

The pricing table is shown in Figure 1. However, Google gives each developer a $300 bonus with a one-month trial, which is enough for testing and implementing the speech recognition. We wrote a bash script that records a wav file, uploads it to the cloud, and retrieves the recognized text, following the steps in the flow chart shown below:

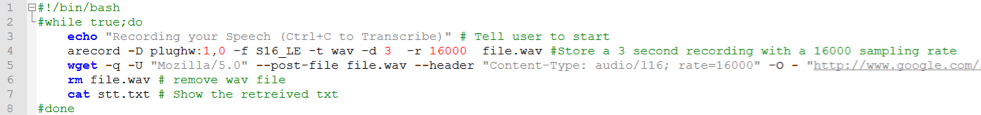

Figure 2. Flow chart of the bash script logic

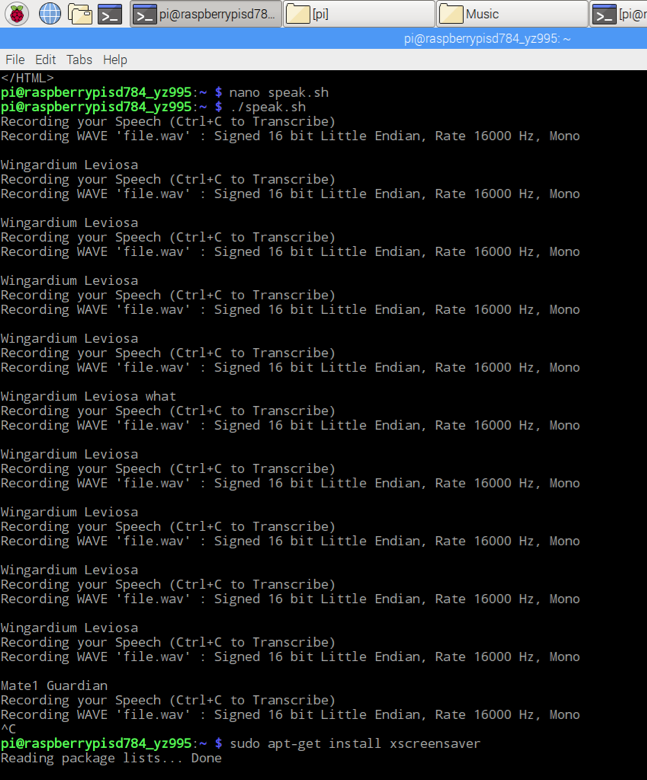

Figure 3. Screenshot of the bash script

We use the arecord command to record the voice input from the USB microphone. The plughw: argument selects the input device. -f sets the sample format of the wav file, S16_LE in this case; -t sets the file type; -d sets the duration of the recording; and -r sets the sampling rate. We set the rate to 16000 Hz because Google decodes the wav file at that sampling rate. The script then uploads file.wav to the cloud at http://www.google.com/speech-api/v2/recognize?lang=en-us&client=chromium&key=YOURKEY, where the user key can be generated from the Google speech API project console. The returned text is stored in a txt file. Finally, the script removes file.wav to save space and prints the content of the txt file on screen.
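The recording and upload parameters described above can be collected in a short Python sketch. This is only an illustration of the arguments, not the bash script from Figure 3: the function names, the device string `plughw:1,0`, and the 3-second default duration are assumptions, and the upload itself is not performed here.

```python
from urllib.parse import urlencode

RECOGNIZE_ENDPOINT = "http://www.google.com/speech-api/v2/recognize"


def build_arecord_command(out_path="file.wav", device="plughw:1,0",
                          seconds=3, rate=16000):
    """Return the arecord argument list for one recording.

    The device string and the duration are assumptions; the sample format
    (S16_LE), the file type (wav) and the 16000 Hz rate follow the text.
    """
    return [
        "arecord",
        "-D", device,        # input device (the USB microphone)
        "-f", "S16_LE",      # signed 16-bit little-endian samples
        "-t", "wav",         # output file type
        "-d", str(seconds),  # recording duration in seconds
        "-r", str(rate),     # sampling rate in Hz
        out_path,
    ]


def build_recognize_url(key):
    """Return the recognition URL with the query string used in the text."""
    query = urlencode({"lang": "en-us", "client": "chromium", "key": key})
    return RECOGNIZE_ENDPOINT + "?" + query
```

On a Pi with arecord installed, the list returned by `build_arecord_command()` could be passed to `subprocess.run` to make the recording; keeping the command as a list avoids shell quoting problems.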

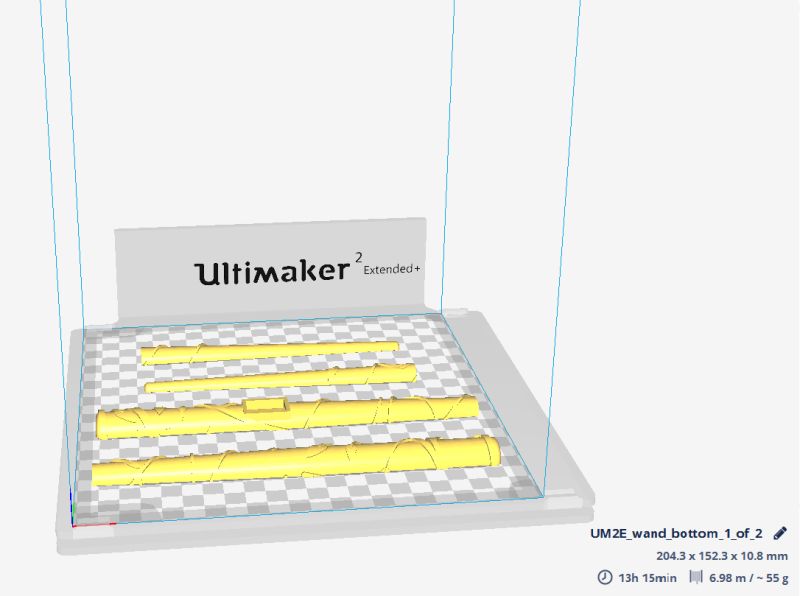

Wand

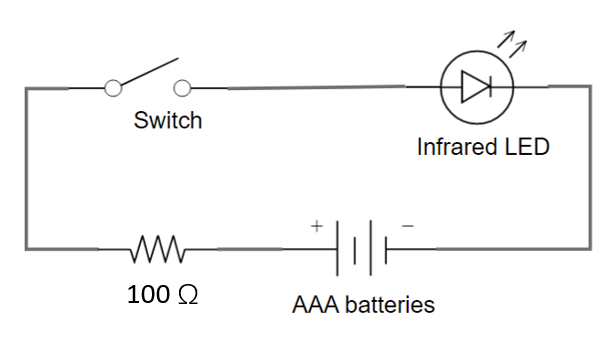

To get a wand with an infrared LED shining from the tip, we 3D printed Hermione Granger's wand from https://www.thingiverse.com/make:305655, a model designed to leave space for batteries and an LED. The circuit diagram and a screenshot of the wand are provided below.

Figure 4. Left: circuit diagram of the wand. Right: 3D model of the wand.

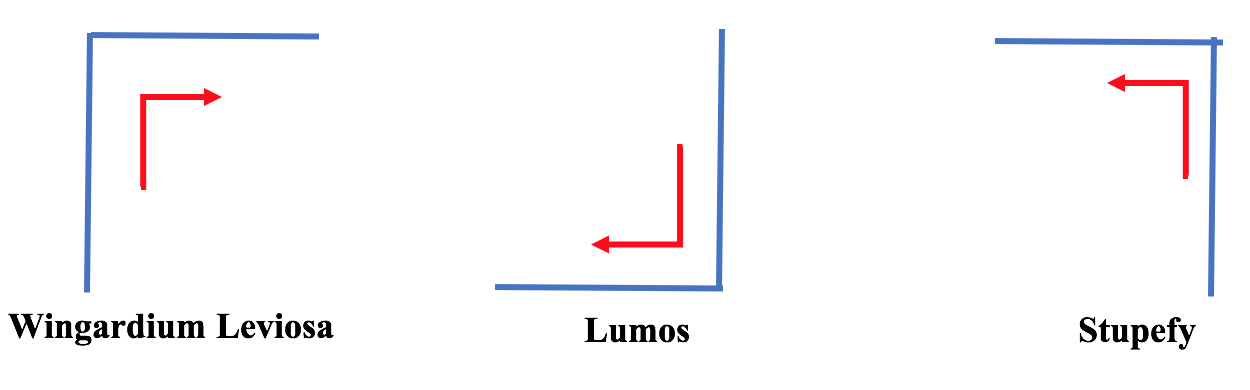

We designed three gesture patterns, each paired with a spell, as shown in Figure 5. The principle is to recognize the turning point of the wand's trajectory. In further development, a trained gesture recognizer could be implemented so that more patterns can be added to the wand system.

Figure 5. Matching of wand gestures and spells
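The turning-point principle can be illustrated with a small Python sketch. It is not the project's recognition script: the function names and the `min_step` and `min_run` values are assumptions, and the wand tip positions are assumed to arrive as (x, y) pixel coordinates with y growing downward, as in image coordinates.

```python
def dominant_direction(dx, dy):
    """Label a displacement by its larger component (image y grows downward)."""
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


def classify_gesture(points, min_step=5, min_run=3):
    """Reduce a list of (x, y) tip positions to a pattern such as "down-left".

    Steps shorter than min_step pixels are treated as jitter, and a stroke
    must last at least min_run steps to count. A change of stroke direction
    marks a turning point. Both thresholds are illustrative assumptions.
    """
    runs = []  # each entry is [direction, number of consecutive steps]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = x1 - x0, y1 - y0
        if abs(dx) < min_step and abs(dy) < min_step:
            continue  # ignore small jitter between frames
        direction = dominant_direction(dx, dy)
        if runs and runs[-1][0] == direction:
            runs[-1][1] += 1
        else:
            runs.append([direction, 1])

    strokes = []
    for direction, count in runs:
        # keep long runs only, and merge neighbours with the same direction
        if count >= min_run and (not strokes or strokes[-1] != direction):
            strokes.append(direction)
    return "-".join(strokes)


# Example: five steps straight down, then five steps to the left.
down_then_left = [(100, 10 * i) for i in range(6)] + \
                 [(100 - 10 * i, 50) for i in range(1, 6)]
print(classify_gesture(down_then_left))
```

A rule-based classifier like this only separates strokes along the image axes, which is enough for the three patterns here; more varied patterns would call for the trained recognizer mentioned above.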

OpenCV image analysis

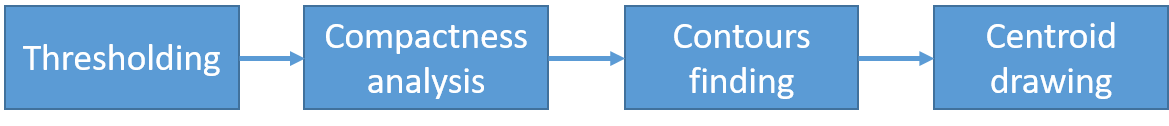

The image analysis process mainly consists of thresholding, compactness analysis, contour finding, and centroid drawing. We also considered using optical flow and Hough circle detection to find the wand tip.
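The thresholding, blob finding, and centroid steps can be sketched in plain Python. This is a simplified stand-in for the OpenCV calls, not the project's code: it works on a grayscale frame given as a non-empty list of rows, groups bright pixels with a flood fill instead of contour finding, and all function names are assumptions.

```python
from collections import deque


def threshold(gray, level):
    """Return a binary image: 1 where a pixel is brighter than level, else 0."""
    return [[1 if px > level else 0 for px in row] for row in gray]


def find_blobs(binary):
    """Group 4-connected foreground pixels into blobs (lists of (x, y))."""
    height, width = len(binary), len(binary[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y in range(height):
        for x in range(width):
            if not binary[y][x] or seen[y][x]:
                continue
            # breadth-first flood fill starting from this unvisited pixel
            queue, blob = deque([(x, y)]), []
            seen[y][x] = True
            while queue:
                cx, cy = queue.popleft()
                blob.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy),
                               (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and binary[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append(blob)
    return blobs


def centroid(blob):
    """Return the mean (x, y) position of the pixels in a blob."""
    n = len(blob)
    return (sum(x for x, _ in blob) / n, sum(y for _, y in blob) / n)


# Example frame: a 2x2 bright patch and one isolated bright pixel.
frame = [[0] * 5 for _ in range(5)]
for px, py in ((1, 1), (2, 1), (1, 2), (2, 2), (4, 4)):
    frame[py][px] = 250
blobs = find_blobs(threshold(frame, 200))
print([centroid(b) for b in blobs])
```

The centroid of the selected blob, tracked from frame to frame, gives the trajectory of the wand tip.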

In thresholding, we set a fixed threshold value. After thresholding, we get a binary image containing several blobs (usually 2 to 5, representing the regions of high light intensity in the original frame). We introduce the geometric measure P=C^2/S, also known as "compactness", where C is the perimeter of the blob and S is its enclosed area, to screen out the long, thin components detected by thresholding. This measure takes its minimum value of 4π when the blob is exactly a circle. Because the wand tip emits a diffuse LED pattern that is close to a circle, we use this measure to set a dynamic range and screen the blobs until the wand tip blob is found.
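A minimal sketch of the compactness test follows. The formula P=C^2/S and its minimum of 4π come from the text; the function names, the reading of "dynamic range" as an upper bound that is widened step by step, and the `start`, `step`, and `limit` factors are assumptions for illustration.

```python
import math

CIRCLE_COMPACTNESS = 4 * math.pi  # minimum of C^2/S, reached by a circle


def compactness(perimeter, area):
    """Return P = C^2 / S for a blob with perimeter C and area S."""
    if area <= 0:
        raise ValueError("area must be positive")
    return perimeter ** 2 / area


def pick_wand_tip(blobs, start=1.2, step=0.2, limit=3.0):
    """Return the index of the most circular blob, or None if none qualifies.

    blobs is a list of (perimeter, area) pairs. The accepted range starts
    just above 4*pi and is widened until a blob falls inside it; the three
    factors are illustrative assumptions, not values from the project.
    """
    factor = start
    while factor <= limit:
        bound = CIRCLE_COMPACTNESS * factor
        inside = [(compactness(p, a), i) for i, (p, a) in enumerate(blobs)
                  if compactness(p, a) <= bound]
        if inside:
            return min(inside)[1]  # the most circular blob within the range
        factor += step
    return None


# Example: a 20x1 streak, a circle of radius 10, and a 4x4 square.
candidates = [(42, 20), (2 * math.pi * 10, math.pi * 100), (16, 16)]
print(pick_wand_tip(candidates))
```

For the circle, C^2/S = (2πr)^2 / (πr^2) = 4π regardless of the radius, so the test does not depend on how far the wand is from the camera.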

We also considered using the Hough circle detection method, as our reference suggests. However, OpenCV's implementation of Hough circle detection relies on the Canny edge operator. The Canny operator is based on the image gradient, so differences in edge gradient can prevent it from producing a closed contour for a blob. This affects the subsequent Hough circle detection and may increase the chance of false detections, and hence of errors in wand tip detection. It turns out that our method of detecting the wand tip works well in a controlled lighting environment.

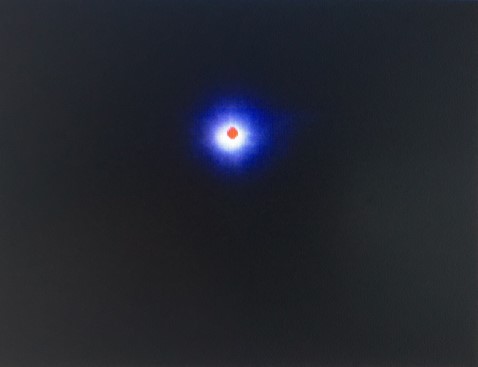

One problem we met in the image analysis is that it does not work well in bright surroundings, because the wand tip is mis-captured. Mis-capture can also be caused by the diffuse LED pattern and by unexpected reflections. We can overcome this problem either by reducing the ambient light or by placing a visible light filter over the camera. A dedicated visible light filter would be the typical choice, but it is usually expensive (over $100, which exceeds our budget). A good way to approximate such a filter is to cover the camera with insulating rubber tape, which blocks the visible light and lets only the infrared through. Although multiple reflections still cause occasional problems with this method, it works much better than no tape. Another solution is to dim the ambient light directly (for example by playing at night), which is even more effective than taping the camera. The following pictures show one good capture and several bad ones.

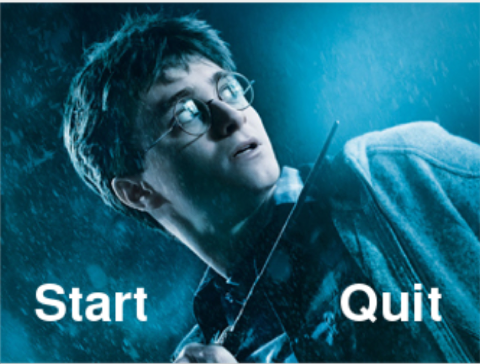

GUI

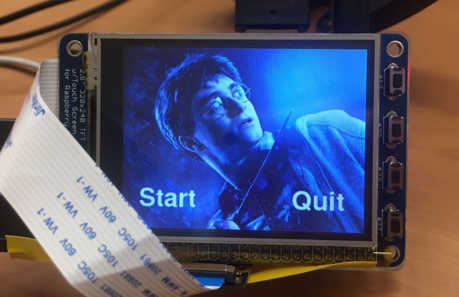

To improve the user experience, we provide a Pygame interface that can run on the piTFT screen. It has a background photo, a “Start” button in the left corner, and a “Quit” button in the right corner. When the Start button is clicked, the system launches the voice recognition and the user can start casting the spell. The user can click the button again if not satisfied with the spell, or after the movie clip has been shown. The “Quit” button is designed to quit the whole game whenever it is pressed.

Testing

We tested the accuracy of the Google speech API by saying the same spell repeatedly. When the spell is pronounced correctly, the accuracy is 100%. Even when the spell is cast with a slight mispronunciation in the middle, an accuracy of around 50% can be achieved. We also tested the accuracy of the gesture recognition, for which the testing environment is crucial. In relatively dim lighting, the camera captures the tip and follows its motion more accurately. In the presence of strong light, the system identifies several points and has difficulty tracking the motion of the tip. A smooth surface also causes problems: when the camera is placed too close to the table, reflected light of similar brightness to the original infrared light can be mistaken for another infrared LED source. Several screenshots of these hazards are shown below. Users might need to adjust the environment to get a better experience of the game.

Feedback from the Google API for “Wingardium Leviosa”: 8 correct pronunciations, followed by mispronunciations in the last three tries

A good example of capturing wand tip

A bad example of capturing wand tip (Diffusion pattern)

A bad example of capturing wand tip (Reflection from somewhere)

Drawings

Breakdown in Ultimaker 3D printer (0.4mm PLA nozzle) & Original 3D model of magic wand

Results

We were able to develop a piTFT screen game that uses a wand with an infrared LED tip and a USB microphone. The whole system performs well in the console window and can run on the piTFT screen. When the Start button is pressed, a user wearing the headset can cast a spell within 3 seconds while waving the wand. When both the gesture pattern and the pronunciation are correct, a movie clip is played on the screen, after which the game returns to the initial interface. When the Quit button is pressed, the game ends.

Screen display of the initial interface and after the correct “Stupefy” spell is cast
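The rule that a clip plays only when both the spell and the gesture are correct can be sketched as below. The function names are assumptions, and the pairing table is a placeholder that simply follows the order in which the Introduction lists the spells and the gestures; the actual pairing is the one shown in Figure 5.

```python
def normalize(text):
    """Lower-case and collapse whitespace so "Lumos " matches "lumos"."""
    return " ".join(text.lower().split())


def is_correct_cast(transcript, gesture, target_spell, pairing):
    """Return True only when the spoken spell and the wand gesture both match.

    transcript is the text returned by the speech recognition, gesture is a
    pattern name such as "up-left", and pairing maps normalized spell names
    to the gesture expected for each spell.
    """
    spoken_ok = normalize(transcript) == normalize(target_spell)
    gesture_ok = pairing.get(normalize(target_spell)) == gesture
    return spoken_ok and gesture_ok


# PLACEHOLDER pairing (assumption): replace with the pairing from Figure 5.
EXAMPLE_PAIRING = {
    "wingardium leviosa": "down-left",
    "stupefy": "up-left",
    "lumos": "up-right",
}

print(is_correct_cast("Stupefy", "up-left", "Stupefy", EXAMPLE_PAIRING))
```

Normalizing the transcript before comparison keeps differences in capitalization or spacing in the returned text from counting as a wrong spell.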

We developed a bash script that implements the voice recognition process by recording sound, uploading it, retrieving the text, and finally storing it. We also developed an OpenCV script that detects and tracks the movement of the infrared wand tip and identifies patterns in the movement. A Pygame GUI is implemented to enhance the user experience.

Conclusion

The following functions are successfully implemented in our design:

1. Voice recognition (voice recording, cloud server upload, text retrieval and storage)

2. Gesture pattern recognition (thresholding, compactness analysis, contour finding, etc.)

3. Pygame interface control

However, there are some flaws in the design that can be improved in the future:

1. We need to manually adjust the lighting environment to have the best gesture recognition

2. The implementation on the piTFT screen is not stable

3. Interaction with users is limited: it takes only 1 minute to learn the 3 spell patterns

In conclusion, we met the goals set for this project and developed an interesting game for Harry Potter fans, but there is still plenty of room to improve the game in the future.

Future work

Building on the completed project, we can improve it in the following ways:

1. Develop an algorithm in OpenCV that detects the ambient lighting and automatically adjusts the brightness level used to detect the LED tip, so that no manual adjustment of the lighting conditions is needed.

2. Implement more spells in the game. This involves recognizing more complicated gestures in a repeatable way.

3. Port the whole game to a larger screen and enable more varied interaction with users.
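As a starting point for the first item, the fixed threshold could be replaced by one derived from the brightness statistics of each frame. The sketch below is only one possible approach, not something implemented in this project: the rule (mean plus k standard deviations, clamped to a range), the function name, and the default values of `k`, `floor`, and `ceiling` are all assumptions.

```python
import statistics


def adaptive_threshold(gray, k=3.0, floor=128, ceiling=250):
    """Pick a threshold level from the brightness statistics of one frame.

    gray is a grayscale frame given as a list of rows of 0-255 values.
    The level is mean + k * standard deviation, clamped to [floor, ceiling]
    so that a dark room does not push it down into the noise and a bright
    room does not push it above the LED's own brightness.
    """
    pixels = [px for row in gray for px in row]
    mean = statistics.mean(pixels)
    spread = statistics.pstdev(pixels)
    return max(floor, min(ceiling, mean + k * spread))


# Example: a uniformly dark frame and a uniformly bright frame.
dark_frame = [[10] * 8 for _ in range(8)]
bright_frame = [[200] * 8 for _ in range(8)]
print(adaptive_threshold(dark_frame), adaptive_threshold(bright_frame))
```

In a brighter room the computed level rises with the background, which is the behaviour the manual lighting adjustment currently has to achieve.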

Contributions

Shuhui Ding

1. Components purchase

2. Google speech recognition API investigation, code composition and testing

3. Logic flow of voice recognition and gesture recognition design

4. Report writing and formatting in Microsoft Word

Yilong Zhong

1. Gesture recognition code composition based on the OpenCV package, and testing

2. Google speech recognition API code modification

3. Report writing and formatting in Dreamweaver

4. Demonstration video editing

All work was done with the kind help and guidance of Professor Joe Skovira and TAs Jacob and Brendan.

Reference

O'Brien, Sean. "Turn on a Lamp with a Gesture-Controlled Harry Potter Wand." MAKE:, n.d. Web. 18 May 2017.

3D magic wand CAD model

Contact

Shuhui Ding sd784@cornell.edu

Yilong Zhong yz995@cornell.edu