Travel Robot

Designed by

Minghan Shi (ms3536), Qianqian Hou (qh98)

Demo Video

Introduction

Our project aims to design a robot that travels to a destination by following voice commands and then returns to the start point automatically, based on the commands it received on the way out.

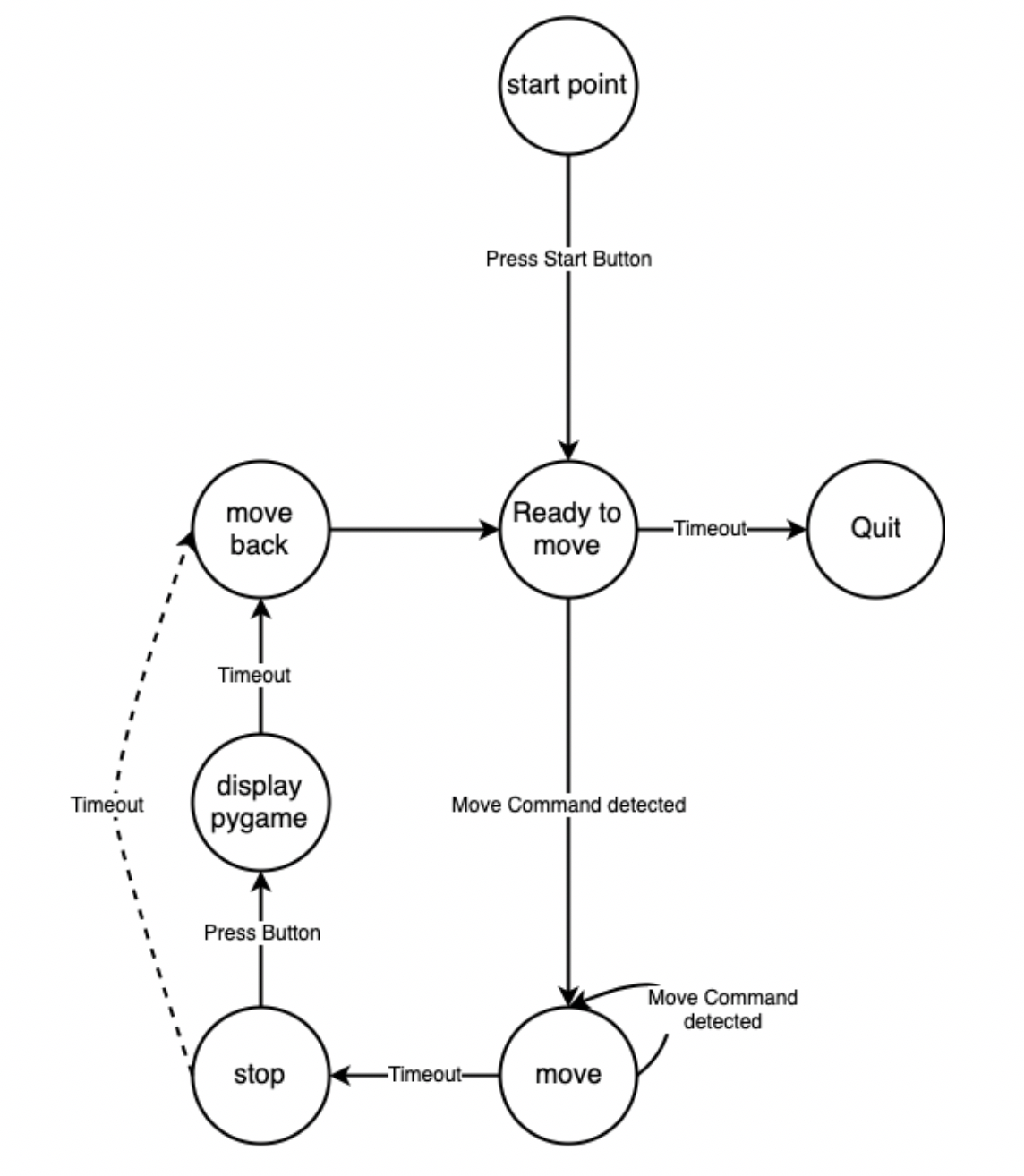

Below is an initial layout of the FSM for our robot. To realize the desired actions, we designed the system with seven states. At the start point, the robot is in the initialization state and waits for commands from the controller. When the controller issues movement commands, the robot records them and moves as commanded. When the robot receives a stop command, or times out while waiting for a movement command, we assume it has arrived at the destination and make it return to the start point automatically. Finally, the travel robot arrives at the start point and can start a new loop.

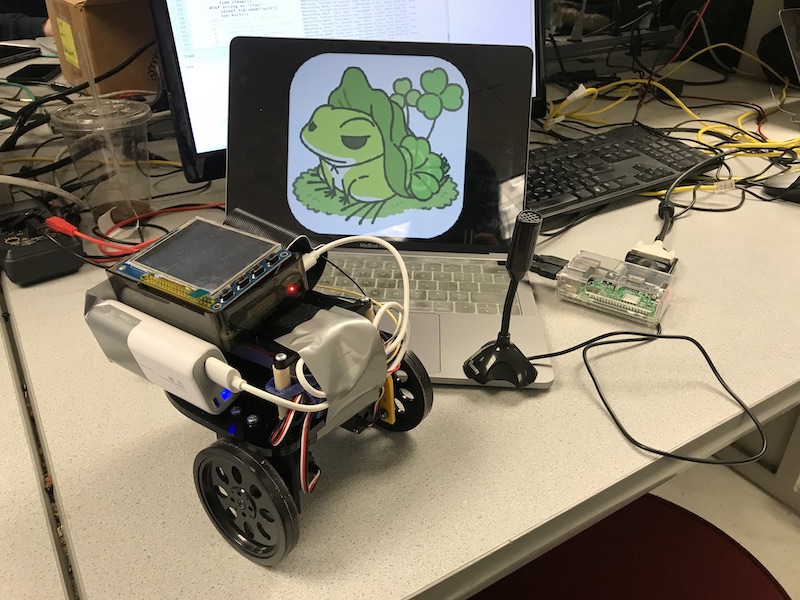

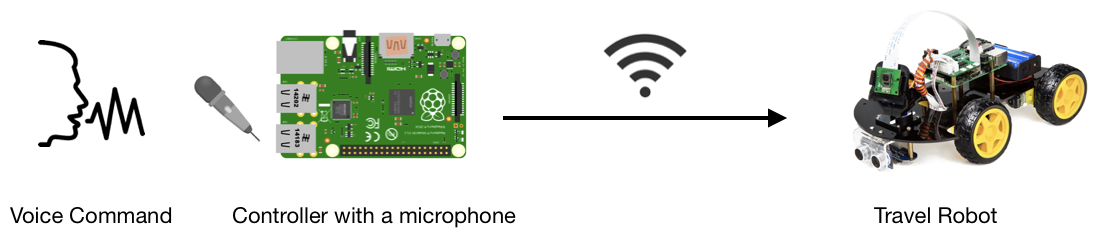

In this project, we used voice commands to control the robot. One Raspberry Pi acts as the controller: it receives the voice signal and converts speech to text. The other Raspberry Pi, fitted with a PiTFT and two servos, serves as the robot itself. The two Raspberry Pis are connected over WiFi.

Objective

Our project aims to design a travel robot that moves according to voice commands from a remote controller and then returns to the start point automatically.

Design and Testing

- Big Picture

- Hardware Design

- Software Design

The hardware of our project consists of two Raspberry Pis, a PiTFT, a USB microphone, and two servo motors. The function of each part is described below.

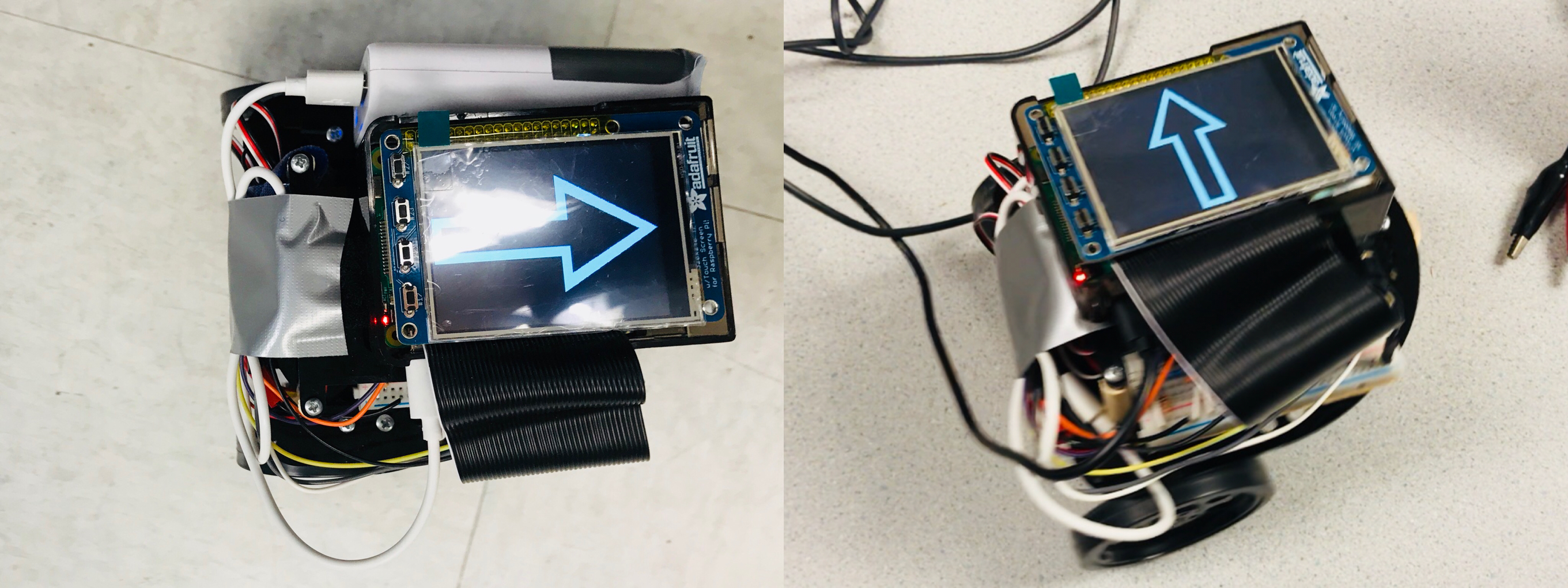

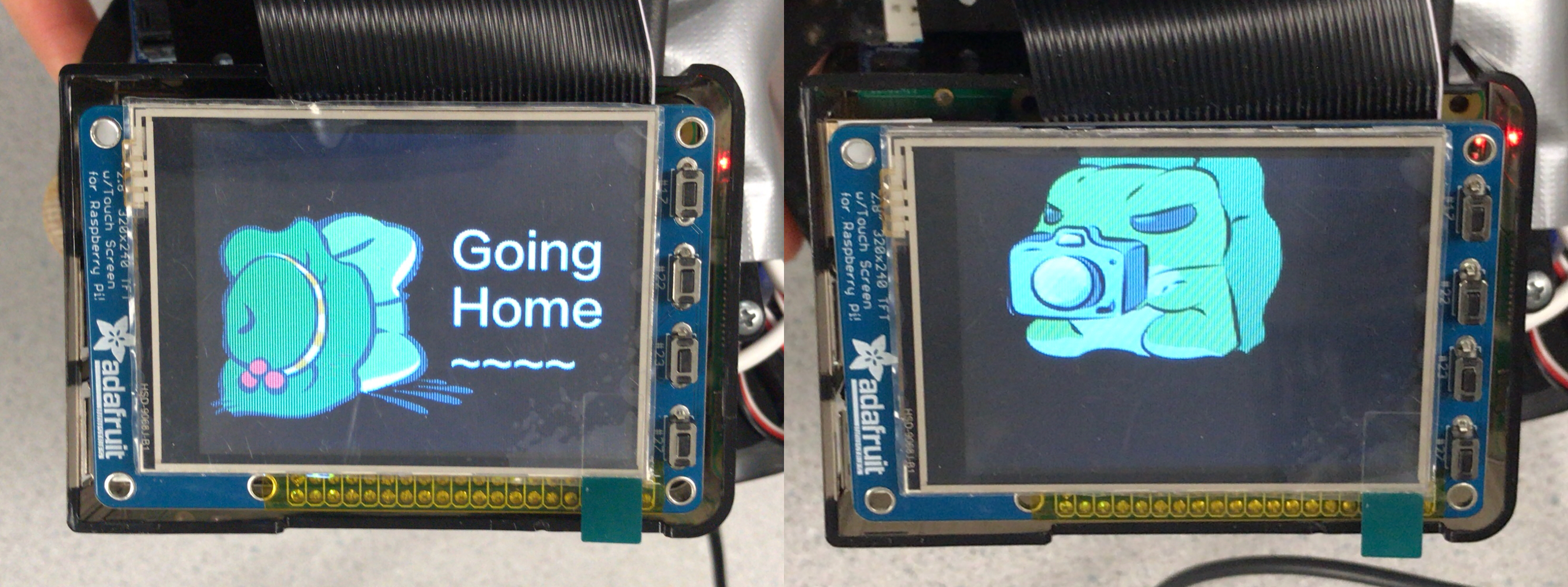

The Raspberry Pi with the USB microphone serves as our controller: it receives the voice signal through the microphone and converts the audio to text. The other Raspberry Pi, which carries the PiTFT, serves as our travel robot. Once the robot receives a command, the PiTFT shows the direction as an arrow. When the robot runs back to the start point, the PiTFT displays the message “Going Home~”.

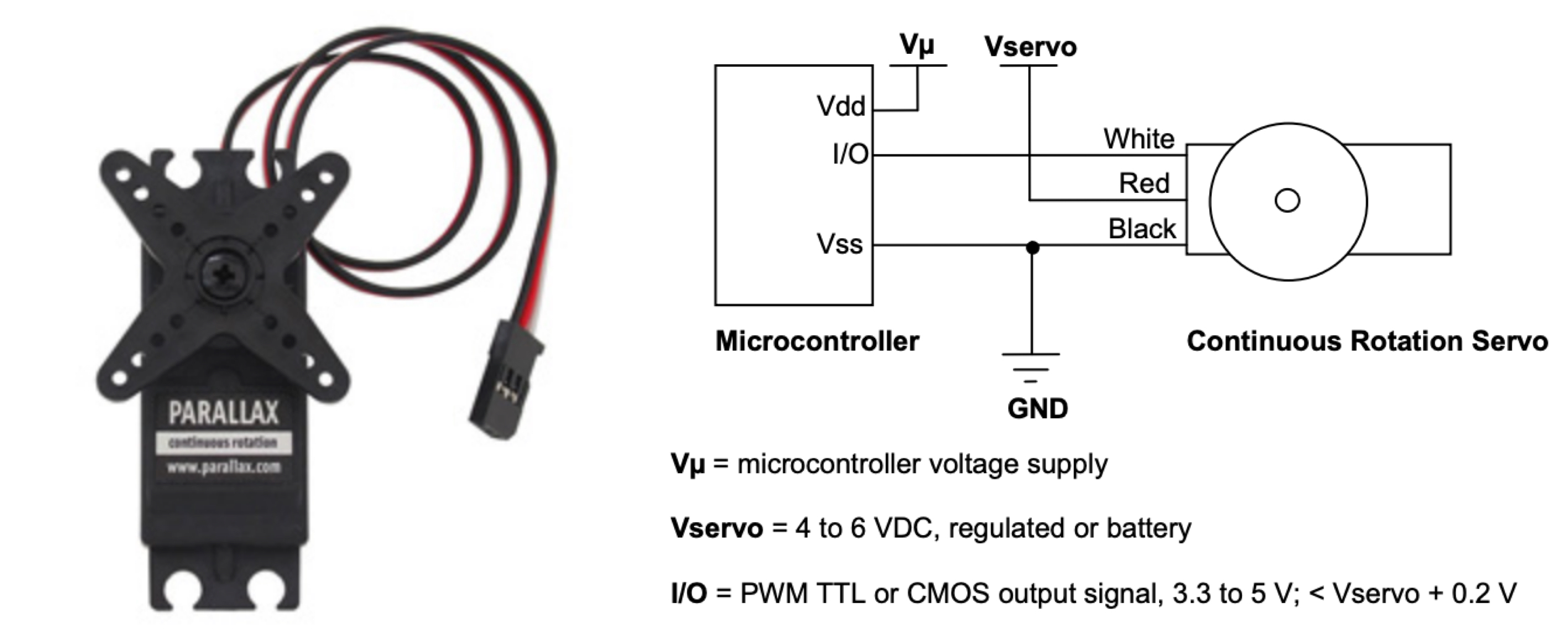

The two Parallax servo motors control the motion of the robot. By driving GPIO pin 5 and pin 19 as software PWM outputs, our robot can rotate, go forward, go backward, and turn left or right by a designed angle. The figure below shows the wiring of a Parallax continuous servo motor. The servo motors are powered by four AA batteries, and we added a switch to better control the servos.
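The PWM arithmetic behind these motions can be sketched as follows. This is a minimal illustration, not the project's code: it assumes the usual Parallax continuous-rotation timing (a 1.5 ms pulse holds the servo still, pulses near 1.3 ms and 1.7 ms drive it in opposite directions, with about 20 ms low between pulses), and the helper name `servo_pwm` is hypothetical. The appendix code uses the same relationship, with a period of 21.5 ms at the neutral pulse width.

```python
def servo_pwm(pulse_ms, low_ms=20.0):
    """Return (frequency_hz, duty_cycle_percent) for a servo pulse train.

    pulse_ms: high time of each pulse in milliseconds
    low_ms:   low time between pulses (assumed to be 20 ms)
    """
    period_ms = low_ms + pulse_ms
    frequency_hz = 1000.0 / period_ms
    duty_percent = 100.0 * pulse_ms / period_ms
    return frequency_hz, duty_percent


if __name__ == "__main__":
    # Neutral pulse and a 0.2 ms offset on either side of it
    for label, pulse in (("neutral", 1.5), ("faster one way", 1.7), ("faster other way", 1.3)):
        freq, duty = servo_pwm(pulse)
        print("%-17s f = %.2f Hz, duty = %.2f %%" % (label, freq, duty))
```

The two returned values are what `ChangeFrequency` and `ChangeDutyCycle` expect in RPi.GPIO; because software PWM timing jitters, the turning durations still need to be tuned by hand on the real robot.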

Control Command

There are three phases for our travel robot:

1. Travel: control the robot to go anywhere you want using four keyword voice commands.

2. Return home: the robot returns to the start point automatically when it detects a timeout or receives a stop command.

3. Celebration: the robot dances (turns around and moves forward and backward randomly) once it has returned home safe and sound.
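The three phases above can be read as a small state machine. The sketch below is only an illustration under assumptions: the phase names follow the list, while the event names `home_reached` and `dance_done` and the function `next_phase` are hypothetical and do not appear in the project code.

```python
TRAVEL = "travel"
RETURN_HOME = "return_home"
CELEBRATION = "celebration"


def next_phase(phase, event):
    """Return the phase that follows `phase` when `event` occurs."""
    if phase == TRAVEL:
        # A stop command or a timeout ends the outbound trip
        if event in ("stop", "timeout"):
            return RETURN_HOME
        return TRAVEL
    if phase == RETURN_HOME:
        # Hypothetical event raised once every recorded move is undone
        return CELEBRATION if event == "home_reached" else RETURN_HOME
    if phase == CELEBRATION:
        # Hypothetical event raised when the dance finishes; a new loop starts
        return TRAVEL if event == "dance_done" else CELEBRATION
    raise ValueError("unknown phase: %r" % (phase,))


if __name__ == "__main__":
    phase = TRAVEL
    for event in ("forward", "left", "stop", "home_reached", "dance_done"):
        phase = next_phase(phase, event)
        print("%-12s -> %s" % (event, phase))
```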

Our first step was to realize the most basic function of our robot: moving forward, backward, left and right in response to keyboard input. Building on the Lab 3 experiment, we checked and adjusted the parameters so that the robot turns by the desired angle for both left and right turns. Next, to realize the “return home” function, we decided to record the input commands in a text file and swap each command for its opposite, for example "forward" for "backward" and "left" for "right". However, when testing this function, we found that the robot returned to somewhere other than the start point. We eventually figured out that we should not simply read the record file from the first row to the last. Instead, we need to read the file in reverse order so that the robot undoes its most recent move first and returns to the start point correctly.
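The return-home rule described here, reverse the order and swap each command for its opposite, can be sketched in a few lines. The names `OPPOSITE` and `return_path` are hypothetical; the appendix code achieves the same effect with a stack.

```python
OPPOSITE = {
    "forward": "backward",
    "backward": "forward",
    "left": "right",
    "right": "left",
}


def return_path(recorded):
    """Return the commands that undo `recorded`, last move first."""
    return [OPPOSITE[command] for command in reversed(recorded)]


if __name__ == "__main__":
    outbound = ["forward", "left", "forward", "right"]
    print(return_path(outbound))
    # prints ['left', 'backward', 'right', 'backward']
```

Swapping the commands without reversing them would give `['backward', 'right', 'backward', 'left']` for the same trip, which is the variant that sent the robot to the wrong place.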

WiFi Connection

At first, we were not sure whether to use WiFi or Bluetooth to connect the two Raspberry Pis. Our concern was the stability and accuracy of real-time communication over each kind of connection. Bluetooth has the advantage of a clean, low-latency communication channel, while WiFi has the advantage of covering a larger distance. After discussing with Michael and Rohit, we chose WiFi as our first option.

To begin with, we inserted our backup SD card into the new Raspberry Pi and registered the new device on the Cornell network through the website. Then we wrote the Python program for the communication between the two Raspberry Pis, treating the robot as the server and the controller as the client.

SERVER_IP = "10.148.10.156"

SERVER_PORT = 8888

start = time.time()

print("Starting socket: TCP...")

server_addr = (SERVER_IP, SERVER_PORT)

socket_tcp = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

Finally, we established communication between the two Raspberry Pis over WiFi. We tested it by having one Raspberry Pi take keyboard commands as input and confirmed that it successfully sent these commands to the other Raspberry Pi, which is mounted on our robot and makes it move forward, backward, right and left.
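The server/client exchange can be tried on a single machine before involving two boards. The sketch below is an assumption-laden illustration in Python 3, not the project's code: it runs both ends over the loopback address, lets the operating system pick a free port, and adds newline framing (which the original scripts do not use) so that commands sent back to back are not merged in the TCP stream. All function names are hypothetical.

```python
import socket
import threading


def run_server(server_sock, received):
    """Accept one client and collect newline-terminated commands."""
    conn, _addr = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        for line in reader:  # ends when the client closes the connection
            received.append(line.strip())


def send_commands_over_loopback(commands):
    """Send `commands` from a client to a local server; return what arrived."""
    server_sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_sock.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
    server_sock.listen(1)
    port = server_sock.getsockname()[1]

    received = []
    worker = threading.Thread(target=run_server, args=(server_sock, received))
    worker.start()

    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client.connect(("127.0.0.1", port))
    for command in commands:
        client.sendall((command + "\n").encode("utf-8"))
    client.close()

    worker.join(timeout=5)
    server_sock.close()
    return received


if __name__ == "__main__":
    print(send_commands_over_loopback(["forward", "left", "stop"]))
```

On the real robot the server would bind to the Raspberry Pi's own address and a fixed port, as in the snippet above.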

Voice Recognition

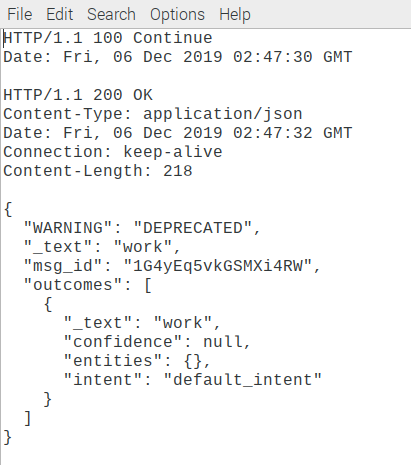

After building the basic version of the robot control and the WiFi communication between the two Raspberry Pis, we moved on to voice recognition. We used a USB microphone to record audio files and then sent a request to the Wit.ai API. Wit.ai is a free service that provides speech-to-text conversion. To begin with, we had to create an account with Wit.ai to acquire a token for authentication. We then tested it by creating an audio file and making a request to see whether it accurately converted our file into text.

For voice control, the main problem we needed to solve was how to store the data returned by the API request in a variable that we could use in our Python script. After referencing Rohit’s 5725 final project design, we found the solution: adding “-o output.txt” to the call redirects the returned information into a file. We could then read through this file and perform string matching and slicing to get the particular text we wanted. With this string, we used an if-else structure that checked for certain keywords. The commands we looked for were as follows: Forward; Backward; Left; Right. The following is a snippet of an output file example we got:

If the string contained, say, “forward” or “backward”, the robot would move regardless of the other words that were spoken. This eases some of the rigidity of having specific commands. If one of the four commands was registered, the appropriate function was called to execute it. Because we wanted voice control to work by simply speaking a command at any time, we created a polling system in which the microphone is always listening: it listens for five seconds, creates the audio file, runs the API call, and, once that computation is over, returns to listening. The issue with this approach was that the API call took rather long. Thus, we came up with the idea of downloading the package locally so that we do not need to visit the website every time we give a voice command.
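The keyword check described above can be sketched as below. This is a minimal illustration under assumptions: the JSON field `_text` is the one read in the appendix code, the sample response body is made up, "stop" is included because the controller script handles it, and the helper names `extract_text` and `match_command` are hypothetical.

```python
import json

COMMANDS = ("forward", "backward", "left", "right", "stop")


def extract_text(response_body):
    """Pull the recognized sentence out of a JSON response body."""
    return json.loads(response_body).get("_text", "")


def match_command(text):
    """Return the first command keyword found in `text`, or "" if none."""
    for word in text.lower().split():
        word = word.strip(".,!?")  # ignore trailing punctuation
        if word in COMMANDS:
            return word
    return ""


if __name__ == "__main__":
    body = '{"_text": "please go forward now"}'  # made-up sample response
    print(match_command(extract_text(body)))
    # prints forward
```

Matching whole words anywhere in the sentence keeps the relaxed behavior described above while avoiding false hits on substrings.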

As for testing, there were several phases we did for the voice recognition. First, we confirmed that our USB microphone was working and that the audio files it created were reliable. Next, we printed out the results from the API call and checked to see that it was accurately registering our words. Once this was confirmed, we tested that parsing through the output.txt file was done correctly by printing the string to the console. Lastly, after we incorporated the four commands we interactively tested that when we give commands the robot responds appropriately and moves as we wish.

Pygame User Interface

To interact better with users, we developed a graphical user interface that makes the robot more vivid. Once the robot receives a command, the PiTFT shows the direction the robot is going as an arrow. After traveling, when the robot goes back to the start point, the PiTFT displays the message “Going Home~”. Once the robot has returned home safe and sound, the whole PiTFT screen turns green, and a little frog bounces around during the celebration phase.
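The bouncing motion of the frog follows a simple rule: move by a fixed step, then flip the step along any axis where the sprite has crossed the screen edge. The sketch below shows that rule without pygame so it can run anywhere; the 320x240 screen and the (40, 40) step come from the appendix code, while the 64-pixel sprite size and the function name `bounce_step` are assumptions.

```python
def bounce_step(left, top, width, height, velocity, screen=(320, 240)):
    """Advance a sprite by one step and reflect its velocity at the edges.

    Returns the new (left, top, velocity).
    """
    vx, vy = velocity
    left += vx
    top += vy
    # Reverse the horizontal direction when the sprite leaves the screen
    if left < 0 or left + width > screen[0]:
        vx = -vx
    # Reverse the vertical direction likewise
    if top < 0 or top + height > screen[1]:
        vy = -vy
    return left, top, (vx, vy)


if __name__ == "__main__":
    left, top, velocity = 128, 88, (40, 40)
    for _ in range(6):
        left, top, velocity = bounce_step(left, top, 64, 64, velocity)
        print(left, top, velocity)
```

In the robot program the same check is applied to a `pygame.Rect` after calling its `move` method.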

We think the user interface is especially important for our project because we aim to design a robot that everyone can use without effort. The graphical feedback also makes our robot much more endearing.

Testing

Due to the nature of our project, most of our testing was very interactive with us testing out features as we implemented them. Our testing process was very modular with us implementing a feature and then testing it immediately. Our testing of each component of the project is described in our Design section after each component as we felt that structuring our design section this way more accurately described our design/testing process.

Issues and Solutions

After switching from the power supply to the power bank, we found that one of our robot's wheels did not work. We first thought there might be a problem with our servo control code. However, the two wheels are coded symmetrically, so we suspected the algorithm was not at fault. After several tries, we finally solved the problem by putting fresh batteries in the servo motor supply.

We used socket communication to implement the WiFi link. However, at first we could not even ssh between the two Raspberry Pis. To solve the problem, we first confirmed that both Raspberry Pis were registered with Cornell so that they could access the Cornell network. Then we made sure that both were on the same network, specifically RedRover. The next day, we found that the connection was working.

We first tried to call the service and get the response by sending a request to the website. We followed the tutorial and entered the command:

curl -XPOST 'http://api.wit.ai/speech' -i -k -L -H 'Authorization: Bearer EHY7GQOG3UD66RTYR4YK66BFRPIGGJ6Z' -H 'Content-Type: audio/wav' --data-binary '@test.wav' -o out.txt

But we got an HTTP 400 Bad Request error and an HTTP 301 Moved Permanently error. We spent a lot of time debugging and finally solved the problem by correcting the address to the current version. The command is shown below:

curl -XPOST 'https://api.wit.ai/speech?v=20141022' \
-i -L \
-H 'Authorization: Bearer 3YN33OHWQJYVSIVM4NZM5JCNWN5QN3E2' \
-H 'Content-Type: audio/wav' \
--data-binary '@test.wav' -o out.txt
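For readers who prefer to stay in Python, the same request can be assembled with the standard library. The sketch below only builds the request object and does not send it; the URL and headers are copied from the curl command above, the token is a placeholder, and the function name `build_speech_request` is hypothetical. Whether this API version is still served is not something the sketch can confirm.

```python
import urllib.request

# Endpoint and version string taken from the curl command above
WIT_SPEECH_URL = "https://api.wit.ai/speech?v=20141022"


def build_speech_request(wav_bytes, token):
    """Build (but do not send) a POST request carrying WAV audio."""
    return urllib.request.Request(
        WIT_SPEECH_URL,
        data=wav_bytes,
        headers={
            "Authorization": "Bearer " + token,
            "Content-Type": "audio/wav",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder audio bytes and token; a real call needs a real recording
    request = build_speech_request(b"RIFF", "YOUR_WIT_TOKEN")
    print(request.get_method(), request.full_url)
    # Passing `request` to urllib.request.urlopen would perform the call.
```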

Results

We met all our intended goals for the Travel Robot. After the Python code starts, the WiFi connection between the two Raspberry Pis is established first. Once we give a voice command, the controller receives the audio through the USB microphone and converts the speech to text with the help of Wit.ai. After about five seconds, our travel robot receives the command over WiFi and moves as the command directs. Once the robot has arrived at the destination and we give a stop command, the robot returns to the start point automatically with a “Going Home~” message displayed on the PiTFT screen. If our robot arrives home safely, it dances happily to celebrate. After one loop, the whole system is automatically reset to the initial state, which means that it is ready for a new adventure.

Future Work

Due to the time limitation, there are many aspects that could be improved to further enhance our robot. First of all, we experienced some latency with the voice recognition and WiFi communication. If we had more time, we would figure out how to make this process smoother and more time-efficient.

Next, we would like to add ultrasonic distance sensors so that the travel robot can avoid obstacles, which would improve the reliability of our robot. In addition, we would like to make the robot “see” the road using a Raspberry Pi Camera, so that we can control our robot even when it is out of our sight. We could also use the OpenCV library to make it stop when it detects and identifies a specific target.

Work Distribution

Demo Day Picture

Minghan Shi

ms3536@cornell.edu

Voice Recognition, WiFi Connection,

Servo Control, Pygame User Interface.

Qianqian Hou

qh98@cornell.edu

Hardware PWM, Hardware & Software Testing,

Pygame User Interface, Website.

Parts List

- Two Raspberry Pi: $35.00 x 2

- Servo Motor - Provided in lab

- USB microphone - Provided in lab

- LEDs, Resistors and Wires - Provided in lab

Total: $70.00

References

Wit.ai Doc

Parallax Servo Datasheet

Pygame Docs

ECE5725 Final Project: Sustainable SmartRoom

R-Pi GPIO Document

Acknowledgement

We would like to thank Professor Joe Skovira and all the TAs who guided us throughout the semester. Thank you!

Code Appendix

# ECE 5725 Final Project: Travel Robot

# Authors: Minghan Shi (ms3536), Qianqian Hou (qh98)

# 12/5/2018

# robot.py

import socket

import time

import sys

import os

import RPi.GPIO as GPIO

import pygame

from pygame.locals import*

# load the program to PiTFT and enable the mouse

os.putenv('SDL_VIDEODRIVER','fbcon')

os.putenv('SDL_FBDEV','/dev/fb1')

os.putenv('SDL_MOUSEDRV','TSLIB')

os.putenv('SDL_MOUSEDEV','/dev/input/touchscreen')

pygame.init()

pygame.mouse.set_visible(False)

BLACK = 0,0,0

WHITE=255,255,255

RED = 255,0,0

GREEN = 0,255,0

screen=pygame.display.set_mode((320,240))

size = width,height = 320,240

my_font=pygame.font.Font(None,20)

# define host ip: Rpi's IP

HOST_IP = "10.148.10.156"

HOST_PORT = 8888

print("Starting socket: TCP...")

# 1.create socket object:socket=socket.socket(family,type)

socket_tcp = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

print("TCP server listen @ %s:%d!" %(HOST_IP, HOST_PORT) )

host_addr = (HOST_IP, HOST_PORT)

# 2.bind socket to addr:socket.bind(address)

socket_tcp.bind(host_addr)

# 3.listen connection request:socket.listen(backlog)

socket_tcp.listen(1)

# 4.waite for client:connection,address=socket.accept()

socket_con, (client_ip, client_port) = socket_tcp.accept()

print("Connection accepted from %s." %client_ip)

socket_con.send("Welcome to RPi TCP server!")

start=time.time()

# set up the GPIO

GPIO.setmode(GPIO.BCM)

GPIO.setup(27, GPIO.IN, pull_up_down=GPIO.PUD_UP)

GPIO.setup(17, GPIO.IN, pull_up_down=GPIO.PUD_UP)

GPIO.setup(6, GPIO.OUT)

GPIO.setup(19, GPIO.OUT)

spe = [40,40]

p=GPIO.PWM(6,1000/21.5)

q=GPIO.PWM(19, 1000/21.5)

p.start(0)

q.start(0)

def right(length=0.5,sp=0.2):
    # Turn right: drive servo p only for `length` seconds, then stop it
    sp10 = sp*100
    p.ChangeFrequency(1000/(21.5+sp))
    p.ChangeDutyCycle((150+sp10)/(21.5+sp))
    time.sleep(length)
    p.ChangeFrequency(1000/21.5)
    p.ChangeDutyCycle(0)

def forward(length=2.5,sp=0.2):
    # Go forward: drive both servos in opposite pulse directions
    sp10 = sp*100
    p.ChangeFrequency(1000/(21.5+sp))
    p.ChangeDutyCycle((150+sp10)/(21.5+sp))
    q.ChangeFrequency(1000/(21.5-sp))
    q.ChangeDutyCycle((150-sp10)/(21.5-sp))
    time.sleep(length)
    p.ChangeFrequency(1000/21.5)
    q.ChangeFrequency(1000/21.5)
    p.ChangeDutyCycle(0)
    q.ChangeDutyCycle(0)

def left(length=0.5,sp=0.2):
    # Turn left: drive servo q only for `length` seconds, then stop it
    sp10 = sp*100
    q.ChangeFrequency(1000/(21.5-sp))
    q.ChangeDutyCycle((150-sp10)/(21.5-sp))
    time.sleep(length)
    q.ChangeFrequency(1000/21.5)
    q.ChangeDutyCycle(0)

def backward(length=2.5,sp=0.2):
    # Go backward: same as forward() with the pulse offsets swapped
    sp10 = sp*100
    p.ChangeFrequency(1000/(21.5-sp))
    p.ChangeDutyCycle((150-sp10)/(21.5-sp))
    q.ChangeFrequency(1000/(21.5+sp))
    q.ChangeDutyCycle((150+sp10)/(21.5+sp))
    time.sleep(length)
    p.ChangeFrequency(1000/21.5)
    q.ChangeFrequency(1000/21.5)
    p.ChangeDutyCycle(0)
    q.ChangeDutyCycle(0)

def quit():
    # Stop both servos
    print"Quit"
    p.ChangeFrequency(1000/21.5)
    q.ChangeFrequency(1000/21.5)
    p.ChangeDutyCycle(0)
    q.ChangeDutyCycle(0)

# Clear the command log, then reopen it for appending
open('record.txt','w').close()
file1 = open("record.txt","a")

while time.time()-start <=600:
    data = socket_con.recv(512)
    # A low level on GPIO 27 aborts the run
    if (not GPIO.input(27)):
        break
    if len(data)>0:
        print("Received:%s"%data)
        if data == 'forward':
            arrow = pygame.image.load("left.png")
            arrow_rect = arrow.get_rect(center=(160,120))
            screen.blit(arrow,arrow_rect)
            pygame.display.flip()
            forward()
            screen.fill(BLACK)
            pygame.display.flip()
            file1.write(data+"\n")
        elif data == 'backward':
            arrow = pygame.image.load("right.png")
            arrow_rect = arrow.get_rect(center=(160,120))
            screen.blit(arrow,arrow_rect)
            pygame.display.flip()
            backward()
            screen.fill(BLACK)
            pygame.display.flip()
            file1.write(data+"\n")
        elif data == 'right':
            arrow = pygame.image.load("forward.png")
            arrow_rect = arrow.get_rect(center=(160,120))
            screen.blit(arrow,arrow_rect)
            pygame.display.flip()
            right()
            screen.fill(BLACK)
            pygame.display.flip()
            file1.write(data+"\n")
        elif data == 'left':
            arrow = pygame.image.load("backward.png")
            arrow_rect = arrow.get_rect(center=(160,120))
            screen.blit(arrow,arrow_rect)
            pygame.display.flip()
            left()
            screen.fill(BLACK)
            pygame.display.flip()
            file1.write(data+"\n")
        else:
            # Any other message (the controller sends "quit") starts the return trip
            screen.fill(BLACK)
            file1.close()
            file2 = open("record.txt")
            arrow2 = pygame.image.load("frog.png")
            arrow_rect2 = arrow2.get_rect(center=(160,120))
            screen.blit(arrow2,arrow_rect2)
            pygame.display.flip()
            # Push the recorded commands on a stack so they are undone last-in, first-out
            stack = []
            for line in file2:
                stack.append(line)
            while(len(stack)!=0):
                #screen.fill(RED)
                line2 = stack.pop()
                print(line2)
                if line2 == "forward\n":
                    backward(2.7)
                    time.sleep(1)
                elif line2 == "backward\n":
                    forward(2.4)
                    time.sleep(1)
                elif line2 == "right\n":
                    left()
                    time.sleep(1)
                elif line2 == "left\n":
                    right()
                    time.sleep(1)
            # Home again: show a green screen, then start the celebration dance
            screen.fill(GREEN)
            pygame.display.flip()
            time.sleep(8)
            file3 = open("dance.txt")
            screen.fill(BLACK)
            arrow3 = pygame.image.load("frog2.png")
            arrow_rect3 = arrow3.get_rect(center=(160,120))
            screen.blit(arrow3,arrow_rect3)
            pygame.display.flip()
            for line in file3:
                screen.fill(BLACK)
                # Move the frog and bounce it off the screen edges
                arrow_rect3 = arrow_rect3.move(spe)
                if (not GPIO.input(27)):
                    break
                if arrow_rect3.left < 0 or arrow_rect3.right>320:
                    spe[0] = -spe[0]
                if arrow_rect3.top < 0 or arrow_rect3.bottom>240:
                    spe[1] = -spe[1]
                screen.blit(arrow3,arrow_rect3)
                pygame.display.flip()
                if line == "forward\n":
                    forward(1.5,0.5)
                    time.sleep(0.2)
                elif line == "backward\n":
                    backward(1.5,0.5)
                    time.sleep(0.2)
                elif line == "right\n":
                    right(0.5,0.5)
                elif line == "left\n":
                    left(0.5,0.5)
            quit()
            screen.fill(GREEN)
            file2.close()
            socket_tcp.close()
            socket_con.close()
            socket_tcp = None
            socket_con = None
            #os.system("lsof -i :8888")
            break
        # Echo the command back to the controller
        socket_con.send(data)
        time.sleep(1)
        continue

quit()
#file2.close()
GPIO.cleanup()
#socket_tcp.close()

# ECE 5725 Final Project: Travel Robot

# Authors: Minghan Shi (ms3536), Qianqian Hou (qh98)

# 12/5/2018

# controller.py

import socket

import time

import sys

import os

from wit import Wit

def locRec():
    # Record two seconds of audio and send it to Wit.ai for speech-to-text
    client = Wit('3YN33OHWQJYVSIVM4NZM5JCNWN5QN3E2')
    resp = None
    os.system('arecord -D plughw:1,0 -d 2 test.wav')
    with open('test.wav', 'rb') as f:
        resp = client.speech(f, None, {'Content-Type': 'audio/wav'})
    #print('Yay, got Wit.ai response: ' + str(resp["_text"]))
    result = str(resp["_text"])
    if len(result) < 1:
        print("Warning: Failed to record your voice, please say it again.")
        return ""
    # Map the recognized text, including common misrecognitions, to a command
    if result == "work" or result == "word" or result == "forward" or result[0]=='f' or result[0] == 'w':
        return "forward"
    elif result[0] == 'l' or result == "left":
        return "left"
    elif (result[0] == 'b' and len(result)>3) or result == "backward":
        return "backward"
    elif result == "right" or result[0]=='r':
        return "right"
    elif result == "stop":
        return "stop"
    else:
        print("Identified your voice as "+result+"\n"+"Please speak more clearly.")
        return ""

#RPi's IP

SERVER_IP = "10.148.10.156"

SERVER_PORT = 8888

start = time.time()

print("Starting socket: TCP...")

server_addr = (SERVER_IP, SERVER_PORT)

socket_tcp = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

# Keep retrying until the robot's server accepts the connection
while True:
    try:
        print("Connecting to server @ %s:%d..." %(SERVER_IP, SERVER_PORT))
        socket_tcp.connect(server_addr)
        break
    except Exception:
        print("Can't connect to server,try it later!")
        time.sleep(1)
        continue

string = "start"
while time.time()-start <= 300:
    while (string != "stop"):
        string = locRec()
        if string == "left" or string == "right" or string == "forward" or string == "backward":
            socket_tcp.send(string)
            time.sleep(1)
        elif string == "stop":
            # Tell the robot to return home, then exit
            socket_tcp.send("quit")
            sys.exit(1)

#data = socket_tcp.recv(512)

#if len(data)>0:

# print("Received: %s" % data)

# command=raw_input()

# socket_tcp.send(command)

# time.sleep(1)

# continue

#except Exception:

# socket_tcp.close()

# socket_tcp=None

# sys.exit(1)

#socket_tcp.close()

#socket_tcp = None

#os.system("lsof -i :8888")

sys.exit(1)