Recipe Helper

Saturday, December 9th, 2017

ECE 5725 Final Project

Ian Hoffman (ijh6) and Tianjie Sun (ts755)

Project Objective:

We decided to build an interactive cooking assistant for the kitchen, similar to the Amazon Echo or Google Home, which provides voice-controlled help for different essential kitchen tasks. The main problems it solves are finding recipes to cook with the ingredients one has on hand, and speaking recipe steps during cooking to avoid the need for a home cook to constantly look at a recipe and stop tending to the food.

Introduction

For this project, we integrated Google’s new AIY Audio kit with the Raspberry Pi, as a means to easily perform speech-to-text on the user’s words, and text-to-speech for the assistant’s output. With this kit as our input/output device, we created our assistant software, which uses Python’s Selenium library and the headless browser PhantomJS to search a popular cooking website, Allrecipes, for relevant recipes. We created an intuitive, state-based control flow for the voice assistant so that finding and cooking to a recipe would be as natural as possible. Also, with more detailed Selenium web navigation, we were able to present users with detailed information about the cooking time, caloric content, number of servings, and more.

Design and Testing

The first step of our project was to order the Google AIY kit and assemble it, according to the instructions included on the AIY Voice kit’s page found in References. The AIY kit uses a custom Raspbian image supplied on this site, so we flashed that image onto our Raspberry Pi. Next, we checked the test scripts supplied by Google to make sure the kit was working properly. Once we had verified the correctness of our assembly, we needed to get two different authentication files from Google Cloud Platform that enabled us to use all Google Assistant and Cloud Speech APIs.

After adding the credentials required for these APIs, we realized that using the actual Google Assistant would be unwise for our project. Its conversation API is very much a black box and, like Siri and Alexa, it comes with its own ingrained understanding of data retrieval on the internet. As a result, it would have taken over certain aspects of our recipe retrieval process while proving unable to do others. We therefore decided to forgo the Google Assistant API and instead use only the Cloud Speech API for voice recognition. We used this API in its most basic form, with none of the built-in keyword recognition functions enabled. To listen to the user’s voice until they have stopped talking and return a string conversion of their speech, we only needed a couple of lines of code. First,

aiy.cloudspeech.get_recognizer()

retrieves the voice recognizer singleton. After that, we could call recognizer.recognize() to listen for the user’s speech. We did our own preprocessing and keyword extraction on the returned text, rather than relying on Cloud Speech’s expect function. To present voice prompts to the user before listening for a response or to speak important recipe information, we used the aiy.audio.say() function, which works as a text-to-speech synthesizer for any string, essentially becoming a print function in audio form. With these two main API calls, we had the framework we needed to do audio-based input/output, the most essential interactive part of this project.
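The prompt-then-listen pattern described above can be sketched roughly as follows. This is an illustrative sketch rather than the project's actual code: the helper names `ask` and `make_aiy_io` are hypothetical, and the sketch assumes that `recognize()` may return `None` when nothing was heard and that the recorder must be started before listening. The speech functions are passed in as arguments so the logic can be tried without the kit attached.

```python
def ask(prompt, say, recognize):
    """Speak a prompt, then return the user's reply as normalized text."""
    say(prompt)
    text = recognize()  # assumed to be None when nothing was heard
    return (text or "").strip().lower()


def make_aiy_io():
    """Build (say, recognize) from the AIY kit. Only works on the device."""
    # Imported lazily so the rest of the sketch runs without the AIY libraries.
    import aiy.audio
    import aiy.cloudspeech

    recognizer = aiy.cloudspeech.get_recognizer()
    aiy.audio.get_recorder().start()  # assumption: start the microphone stream first
    return aiy.audio.say, recognizer.recognize
```

On the kit, this would be used as `say, recognize = make_aiy_io()` followed by `reply = ask("What ingredients do you have?", say, recognize)`, where the prompt wording is only an example.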

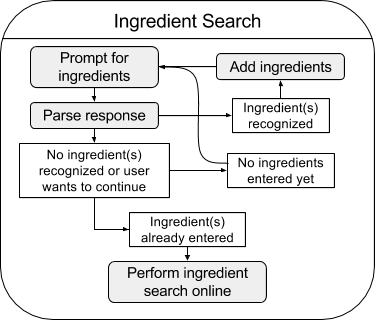

The assistant’s control flow has many different steps (or states), and functions much like a state machine, where each state represents a different recipe helping task, and each transition is controlled by a phrase spoken by the user. The states are search mode, results mode, recipe mode, ingredients mode, and cook mode. We will describe each mode one by one, with flowcharts for the more complex ones.

The main step of interest in this ingredient entry/search flow is the “Parse response” step. In this phase, the user’s spoken words are compared to a list of recognized ingredients, loaded from an ingredient manifest file stored on the Raspberry Pi. Ingredients are searched for and extracted in order of descending size, so that phrases like “Russet potato” are recognized earlier than “potato,” allowing for correct recognition of more specific variants of foods. This is especially important with common terms like “powder,” which can be preceded by many different words like “onion,” “garlic,” “chili,” “curry,” and “baking.”
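A minimal sketch of this longest-first extraction is given here, assuming the manifest has already been loaded into a list of ingredient names. The function name `extract_ingredients` and the whole-word matching rule are assumptions made for illustration, not the project's exact implementation.

```python
import re


def extract_ingredients(text, known_ingredients):
    """Return the known ingredients found in text, longest phrases first."""
    remaining = text.lower()
    found = []
    # Longest names first, so "russet potato" is claimed before "potato".
    for name in sorted(known_ingredients, key=len, reverse=True):
        pattern = r"\b" + re.escape(name.lower()) + r"\b"
        if re.search(pattern, remaining):
            found.append(name)
            # Remove the matched phrase so shorter names cannot match inside it.
            remaining = re.sub(pattern, " ", remaining)
    return found
```

Removing each matched phrase from the text is what keeps “garlic powder” from also being counted as “garlic” and “powder.”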

If ingredients are successfully recognized and extracted from the converted text, the user is prompted for more ingredients. If no ingredients are extracted, one of two cases triggers the online search. If the user said nothing, the program assumes there are no further ingredients and moves on to search online. Likewise, if the user says a keyword like “nothing,” “that’s it,” or “that’s all,” the search is performed. In either case, if no ingredients have been entered yet, the ingredient search state machine returns to the ingredient prompt instead of searching online.
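The decision made after each reply can be sketched as a small function. The name `next_action` and its return values are hypothetical, and re-prompting on speech that is neither an ingredient nor a finishing phrase is an assumption, since the behavior for that case is not spelled out above.

```python
# Finishing phrases taken from the description above (straight apostrophes assumed).
DONE_PHRASES = ("nothing", "that's it", "that's all")


def next_action(text, new_ingredients, collected):
    """Decide what the ingredient-entry state does after one reply.

    Returns "prompt" to ask for more ingredients or "search" to go online.
    """
    if new_ingredients:
        collected.extend(new_ingredients)
        return "prompt"
    said_nothing = not text.strip()
    said_done = any(phrase in text.lower() for phrase in DONE_PHRASES)
    # Only search once at least one ingredient has been entered.
    if (said_nothing or said_done) and collected:
        return "search"
    return "prompt"  # assumption: anything else simply re-prompts
```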

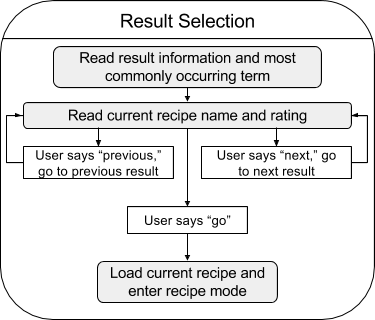

After recipes have been returned from the online ingredient search, Recipe Helper will enter the result selection mode, whose flowchart is shown below.

This mode is simpler than the last, and only recognizes three keywords, “previous,” “next,” and “go.” It starts by speaking the number of results found and the number of ads/unrelated results filtered out, as well as the most commonly occurring term in the retrieved recipes’ titles. Next, it gives instructions for how to navigate the recipes using the above three keywords, and starts the recipe selection loop.
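One way to find the most commonly occurring term in the retrieved titles is a simple word count, sketched here. The stop-word list and the function name `most_common_term` are assumptions for illustration; the filtering actually used in the project may differ.

```python
import re
from collections import Counter

# Hypothetical stop words, so filler like "and" is never announced as the top term.
STOP_WORDS = {"a", "and", "for", "in", "of", "the", "with"}


def most_common_term(titles):
    """Return the most frequent non-stop word across recipe titles, or None."""
    words = []
    for title in titles:
        for word in re.findall(r"[a-z']+", title.lower()):
            if word not in STOP_WORDS:
                words.append(word)
    if not words:
        return None
    return Counter(words).most_common(1)[0][0]
```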

At each iteration of this loop, it reads out the name and rating (out of 5 stars) of the current recipe. Afterwards, it expects users to either navigate to an adjacent recipe result, or choose the current recipe result. If the current recipe result is chosen, the webpage for the current recipe is loaded and many pieces of information about the recipe are scraped, including number of servings, calorie count, prep time, cook time, total time, ingredients, and steps. After these are loaded, the system transitions to the recipe mode. Another word, “search,” can also be said to return to ingredient search mode if no satisfactory recipes are returned.
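The selection loop can be sketched as follows, with the speech functions passed in as `listen` and `say`. The function name, the `(title, rating)` tuples, and the spoken wording are hypothetical, and stopping at the first and last result rather than wrapping around is an assumption.

```python
def select_result(results, listen, say):
    """Step through (title, rating) results under voice control.

    Returns ("recipe", index) when a result is chosen,
    or ("search", None) to go back to ingredient search.
    """
    index = 0
    while True:
        title, rating = results[index]
        say("{}, rated {} out of 5 stars".format(title, rating))
        words = listen().lower().split()
        if "next" in words:
            index = min(index + 1, len(results) - 1)  # assumption: no wraparound
        elif "previous" in words:
            index = max(index - 1, 0)
        elif "go" in words:
            return "recipe", index
        elif "search" in words:
            return "search", None
        # Anything else falls through and the current result is read again.
```

Matching on whole words rather than substrings keeps a word like “mango” from being mistaken for “go.”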

Recipe mode is simple enough not to need a flowchart, since it acts as a transitional state between results and cooking. At the beginning of recipe mode, Recipe Helper reads out the shorter pieces of information about the chosen recipe: the title, number of servings, number of calories, and prep/cook/total times if they were included on the recipe’s webpage. After this phase, recipe mode recognizes four phrases: “ingredients,” “cook,” “results,” and “search.” These four keywords do the following:

- Ingredients: transition to ingredient readout mode

- Cook: transition to cooking mode

- Results: transition back to result selection mode

- Search: transition back to ingredient search mode
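Keyword-driven transitions like these map naturally onto a lookup table. The sketch below covers recipe mode only; the table layout, the mode names as strings, and the choice to stay in the current mode on unrecognized speech are assumptions for illustration.

```python
# Keyword -> next mode, for recipe mode as listed above.
TRANSITIONS = {
    "recipe": {
        "ingredients": "ingredients",
        "cook": "cook",
        "results": "results",
        "search": "search",
    },
}


def next_mode(mode, text):
    """Return the mode to enter after hearing text while in the given mode."""
    words = text.lower().split()
    for keyword, target in TRANSITIONS[mode].items():
        if keyword in words:
            return target
    return mode  # assumption: unrecognized speech keeps the current mode
```

Other modes could be added as further entries in the same table without changing `next_mode`.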

The next mode to discuss is ingredients mode, which, like recipe mode, is relatively simple and needs no flowchart. It reads through the ingredients in the recipe one by one. One small challenge in making the ingredient readout sound good was converting fraction substrings like “3/4” parsed from the ingredients list on the recipe webpage into strings that read well using aiy.audio.say(). Using regular expression parsing and a translation table, we convert the above example to “three quarters,” which sounds much better than “three slash four,” the output before this change. After the ingredient readout is finished, five phrases are recognized: “repeat,” “cook,” “recipe,” “results,” and “search.” These five keywords do the following:

- Repeat: repeat the current mode, reading all ingredients again

- Cook: transition to cooking mode

- Recipe: transition back to recipe mode

- Results: transition back to result selection mode

- Search: transition back to ingredient search mode
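The fraction conversion used in the ingredient readout can be sketched with a regular expression and two small translation tables. The tables, the function name `speak_fractions`, and the handling of mixed numbers such as “1 1/2” are assumptions for illustration, not the project's exact code; fractions outside the tables are left unchanged.

```python
import re

# Hypothetical translation tables covering common recipe fractions.
NUMERATORS = {1: "one", 2: "two", 3: "three", 4: "four", 5: "five", 6: "six", 7: "seven"}
DENOMINATORS = {2: "half", 3: "third", 4: "quarter", 8: "eighth"}

# Optional whole number, then numerator/denominator, e.g. "3/4" or "1 1/2".
FRACTION = re.compile(r"(?:(\d+)\s+)?(\d+)/(\d+)")


def speak_fractions(text):
    """Replace fractions like "3/4" with words that read well aloud."""
    def replace(match):
        whole = match.group(1)
        num, den = int(match.group(2)), int(match.group(3))
        if num not in NUMERATORS or den not in DENOMINATORS:
            return match.group(0)  # unknown fraction: leave as written
        word = DENOMINATORS[den]
        if num > 1:
            word = "halves" if den == 2 else word + "s"
        spoken = NUMERATORS[num] + " " + word
        return "{} and {}".format(whole, spoken) if whole else spoken

    return FRACTION.sub(replace, text)
```

For example, `speak_fractions("3/4 cup sugar")` yields “three quarters cup sugar,” which can then be passed to the text-to-speech call.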

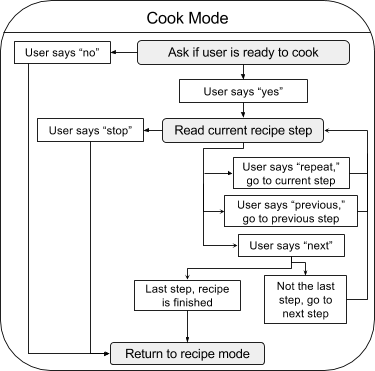

The last and arguably most important mode is cook mode, in which the user can cook along with Recipe Helper, requesting subsequent steps of the recipe when ready, or navigating through the recipe in other useful ways. A flowchart for the cook mode is shown below:

The cook mode starts with an initial prompt allowing users to opt out of cooking early if they’ve changed their mind. After this yes/no decision, the cooking process starts, which involves a cook loop that reads the current step of the recipe and listens for one of four keywords: “stop,” “repeat,” “previous,” and “next.” These four keywords do the following:

- Stop: Another opt out, stopping the cook process and returning to recipe mode

- Repeat: Lets the user hear the current step again for clarification

- Previous: Goes to the previous step in the recipe

- Next: Proceeds normally through the recipe to the next step

After the cook process is completed or cancelled, Recipe Helper returns to recipe mode, but does not read the less lengthy recipe information, having already read it prior to entering the cook mode.
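The cook loop can be sketched as follows, again with the speech functions passed in as `listen` and `say`. The function name, the prompt wording, and the choice to re-read the current step on unrecognized speech are assumptions for illustration.

```python
def cook(steps, listen, say):
    """Read recipe steps aloud under voice control; returns the next mode."""
    # Initial opt-out prompt (wording is hypothetical).
    say("Are you ready to start cooking?")
    if "yes" not in listen().lower().split():
        return "recipe"

    index = 0
    while index < len(steps):
        say("Step {}: {}".format(index + 1, steps[index]))
        words = listen().lower().split()
        if "stop" in words:
            break
        if "previous" in words:
            index = max(index - 1, 0)
        elif "next" in words:
            index += 1
        # "repeat" (or anything unrecognized) re-reads the current step.
    return "recipe"  # completed or cancelled, control goes back to recipe mode
```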

At any time, users can press the red button on top of the AIY Voice Kit as an easy way to power down the device. This is also implemented with a voice kit helper function, aiy.voicehat.get_button().on_press(). This completes the functionality of the Recipe Helper robot.

We tested this project in several components. First off, we tested the speech and voice capture technologies using the provided scripts from Google. Next, we tested the Selenium recipe flow by creating it separately from the audio functions and interacting with the script in a live Python environment and a remotely-controlled browser session. After linking the audio components and the web components together, we tested and modified the control flow, making sure that Recipe Helper could correctly interact with users, fetch recipes, and give information on them in a way that felt natural. Finally, we added the ingredient manifest described above, ingredients.txt, to allow for recognition of an arbitrary, wider range of ingredients.

Results

While this project ended up as a success, we ran into several roadblocks along the way, mainly with getting a version of Selenium working at a decent speed on the Raspberry Pi. Since the provided AIY image was based on Raspbian Jessie, we initially set out to work with Selenium in Jessie. Having read online that using Selenium with Chromium was very difficult, we first tried Geckodriver with Firefox/Iceweasel. We found a version of Geckodriver compatible with the version of Selenium on Jessie (Geckodriver v0.18.0 and Selenium 3.7.0), but this setup ran unacceptably slowly, so we abandoned it as an option.

Next, we revisited the Chromium option, attempting to use versions of chromedriver compiled for the armhf architecture. However, these were not compatible with the version of Chromium installed on Jessie, and the binaries online that were compatible with that version of Chromium were not compatible with the Raspberry Pi, resulting in an exec format error.

We then tried to use PhantomJS and ghostdriver on Jessie, but the apt-get version of PhantomJS was too old for Selenium 3.7.0, and didn’t accept the options that Selenium expected it to take. After installing a newer version of PhantomJS downloaded from the web, we found that it was incomplete: it could not find elements by CSS selectors due to missing atoms. Other solutions, like installing through npm, proved similarly fruitless.

After more research, we realized that the version of Chromium we would need to achieve Selenium/chromedriver compatibility was v59 or later. However, this version of Chromium is not available on Jessie, so we decided to upgrade to Stretch to check whether this would allow our setup to work. After a long upgrade procedure, we tried out the test script in Stretch with the chromedriver version from before and the version of Selenium provided in Python 3. This setup worked well, but ran into some issues in headless mode, with elements being falsely labeled as invisible.

We wanted to see if PhantomJS would also run into a similar problem to headless Chromium, and if it would work at all in Stretch. Using the version of PhantomJS that came from apt-get in Stretch, along with the version of Selenium provided in Python 3, we were able to achieve slightly faster headless browser functionality than Chromium, while also not running into any runtime errors with fetching web elements. This Selenium setup was the one we went with in the end.

We were worried that after this upgrade, we would not be able to integrate the AIY kit’s APIs with our code, despite having read that the Voice Kit can work correctly in Stretch instead of Jessie. However, to our surprise, after reinstalling some of the pip packages in the AIY Python environment, we were greeted with a fully-functional AIY Kit, running on Stretch, with working Selenium functionality. These arduous steps were what allowed all aspects of our project to work together.

Conclusion

In conclusion, the Recipe Helper ended up as a very useful cooking robot that is intelligent enough to guide users through the entire cooking process. Users can speak any of hundreds of recognized ingredients, thanks to Google’s Cloud Speech API and our data-driven keyword extraction code. It is easy to see, after completing this project, how Recipe Helper could be used in real home kitchens by real home cooks to facilitate creativity with ingredients and foster passion in cooking.

Future Work

In the future, we could add several features to make Recipe Helper a more robust system. First of all, we could add an account management system to this cooking assistant, allowing for individual configuration of the device for better customization. For example, food allergies, medical conditions, and dietary restrictions could all be entered on a per-user basis, allowing for further filtering of recipes. Additionally, health constraints and ingredient preferences could be configured by users to help with diets and help avoid undesired foods. Another good addition would be Bluetooth integration with the user’s smartphone. This would allow for a real-time readout of ingredients, recipe results, recipe thumbnails, and cooking instructions to be sent to users’ phones, giving another way to digest the information found by Recipe Helper.

Contributions

Project group picture

Ian Hoffman

ijh6@cornell.edu

Wrote and tested Selenium demos and final software, integrated speech recognition and voice feedback code with final Recipe Helper Selenium software, troubleshot Selenium webdrivers, carried out and resolved upgrade to Stretch, assembled ingredient manifest, helped with final system testing.

Tianjie Sun

ts755@cornell.edu

Assembled hardware kit, created project on Google Cloud Platform to get API authentication, researched and wrote draft code for speech recognition and voice feedback, helped with final system testing.

Parts List

- Raspberry Pi $35.00

- AIY Voice Kit $25.00